Attention Is All You Need: The Original Transformer Architecture

This newsletter is the latest chapter of the Big Book of Large Language Models. You can find the preview here, and the full chapter is available in this newsletter.

The Self-Attention Mechanism

The Multi-head Attention Layer

The Positional Encoding

The Encoder

The Residual Connections

The Layer Normalization

The Position-wise Feed-Forward Network

The Decoder

The Cross-Attention

Masking The Self-Attention Layer

The Prediction Head

The Decoding Process

Training For Causal Language Modeling

Understanding the scale of the model

Estimating The Number Of Model Parameters

Estimating The Floating‐Point Operations

The Different Architecture Variations

The Encoder-Only Architecture

The Decoder-Only Architecture

The Encoder-Decoder Architecture

The "Attention Is All You Need" paper is one of the most influential works in modern AI. By replacing recurrence with self-attention mechanisms, the authors introduced the Transformer architecture, a design that enabled parallelized training, captured long-range dependencies in data, and scaled to unprecedented model sizes. This innovation not only displaced RNNs in most sequence-modeling tasks but also laid the groundwork for BERT, GPT, and the modern LLM revolution, powering breakthroughs from conversational AI to protein folding. Beyond technical innovations, the paper catalyzed a paradigm shift toward general-purpose foundation models trained on massive datasets and reshaped industries from healthcare to creative arts. In essence, it transformed how humanity interacts with language, knowledge, and intelligence itself.

Architecture Overview

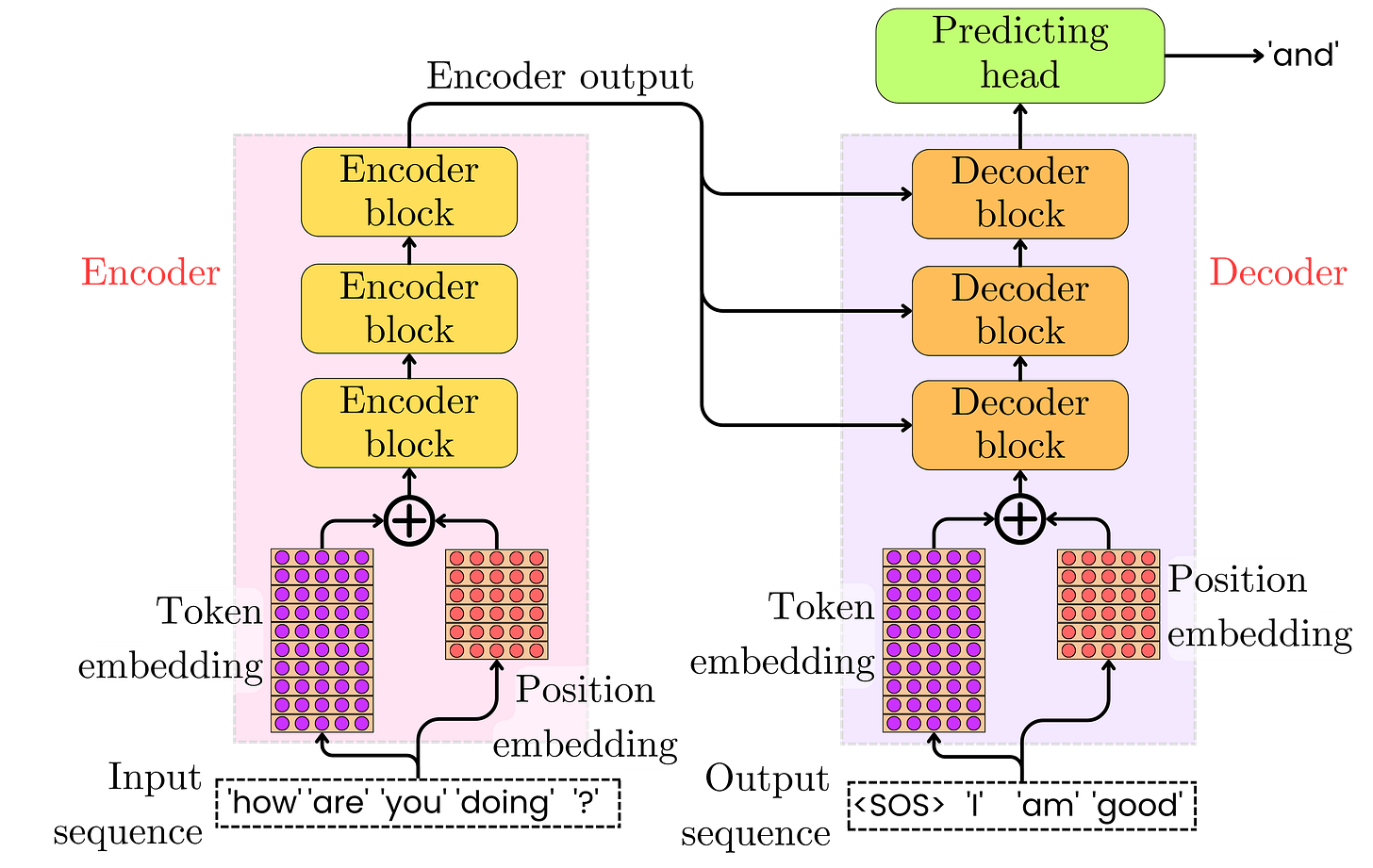

The architecture presented in the "Attention Is All You Need" paper builds directly on the RNN encoder-decoder architecture while discarding recurrence entirely. It keeps a Bahdanau/Luong-style cross-attention between the encoder and the decoder, and adds intra-sequence attention (self-attention) in place of the recurrent layers. There are four important components to the architecture:

The embeddings: Besides the token embeddings necessary to project the tokens into their vector representations, the Transformer introduced the need for positional encoding to ensure that the information related to the token positions is captured by the model.

The encoder: As in the RNN encoder-decoder, the encoder is in charge of encoding the input sequence into vector representations such that the decoder has enough information to decode the output sequence. It comprises a stack of identical encoder blocks, each with multi-head self-attention (capturing global dependencies) and a position-wise feed-forward network (applying non-linear transformations).

The decoder: Similar to the encoder but adds masked multi-head self-attention (preventing future token visibility) and encoder-decoder attention (aligning decoder inputs with encoder outputs, akin to Bahdanau/Luong but without RNNs). As before, the autoregressive generation proceeds token-by-token.

Prediction head: The prediction head is a classifier over the whole token vocabulary made out of a linear layer followed by Softmax, converting the decoder's final hidden states into token probabilities to predict the next word.

We will cover each component in detail in the remainder of this chapter. Self-attention and position embedding are central to the transformer architecture, and we need to discuss those technical innovations before we can understand the entire architecture.

The Self-Attention

The Self-Attention Mechanism

The Architecture

In the case of the Bahdanau/Luong attention, the goal was to capture the interactions between the tokens of the input sequence and the ones of the output sequence. In the Transformer, the self-attention captures the token interactions within the sequences. It is composed of three linear layers: WK, WQ, and WV. The input vectors to the attention layer are the internal hidden states hi resulting from the model inputs. There are as many hidden states as tokens in the input sequence, and hi corresponds to the ith token. WK, WQ, and WV project the incoming hidden states into the so-called keys ki, queries qi, and values vi:

$$k_i = W^K h_i, \qquad q_i = W^Q h_i, \qquad v_i = W^V h_i$$

The keys and queries are used to compute the alignment scores:

$$e_{ij} = \frac{q_i^\top k_j}{\sqrt{d_{\text{model}}}}$$

As in the case of the Bahdanau attention, eij is the alignment score between the ith word and the jth word in the input sequence. dmodel is the common naming convention for the hidden size:

The scaling factor √dmodel in the scaled dot-product counteracts the growth of the dot product's magnitude with the dimensionality dmodel. Without it, large scores would push the Softmax into regions with very small gradients, so the scaling keeps gradients stable during training. It is common to represent those operations as matrix multiplications. With the matrix K =[k1, …, kN] and Q =[q1, …, qN], we have:

or:

with N being the number of tokens in the sequence.
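The effect of the scaling factor can be checked numerically. The following is a minimal sketch, assuming queries and keys whose components are independent with zero mean and unit variance (an idealization, not something the trained model guarantees); the function name `dot_product_std` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def dot_product_std(d_model, n_samples=2_000):
    """Empirical standard deviation of q.k, with and without the 1/sqrt(d_model) scaling."""
    q = rng.standard_normal((n_samples, d_model))
    k = rng.standard_normal((n_samples, d_model))
    raw = np.sum(q * k, axis=1)          # unscaled dot products
    scaled = raw / np.sqrt(d_model)      # scaled dot products
    return raw.std(), scaled.std()

for d_model in (16, 256, 1024):
    raw_std, scaled_std = dot_product_std(d_model)
    print(f"d_model={d_model:5d}  raw std={raw_std:7.2f}  scaled std={scaled_std:5.2f}")
```

Under these assumptions, the spread of the raw scores grows roughly like √dmodel, while the scaled scores stay close to unit spread regardless of the hidden size.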

As with the other attention mechanisms, the alignment scores are normalized through a Softmax transformation so that they sum to 1:

$$a_{ij} = \frac{\exp(e_{ij})}{\sum_{l=1}^{N} \exp(e_{il})}$$

where aij is the attention weight between the tokens i and j, quantifying how strongly the model should attend to token j when processing token i. Because we have Σj aij = 1 for every token i, aij can be interpreted as the probability that token j is relevant to token i.

The attention weights are used to compute a weighted average of the value vectors:

$$c_i = \sum_{j=1}^{N} a_{ij} v_j$$

In the jargon used in the previous chapter, ci are the context vectors coming out of the attention layer, but we can think of them as another intermediary set of hidden states within the network. Using the more common matrix notation, we have:

where V =[v1, …, vN], C =[c1, …, cN] and A = Softmax(E) is the matrix of attention weights.

The whole set of computations happening in the attention layer can be summarized as the following equation:
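The same sequence of steps can also be sketched in NumPy. This is an illustrative sketch rather than a reference implementation: it assumes hidden states stored as the columns of a dmodel × N matrix, random weights, and no bias terms. The function names and the toy sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(H, W_Q, W_K, W_V):
    """Single-head self-attention. H has shape (d_model, N); column i is h_i."""
    d_model = H.shape[0]
    Q, K, V = W_Q @ H, W_K @ H, W_V @ H     # queries, keys, values: (d_model, N)
    E = Q.T @ K / np.sqrt(d_model)          # E[i, j] = q_i . k_j / sqrt(d_model)
    A = softmax(E, axis=1)                  # row i sums to 1 over the tokens j
    C = V @ A.T                             # column i is c_i = sum_j A[i, j] * v_j
    return C, A

rng = np.random.default_rng(0)
d_model, N = 8, 5                           # toy sizes, chosen for illustration
H = rng.standard_normal((d_model, N))
W_Q, W_K, W_V = (rng.standard_normal((d_model, d_model)) for _ in range(3))

C, A = self_attention(H, W_Q, W_K, W_V)
print(C.shape)          # one context vector per token
print(A.sum(axis=1))    # each row of attention weights sums to 1
```

Storing the tokens as rows instead of columns is an equally valid choice; it only transposes the matrix products.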

The Keys, Queries, and Values Naming Convention

The names "queries," "keys," and "values" are inspired by information retrieval systems (such as databases or search engines). Each token generates a query, key, and value to "retrieve" relevant context from other tokens. The model learns to search for relationships between tokens dynamically.

The queries represent what the current token is "asking for." For example, for the word "it" in "The cat sat because it was tired," the query seeks antecedents (e.g., "cat"). The keys represent what other tokens "offer" as context. In our example, the key for "cat" signals it is a candidate antecedent for "it." The values are the actual content to aggregate based on attention weights. The value for "cat" encodes its contextual meaning (e.g., entity type, role in the sentence, ...). For each query (current token), the model "retrieves" values (context) by comparing the query to all keys (other tokens). For example, let us consider the sentence:

"The bank is steep, so it's dangerous to stand near it."

Query ("it"): "What does 'it' refer to?"

Keys ("bank," "steep," "dangerous"): Highlight candidates for reference.

Values: Encode the meaning of each candidate.

The model computes high attention weights between the query ("it") and keys ("bank," "steep"), then aggregates their values to infer "it" refers to the riverbank.

The Multi-head Attention Layer

The Naive Description

We have talked about self-attention so far, but the Transformer architecture actually uses the so-called multi-head attention layer. The multi-head attention layer works as multiple parallel attention mechanisms. Running several attention mechanisms in parallel lets each one learn a different interaction pattern between the tokens of the input sequence. Combining them leads to more heterogeneous learning and lets the model extract richer information from the input sequence. Think about the multi-head attention layer as an ensemble of self-attentions, a bit like a random forest is an ensemble of decision trees.

We call "heads" the parallel attention mechanisms. To ensure that the time complexity of the computations remains independent of the number of attention heads, we need to reduce the size of the internal vectors within the layers. The hidden size dimensionality per head is divided by the number of heads:

$$d_{\text{head}} = \frac{d_{\text{model}}}{n_{\text{head}}}$$

where nhead is the number of heads. This implies that the hidden size has to be chosen so that it is divisible by the number of heads.

Let us call H =[h1, …, hN] the incoming hidden states. Each head h generates resulting hidden states H’h of size dhead = dmodel / nhead:

To combine those heads' hidden states, we concatenate them, and we pass them through a final linear layer WO to mix the signals coming from the different heads:

To generate smaller hidden states, we need to reduce the dimensionality of the internal matrices. In each head, the projection matrices WK, WQ, and WV take vectors of size dmodel and generate vectors of size dmodel / nhead.
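The "separate boxes" view of the heads can be sketched as a plain loop over heads. This is a minimal illustration under assumptions: tokens are stored as columns, the weights are random, and the scores of each head are scaled by √dhead as in the original paper. All names (`attention_head`, `multi_head_attention`, `heads`) are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(H, W_Q, W_K, W_V):
    """One head. Each W_* has shape (d_head, d_model); the output has shape (d_head, N)."""
    d_head = W_Q.shape[0]
    Q, K, V = W_Q @ H, W_K @ H, W_V @ H
    A = softmax(Q.T @ K / np.sqrt(d_head), axis=1)   # (N, N) attention weights
    return V @ A.T

def multi_head_attention(H, heads, W_O):
    """heads is a list of (W_Q, W_K, W_V) tuples, one per head."""
    outputs = [attention_head(H, *weights) for weights in heads]
    concatenated = np.concatenate(outputs, axis=0)   # back to (d_model, N)
    return W_O @ concatenated                        # mix the heads' signals

rng = np.random.default_rng(0)
d_model, n_head, N = 8, 2, 5
d_head = d_model // n_head                           # d_model must be divisible by n_head
H = rng.standard_normal((d_model, N))
heads = [tuple(rng.standard_normal((d_head, d_model)) for _ in range(3))
         for _ in range(n_head)]
W_O = rng.standard_normal((d_model, d_model))

out = multi_head_attention(H, heads, W_O)
print(out.shape)
```

The loop makes the ensemble analogy explicit, but it is not how the layer is usually executed on a GPU.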

The Tensor Representation

Although the information we have described so far about the multi-head attention layer is accurate, there is a critical subtlety to understand when it comes to its implementation. To illustrate the mathematical properties of the attention heads, we pictured separate "boxes" where each attention mechanism evolved in parallel, but in reality, they are slightly more connected. To fully utilize the efficient parallelization capability of the GPU hardware, it is critical to rethink every operation as a tensor operation. We described WK, WQ, and WV of each head as separate matrices, but in practice, there are just three matrices that we conceptually break down by the number of heads needed.

Similarly, there is only one set of keys, queries, and values, and each head processes the entire sequence of tokens but operates on a distinct subset of features. The keys, queries, and values have dimension dmodel × N, where N is the number of tokens in the input sequence. To specify each head's sub-segment explicitly, we reshape the matrices into 3-dimensional tensors with dimension nhead × dhead × N. Let us consider the incoming set of hidden states. It is first projected into keys, queries, and values:

We then reshape the resulting matrices into 3-dimensional tensors:

Reshaping is computationally efficient as it only reorganizes the tensor dimensions. When we compute the alignment scores E' from the new tensors, this leads to an N × N matrix of scores for each head:

Here, we use the shorthand notation K’T to streamline the notation and imply permutation on the last two indices of the tensor, similar to the transpose operation for matrices:

where k’ijk is an element of K'. Notice that the way the operations are performed ensures the computation of N × N attention weights per head while keeping the number of arithmetic operations constant compared to the vanilla attention layer. The attention weights A' are obtained by normalizing on the last dimension:

Again, e’ijk is an element of the tensor E' and a’ijk of the tensor A'. The context vectors are computed as the weighted average of the values with the attention weights:

or in tensor notation:

At this point, we have N context vectors of size dhead per head. We can reshape this tensor such that we have N context vectors of size dmodel = nhead dhead:

We described this earlier as the concatenation of the different heads' context vectors. As a way to combine further the signal coming from the different heads, we pass the resulting context vectors through a final linear layer:

This approach lets the model process information more efficiently than sequential methods, making it better at understanding both nearby and far-apart relationships in the data.
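The tensor formulation can be sketched with reshapes and `np.einsum`. The sketch assumes full dmodel × dmodel projection matrices, tokens stored as columns, random weights, and per-head scores scaled by √dhead; the function name and the index ordering of the tensors are illustrative choices, not the only possible layout.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(H, W_Q, W_K, W_V, W_O, n_head):
    d_model, N = H.shape
    d_head = d_model // n_head
    # Project once with the full matrices, then reshape
    # (d_model, N) -> (n_head, d_head, N): head h owns a block of d_head features.
    Q = (W_Q @ H).reshape(n_head, d_head, N)
    K = (W_K @ H).reshape(n_head, d_head, N)
    V = (W_V @ H).reshape(n_head, d_head, N)
    # E[h, i, j] = q_i . k_j inside head h: one N x N score matrix per head
    E = np.einsum("hdi,hdj->hij", Q, K) / np.sqrt(d_head)
    A = softmax(E, axis=-1)                  # normalize over the last dimension
    # C[h, :, i] = sum_j A[h, i, j] * v_j: context vectors per head
    C = np.einsum("hij,hdj->hdi", A, V)      # (n_head, d_head, N)
    C = C.reshape(d_model, N)                # equivalent to concatenating the heads
    return W_O @ C                           # final linear layer

rng = np.random.default_rng(0)
d_model, n_head, N = 8, 2, 5
H = rng.standard_normal((d_model, N))
W_Q, W_K, W_V, W_O = (rng.standard_normal((d_model, d_model)) for _ in range(4))

out = multi_head_attention(H, W_Q, W_K, W_V, W_O, n_head)
print(out.shape)
```

With this layout, head h corresponds to rows h·dhead to (h+1)·dhead − 1 of each projection matrix, so the result matches a loop over heads that uses those row blocks as separate matrices.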

The Positional Encoding

The Structure

The goal of the positional encoding (a.k.a. position embedding) in the Transformer architecture is to inject sequential order information into the model, enabling it to understand the position of tokens in a sequence. Since Transformers process all tokens in parallel (unlike sequential models like RNNs), they lack inherent awareness of token order. Position embeddings address this by encoding positional data. Without positional information, the Transformer would treat the input as a "bag of words," losing critical order-dependent structure.

In the "Attention is all you need" paper, the positional encoding is defined as another embedding matrix with the same embedding size as the token embedding. The number of rows in the position embedding defines the maximum number of tokens that the model can ingest within a sequence, also known as the context size. The positional information of the token is added to the model by summing the semantic vector representations of the tokens from the token embedding and their positional vector representations from the position embedding. This ensures that the self-attention weights carry the positional information such that the order of the tokens impacts the model inference.

The position embedding (PE) is a static matrix of numbers. If i is the index position of the vectors in the embedding, and j is the index position of the elements in the vectors, the matrix elements are defined by the following formula:

where i ranges in [0, context size - 1] and j in [0, dmodel - 1].
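As an illustration, the position embedding can be built in a few lines of NumPy. The sketch assumes the formula from the original paper, with sine on the even element indices and cosine on the odd ones, and an even dmodel. The function name, the toy vocabulary, and the token ids are hypothetical.

```python
import numpy as np

def sinusoidal_position_embedding(context_size, d_model, base=10_000.0):
    """Static (context_size, d_model) matrix; row i encodes position i."""
    positions = np.arange(context_size)[:, None]          # (context_size, 1)
    even_index = np.arange(0, d_model, 2)[None, :]        # 0, 2, 4, ...
    angles = positions / base ** (even_index / d_model)   # (context_size, d_model // 2)
    pe = np.zeros((context_size, d_model))
    pe[:, 0::2] = np.sin(angles)    # even elements
    pe[:, 1::2] = np.cos(angles)    # odd elements
    return pe

context_size, d_model, vocab_size = 128, 16, 50
pe = sinusoidal_position_embedding(context_size, d_model)

# Summing token and position vectors gives the hidden states fed to the first layer.
rng = np.random.default_rng(0)
token_embedding = rng.standard_normal((vocab_size, d_model))   # stand-in for learned weights
token_ids = np.array([3, 1, 4, 1, 5])
hidden = token_embedding[token_ids] + pe[: len(token_ids)]
print(pe.shape, hidden.shape)
```

Note that the two occurrences of token id 1 receive different hidden states because they sit at different positions.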

Capturing The Relative Token Positions

The motivation behind this choice of sinusoidal functional form for positional encodings is so the model can more easily learn attention weights reflecting each token's relative position. It stems from the trigonometric identities for sine and cosine functions:

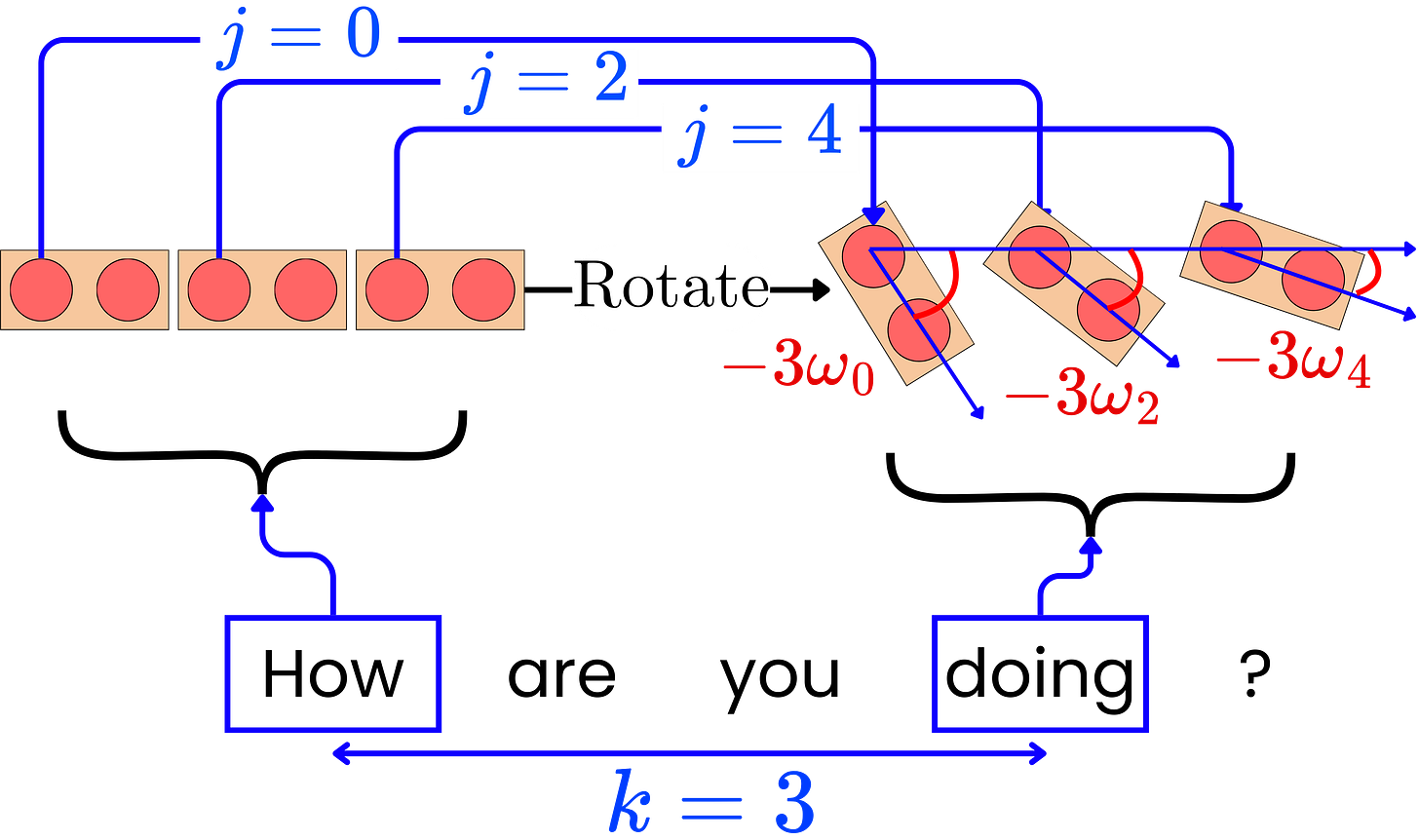

Let us consider a fixed offset k, and we apply the trigonometric identities to the encoding formula:

where 𝛚j = 1 / 10000^(j/dmodel). In matrix notation, we have:

Let us define PE(i, j) as:

We obtain:

where:

In linear algebra, Rj(k) is called the rotation matrix and is used to perform a rotation in Euclidean space. Effectively, it means that PE(i+k, j) is the rotation of PE(i, j) by an angle -𝛚jk.
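The rotation property can be verified numerically for a single (sine, cosine) pair. This is a small sketch under the assumption that the pair is ordered as (sin, cos); the helper names `pe_pair` and `rotation` and the chosen values of i, j, and k are hypothetical.

```python
import numpy as np

def pe_pair(i, omega):
    """The (sin, cos) pair of the positional encoding at position i for frequency omega."""
    return np.array([np.sin(omega * i), np.cos(omega * i)])

def rotation(k, omega):
    """2 x 2 rotation matrix by the angle -omega * k; it depends on the offset k only."""
    c, s = np.cos(omega * k), np.sin(omega * k)
    return np.array([[c, s],
                     [-s, c]])

d_model, j = 64, 10
omega = 1.0 / 10_000 ** (j / d_model)
i, k = 7, 5

shifted = pe_pair(i + k, omega)                 # encoding at position i + k
rotated = rotation(k, omega) @ pe_pair(i, omega)  # rotated encoding at position i
print(np.allclose(shifted, rotated))
```

The same matrix maps position i to position i + k for every i, which is what makes the offset k recoverable through a linear transformation.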

So far, we have shown that, for two tokens with relative distance k, each pair of elements (j, j+1) within their positional encodings are related to each other through a rotation with angle -𝛚jk. Let us call PE(i) = [PE(i, 0), PE(i, 1), …, PE(i, dmodel - 1)]. We can relate PE(i) and PE(i+k) through the pairwise rotation matrix:

where

Let us now consider two hidden states hi and hi+k, corresponding to two tokens with relative distance k, coming into the self-attention layer. Both of them are the result of summing the token embedding vectors xi and xi+k and the positional encoding vectors PE(i) and PE(i+k):

We can compute their alignment score after projecting them into their keys and queries (we ignore heads for simplicity):

If we expand, we obtain:

We effectively decomposed the alignment score into four components:

Token-Token Interaction: Pure content-based alignment between xi and xi+k

Token-Position Interaction: How the token at i interacts with the relative position k of xi+k

Position-Token Interaction: How the position i interacts with the token at xi+k

Position-Position Interaction: How the relative position k (encoded via R(k)) interacts with the absolute position i.

Let's remember that R(k) is the fixed, mathematically defined transformation matrix (from sinusoidal identities) that maps PE(i) to PE(i+k). It exists purely as a property of the positional encoding scheme. With this linear relationship, the model parameters WK and WQ can learn to leverage the structure of positional encodings to compute attention scores that depend on content and relative positions. During training, the model will learn to weigh these interactions by adjusting WK and WQ. It makes the training more efficient as the model does not need to relearn positional relationships from scratch; it builds on the mathematical structure of PE(i). The authors of the paper also hypothesized that the sinusoidal form may help the model generalize to sequence lengths and positional offsets not seen during training.
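The four-term decomposition of the alignment score can be checked on random data. The sketch below assumes a single head, no scaling factor, random stand-ins for the learned matrices and token embeddings, and the sine-on-even, cosine-on-odd layout of the original paper; every name in it is hypothetical.

```python
import numpy as np

def positional_encoding(i, d_model, base=10_000.0):
    """Sinusoidal encoding of position i (sin on even indices, cos on odd indices)."""
    j = np.arange(0, d_model, 2)
    angles = i / base ** (j / d_model)
    pe = np.empty(d_model)
    pe[0::2], pe[1::2] = np.sin(angles), np.cos(angles)
    return pe

rng = np.random.default_rng(0)
d_model, i, k = 16, 3, 4
W_Q = rng.standard_normal((d_model, d_model))
W_K = rng.standard_normal((d_model, d_model))
x_i, x_ik = rng.standard_normal(d_model), rng.standard_normal(d_model)   # token embeddings
p_i, p_ik = positional_encoding(i, d_model), positional_encoding(i + k, d_model)

# Full (unscaled) alignment score between the tokens at positions i and i + k
score = (W_Q @ (x_i + p_i)) @ (W_K @ (x_ik + p_ik))

terms = {
    "token-token":       (W_Q @ x_i) @ (W_K @ x_ik),
    "token-position":    (W_Q @ x_i) @ (W_K @ p_ik),
    "position-token":    (W_Q @ p_i) @ (W_K @ x_ik),
    "position-position": (W_Q @ p_i) @ (W_K @ p_ik),
}
for name, value in terms.items():
    print(f"{name:18s} {value:10.3f}")
print(np.isclose(sum(terms.values()), score))
```

Because the projections are linear, the four terms always add up to the full score; training only changes how much each term contributes.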

Positional Encoding's Multi-Frequency Design

The positional encoding defines a frequency that depends on the position of vector elements:

$$\omega_j = \frac{1}{10000^{j / d_{\text{model}}}}$$

Here, the constant 10,000 is a hyperparameter that controls the range of frequencies used to encode positional information. This means that the period of oscillations is 2𝝿 · 10000^(j/dmodel). The frequencies range from 1 down to 1 / 10000^((dmodel-1)/dmodel). High frequencies lead to rapidly oscillating sine/cosine waves, which are well suited to distinguishing between nearby positions. This is crucial for local sentence syntax (e.g., word order in a phrase). Low frequencies lead to slowly oscillating waves that generalize over longer distances, which is useful for capturing global structure (e.g., paragraph-level coherence).

The high value of 10,000 ensures a smooth transition from high to low frequencies across the embedding dimensions. 2𝝿 · 10000 is the upper bound on the period of the slowest oscillation. Theoretically, this means the encoding provides unique positional signals for up to 2𝝿 · 10000 ≈ 62,832 positions. However, transformers trained on sequences of fixed, shorter lengths (e.g., 512–4096 tokens) do not learn to handle positional relationships beyond their training length. While the encoding theoretically supports very long periods, the model's effective context size is constrained by the training data.
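The range of frequencies and periods can be inspected directly. This sketch assumes dmodel = 512 and one frequency per (sine, cosine) pair, indexed by the even element positions as in the original paper; the variable names are hypothetical.

```python
import numpy as np

d_model, base = 512, 10_000.0
j = np.arange(0, d_model, 2)           # one frequency per (sin, cos) pair
omega = 1.0 / base ** (j / d_model)    # frequencies, from high to low
period = 2 * np.pi / omega             # period of each oscillation, in positions

print(f"highest frequency: {omega[0]:.4f} (period {period[0]:.2f} positions)")
print(f"lowest frequency:  {omega[-1]:.6f} (period {period[-1]:.0f} positions)")
print(f"upper bound 2*pi*base: {2 * np.pi * base:.0f} positions")
```

The periods form a geometric progression: the fastest pair repeats about every 6 positions, while the slowest pair approaches, without quite reaching, the 2𝝿 · 10000 bound.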