What is a Knowledge Base

Getting the Data

Create the Graph representation

Augmenting LLMs with a Knowledge base

Using the Diffbot Graph Transformer

Creating a local Graph Database

Augmenting an LLM with the Graph Database

Below is the code used in the video!

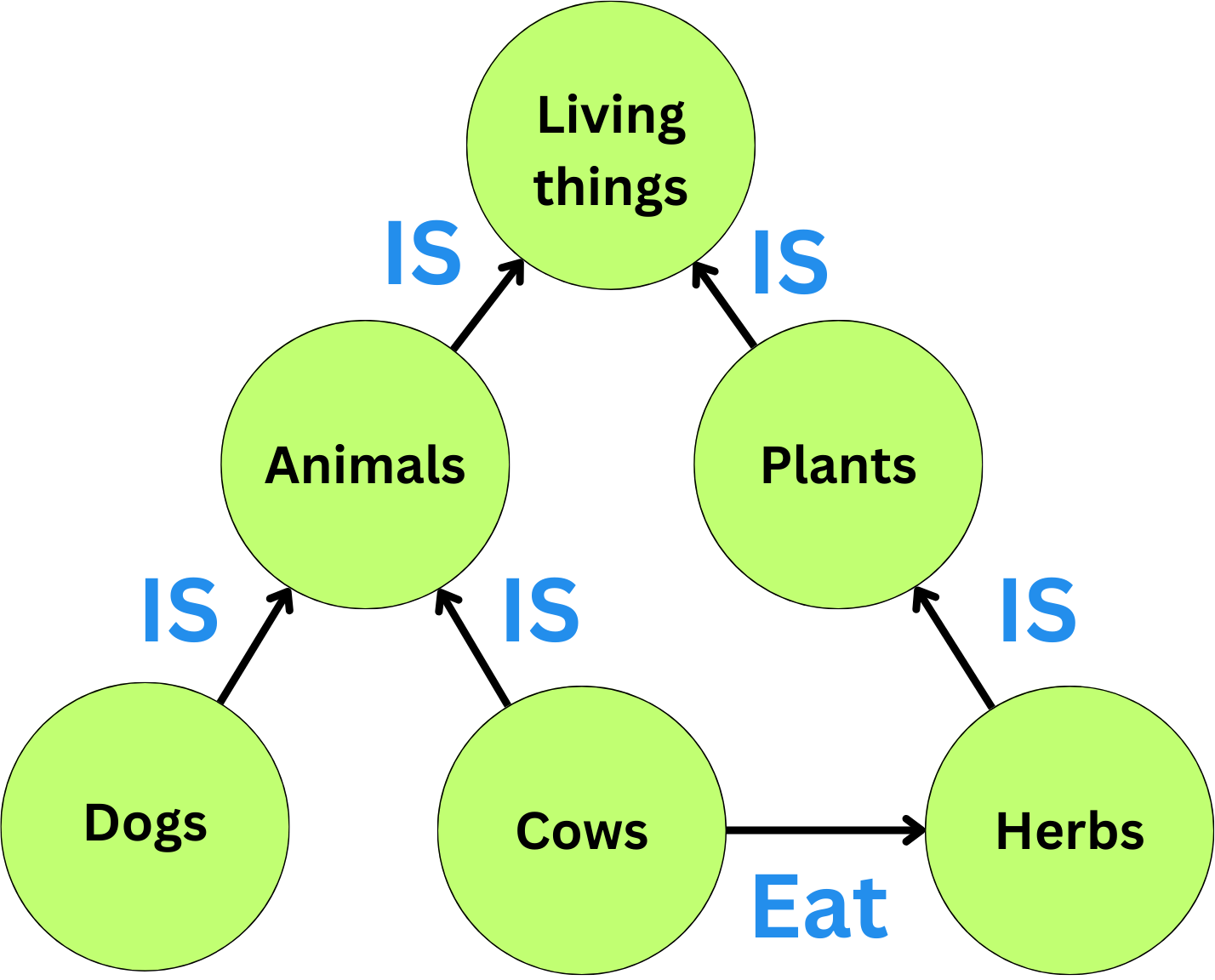

What is a Knowledge Base

Here is a small example of a knowledge base
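A knowledge base can be as simple as a collection of facts stored as (subject, predicate, object) triples. As a toy stand-in, the plain-Python sketch below reuses the facts from the extraction prompt's examples later in this post. `KNOWLEDGE_BASE` and the `facts_about` helper are illustrative names, not part of any library.

```python
from typing import List, Tuple

# A toy knowledge base: each fact is a (subject, predicate, object) triple
Triple = Tuple[str, str, str]

KNOWLEDGE_BASE: List[Triple] = [
    ("Nevada", "is a", "state"),
    ("Nevada", "is in", "US"),
    ("Nevada", "is the number 1 producer of", "gold"),
    ("Descartes", "likes to drive", "antique scooters"),
    ("Descartes", "plays", "mandolin"),
]


def facts_about(entity: str, kb: List[Triple]) -> List[str]:
    """Return every fact whose subject or object is `entity`, as a sentence."""
    return [f"{s} {p} {o}" for s, p, o in kb if entity in (s, o)]


if __name__ == "__main__":
    for fact in facts_about("Nevada", KNOWLEDGE_BASE):
        print(fact)
```

Storing the same triples as edges of a graph makes it cheap to follow facts from one entity to the next. That is the representation built in the rest of this post.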

Getting the Data

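We install the packages:

```
# pypdf is needed by PyPDFLoader and openai by the OpenAI models;
# pygraphviz also requires the Graphviz system library
%pip install langchain langchain-experimental openai pypdf networkx pygraphviz neo4j
```

We get the data: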

```python
from langchain.document_loaders import PyPDFLoader

file_path = "_the_Dementia_Wars__Santa's_Salvation_.pdf"

# Load the PDF and split it into chunks of text (one Document per chunk)
book_loader = PyPDFLoader(file_path=file_path)
book_data = book_loader.load_and_split()
```

Create the Graph representation

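```python
from langchain.indexes import GraphIndexCreator
from langchain.llms import OpenAI

# Requires the OPENAI_API_KEY environment variable
llm = OpenAI(temperature=0)
index_creator = GraphIndexCreator(llm=llm)

# Extract knowledge triples from a single chunk of the book
graph = index_creator.from_text(book_data[20].page_content)
```

We can get the triples: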

```python
# Returns a list of (subject, object, relation) tuples
graph.get_triples()
```

We can draw the graph on the screen:

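```python
from IPython.display import SVG

# Render the graph with Graphviz, then display the SVG inline in the notebook
graph.draw_graphviz(path="book.svg")
SVG("book.svg")
```

Here is the prompt used to create those triples (the default knowledge-triple extraction prompt of `GraphIndexCreator`):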

```
You are a networked intelligence helping a human track knowledge triples about all relevant people, things, concepts, etc. and integrating them with your knowledge stored within your weights as well as that stored in a knowledge graph. Extract all of the knowledge triples from the text. A knowledge triple is a clause that contains a subject, a predicate, and an object. The subject is the entity being described, the predicate is the property of the subject that is being described, and the object is the value of the property.

EXAMPLE
It's a state in the US. It's also the number 1 producer of gold in the US.

Output: (Nevada, is a, state)<|>(Nevada, is in, US)<|>(Nevada, is the number 1 producer of, gold)
END OF EXAMPLE

EXAMPLE
I'm going to the store.

Output: NONE
END OF EXAMPLE

EXAMPLE
Oh huh. I know Descartes likes to drive antique scooters and play the mandolin.

Output: (Descartes, likes to drive, antique scooters)<|>(Descartes, plays, mandolin)
END OF EXAMPLE

EXAMPLE
{text}Output:
```
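The model answers either with triples separated by `<|>` or with `NONE`. To turn that text into tuples, a minimal parser could look like the sketch below. `parse_triples` is a hypothetical helper written for illustration, not LangChain's own parser. It assumes the subject and predicate contain no commas, so any extra commas stay in the object.

```python
from typing import List, Tuple

DELIMITER = "<|>"  # separator used between triples in the prompt's example outputs


def parse_triples(llm_output: str) -> List[Tuple[str, str, str]]:
    """Turn '(s, p, o)<|>(s, p, o)' text into (subject, predicate, object) tuples."""
    text = llm_output.strip()
    if not text or text == "NONE":
        return []
    triples = []
    for chunk in text.split(DELIMITER):
        chunk = chunk.strip()
        if not (chunk.startswith("(") and chunk.endswith(")")):
            continue  # skip anything that is not a parenthesised triple
        # Split on the first two commas only: subject, predicate, rest is the object
        parts = [part.strip() for part in chunk[1:-1].split(",", 2)]
        if len(parts) == 3:
            triples.append((parts[0], parts[1], parts[2]))
    return triples


if __name__ == "__main__":
    output = "(Nevada, is a, state)<|>(Nevada, is in, US)<|>(Nevada, is the number 1 producer of, gold)"
    print(parse_triples(output))
```

Malformed chunks are skipped rather than raising, so one badly formatted triple from the model does not discard the rest of the page.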

We can transform the whole book into a graph

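```python
import networkx as nx
from langchain.graphs.networkx_graph import NetworkxEntityGraph

# Build one graph per chunk of the book (one LLM call per chunk)
graphs = [
    index_creator.from_text(doc.page_content)
    for doc in book_data
]

# Merge the per-chunk graphs into a single networkx graph
graph_nx = graphs[0]._graph
for g in graphs[1:]:
    graph_nx = nx.compose(graph_nx, g._graph)

# Wrap the merged graph so LangChain's graph chains can use it
graph = NetworkxEntityGraph(graph_nx)
```

And we can draw the whole graph to a file: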

```python
# Use Graphviz's fdp (force-directed) layout engine
graph.draw_graphviz(path="graph.pdf", prog="fdp")
```

Augmenting LLMs with a Knowledge base

Let’s ask some questions

```python
from langchain.chains import GraphQAChain
from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0)

chain = GraphQAChain.from_llm(
    llm=llm,
    graph=graph,
    verbose=True,
)

question = """
What is happening in the North Pole?
"""

chain.run(question)
```

> 'The North Pole has been transformed into a battlefield, but it is protected and emerges victorious. It has scars of battle, but it is healing and rebuilding. The North Pole has regained its former glory and celebrates a hard-fought victory. It has work to do and is filled with stories of hope and resilience. The North Pole has a workshop, machinery, and a rejuvenated landscape. It is a beacon of hope and joy for generations to come, with a warm glow of laughter and joy.'
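Roughly, `GraphQAChain` asks the LLM which entities the question mentions, collects the triples connected to those entities in the graph, and passes them to the LLM as context for the answer. The sketch below models only the lookup step in plain Python. It assumes the graph is a dict mapping (subject, object) pairs to a relation, mirroring how a networkx `DiGraph` keeps at most one edge per ordered pair. `entity_knowledge` and `build_context` are hypothetical helpers, not LangChain's implementation.

```python
from typing import Dict, List, Set, Tuple

# Graph edges: (subject, object) -> relation
Edges = Dict[Tuple[str, str], str]


def entity_knowledge(edges: Edges, entity: str, depth: int = 1) -> List[str]:
    """Collect 'subject relation object' facts reachable from `entity`.

    Follows outgoing edges with a stack, up to `depth` hops away.
    """
    # Build an adjacency list of outgoing edges
    adjacency: Dict[str, List[Tuple[str, str]]] = {}
    for (subject, obj), relation in edges.items():
        adjacency.setdefault(subject, []).append((obj, relation))

    facts: List[str] = []
    visited: Set[str] = {entity}
    stack: List[Tuple[str, int]] = [(entity, 0)]
    while stack:
        node, hops = stack.pop()
        if hops >= depth:
            continue
        for obj, relation in adjacency.get(node, []):
            facts.append(f"{node} {relation} {obj}")
            if obj not in visited:
                visited.add(obj)
                stack.append((obj, hops + 1))
    return facts


def build_context(edges: Edges, entities: List[str]) -> str:
    """Join the facts for every extracted entity into one context string."""
    return "\n".join(
        fact for entity in entities for fact in entity_knowledge(edges, entity)
    )
```

Because the lookup matches node names exactly, the entities extracted from the question have to be spelled the way they appear in the graph; otherwise the context comes back empty.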

Using the Diffbot Graph Transformer

Let’s use Diffbot

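```python
import os

from langchain_experimental.graph_transformers.diffbot import (
    DiffbotGraphTransformer
)

# Diffbot API key, here assumed to be stored in the DIFFBOT_API_KEY environment variable
diffbot_api_key = os.environ["DIFFBOT_API_KEY"]

diffbot_nlp = DiffbotGraphTransformer(
    diffbot_api_key=diffbot_api_key
)

# Convert each Document into a GraphDocument (nodes and relationships)
book_graph = diffbot_nlp.convert_to_graph_documents(
    book_data
)
```

Creating a local Graph Database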
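In a graph database such as Neo4j, each triple becomes two nodes joined by a typed relationship. As a rough illustration of what writing one triple involves, the sketch below builds a parameterized Cypher `MERGE` statement. The `Entity` label and the `triple_to_cypher` helper are assumptions for illustration; this is not the query LangChain's `add_graph_documents` runs. Standard Cypher does not accept a relationship type as a plain `$parameter`, so the predicate is sanitized into an identifier and escaped with backticks instead.

```python
import re
from typing import Dict, Tuple


def triple_to_cypher(subject: str, predicate: str, obj: str) -> Tuple[str, Dict[str, str]]:
    """Build a MERGE query and its parameters for one (subject, predicate, object) triple."""
    # Turn the predicate into a safe relationship type, e.g. "is in" -> "IS_IN"
    rel_type = re.sub(r"[^A-Za-z0-9_]", "_", predicate.strip()).upper() or "RELATED_TO"
    query = (
        "MERGE (s:Entity {id: $subject}) "
        "MERGE (o:Entity {id: $object}) "
        f"MERGE (s)-[:`{rel_type}`]->(o)"
    )
    # Node names go in as parameters, so no manual quoting is needed for them
    return query, {"subject": subject, "object": obj}


if __name__ == "__main__":
    query, params = triple_to_cypher("Nevada", "is in", "US")
    print(query)
    print(params)
```

Using `MERGE` rather than `CREATE` means that writing the same triple twice does not duplicate the nodes or the relationship. Once connected, `Neo4jGraph.query(query, params)` can send a statement like this to the database.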

Let’s create a local Neo4j database with the APOC plugin, which LangChain's `Neo4jGraph` relies on:

```bash
docker run \
    --name neo4j \
    -p 7474:7474 -p 7687:7687 \
    -d \
    -e NEO4J_AUTH=neo4j/pleaseletmein \
    -e NEO4J_PLUGINS=\[\"apoc\"\] \
    neo4j:latest
```

Let’s connect to it:

```python
from langchain.graphs import Neo4jGraph

# Bolt port and credentials match the docker command above
url = "bolt://localhost:7687"
username = "neo4j"
password = "pleaseletmein"

graph_db = Neo4jGraph(
    url=url,
    username=username,
    password=password
)
```

We add the data:

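```python
# Store the Diffbot graph documents (not the raw text Documents) in Neo4j
graph_db.add_graph_documents(book_graph)

# Re-read the node and relationship schema so the chain can use it
graph_db.refresh_schema()
```

Augmenting an LLM with the Graph Database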

Let’s connect our LLM to the graph database

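```python
from langchain.chains import GraphCypherQAChain
from langchain.chat_models import ChatOpenAI

chain = GraphCypherQAChain.from_llm(
    # GPT-4 writes the Cypher query from the graph schema and the question
    cypher_llm=ChatOpenAI(temperature=0, model_name="gpt-4"),
    # GPT-3.5 turns the query results into a natural-language answer
    qa_llm=ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo"),
    graph=graph_db,
    verbose=True,
)
```

Let’s ask a question: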

```python
question = """
What is happening in the North Pole?
"""

chain.run(question)
```

> "I don't know"
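`GraphCypherQAChain` answers in two stages. First, `cypher_llm` writes a Cypher query from the graph schema and the question, and that query runs against Neo4j. Then `qa_llm` phrases an answer from the returned rows. LangChain's default answer prompt tells the model to say it doesn't know when that information is empty, so an "I don't know" often means the generated query matched nothing. The sketch below models this control flow with plain callables standing in for the two models and the database. `cypher_qa` and the stubs in the test are hypothetical.

```python
from typing import Any, Callable, Dict, List

Rows = List[Dict[str, Any]]


def cypher_qa(
    question: str,
    generate_cypher: Callable[[str], str],
    run_query: Callable[[str], Rows],
    answer: Callable[[str, Rows], str],
) -> Dict[str, Any]:
    """Two-stage graph QA: question -> Cypher -> rows -> answer."""
    cypher = generate_cypher(question)  # stage 1: the Cypher-writing model
    rows = run_query(cypher)            # run the query against the database
    if not rows:
        # Mirrors the instruction to say "I don't know" when no information comes back
        text = "I don't know"
    else:
        text = answer(question, rows)   # stage 2: the answer-writing model
    # Keep the intermediate steps so an empty result can be debugged
    return {"result": text, "query": cypher, "context": rows}
```

With the real chain, passing `return_intermediate_steps=True` to `GraphCypherQAChain.from_llm` exposes the generated Cypher and the rows it returned under `intermediate_steps` in the chain's output, which is a quick way to check whether the query matched anything.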