Deep Dive: How to build the Facebook Ads Ranking System

Machine Learning System Design

Ads Ranking is certainly one of the most successful Machine Learning applications financially speaking. We present here the design to personalize ads to users on the Facebook platform. We will look at:

The design overview

The models

The training data

The metrics

The auction process

The serving pipeline

Introduction

Today Facebook has about ~3 billion users and ~2 billion daily active users. It is the second largest ads provider in the world after Google. 95% of Meta’s revenue comes from ads and Facebook generates ~$70B every year while Instagram ~$50B in ads alone. There are ~15 million active advertising accounts, with approximately more than 15 million active ad campaigns running at any point in time.

Facebook feeds are lists of posts with ads between. Why is that specific ad shown to me?

Design overview

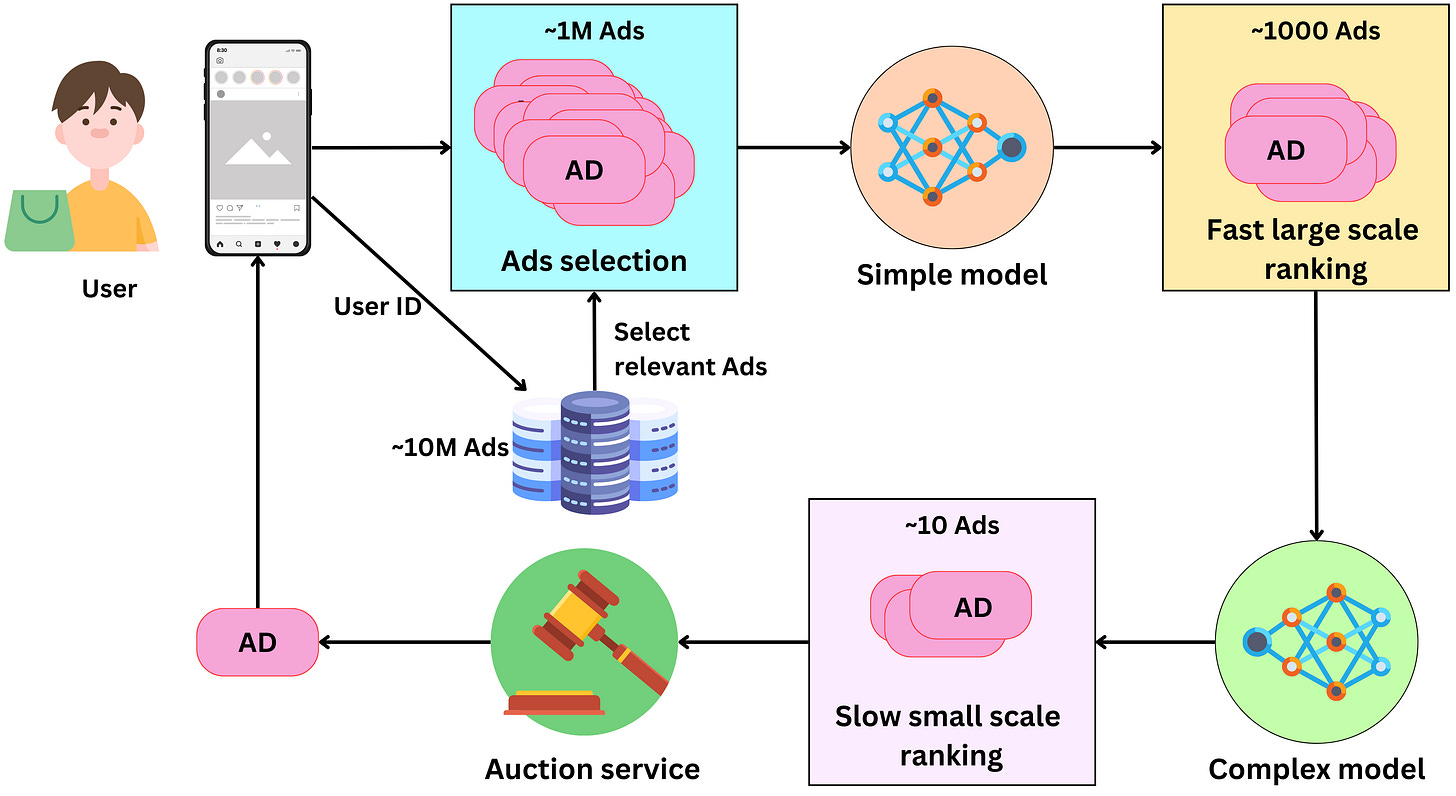

Conceptually, choosing the right ad for the right user is pretty simple. We pull ads from a database, rank them according to how likely the user is to click on them, run an auction process to pick the best one, and we finally present it to the user. The process to present one ad on the user’s screen has the following components to it:

Selecting ads: The ads are indexed in a database and a subset of them is retrieved for a specific user. At all times, there are between 10M and 100M ads and we need to filter away ads that are not relevant to the user. Meta has access to age, gender, location, or user’s interest data that can be used as keywords filters for fast ads retrieval. Additional context information can be used to further filter the selected ads. For example, if it is winter and the user lives in Canada, we could exclude ads for swim suits! We can expect this process to pull between 100K and 1M ads

Fast large scale ranking: With that many ads, we need to rank them in stages. Typically there are two stages: a first fast (per ad) large scale ranking stage and a slow (per ad) small scale ranking one. The first stage needs to be able to handle a large amount of ads (from the ads selection step) while being relatively fast for a low latency process. The model has usually a low capacity and uses a subset of the features making it fast. The ads are scored with a probability of being clicked by the user and only the top ads are retained for the next step. Typically this process generates between 100 and 1000 ads.

Slow small scale ranking: This step needs to handle a smaller amount of ads, so we can use a more complex model that is slower per ad. This model, being more complex, has better predictive performance than the one in the previous step, leading to a more optimal ranking of the resulting ads. We keep only the best ranking ads for the next step. At this point, we may have of the order of ~10 remaining ads.

The auction: An advertiser only pays Facebook when the user clicks on the ad. During the campaign creation, the advertiser sets a daily budget for a certain period. From this, we can estimate the cost he is willing to pay per click (bid) and the remaining ads are ranked according to their bid and probability of click. The winning ad is presented to the user.

The process does not have to be real-time and can be pre-processed ahead. For example, ads can be pre-computed for first-time users. Ads for users with low activity can be pre-computed in batches. For high-activity users, it is imperative to have a process close to real-time. Furthermore, multiple ads might be presented during a user session, so it is important to prepare more than one ad for the user scrolling on the website.

The models

The general architecture

The models used in multi-stage ranking are recommender engines. They take as inputs a set of features about the user, the ads and the context, and they output the probability that the user will click on the ads. The base architecture used at Facebook is the SparseNN architecture. The main components are as follows:

The dense features: those are typically the continuous variables and low dimensionality categorical variables: age, gender, number of likes in the past week, …

The sparse features: those are the high dimensionality categorical variables and they are represented by an embedding: user id, page id, ad id, …

The dense architecture: dense features are fed to the dense architecture. Latent representations of dense features are derived from non-linear functions in dense architecture. The latent representations have the same size as sparse features’ embeddings.

The dot product layer: reminiscent of the latent matrix factorization model, multiple dot products of pairs of dense and sparse embeddings are computed.

The overarch: the resulting outputs are fed to a set of non-linear functions before producing a probability prediction.

More specific architectures

For recommender systems, there are several architecture paradigms used. For example the Multi-task learning architecture (“An Overview of Multi-Task Learning in Deep Neural Networks”), Mixture of experts (“Recommending What Video to Watch Next: A Multitask Ranking System“) or Multi-tower models (“Cross-Batch Negative Sampling for Training Two-Tower Recommenders”).

As an example, the Two-tower paradigm is an extension of the more classical latent matrix factorization model. The latent matrix factorization model is a linear model where the users are represented by a matrix U and the ads by a matrix P such that the dot product R is as close as possible to the target to predict:

Two-tower architecture allows dense and sparse features, and users (and context) and ads have their own non-linear towers. The tower output is used in a dot product to generate predictions:

The training data

The features

When it comes to features, we need to think about the different actors of process: users, the ads and the context. Meta has ~10,000 features capturing those actors' behavior. Let’s look at a few examples: