DragGAN: A fiery new method of image manipulation

Today I am excited to present to you a special guest post by Shivangee Trivedi about DragGAN, a method that builds on Generative Adversarial Networks (GANs) to transform and modify images with ease. Shivangee is currently a data scientist at Octane Lending, and you can learn more about her on her LinkedIn. We cover:

Introduction to GANs

StyleGAN

DragGAN

What does DragGAN do that other GANs haven't explored, yet?

Applications

Introduction

Generative AI has taken the world by storm. ChatGPT, DALL-E, AlphaCode, and Bard are just a few tools that exemplify the myriad advancements made in this field. A natural question is: what exactly is Generative AI? The term refers to a set of deep learning-based algorithms that generate new content, often building upon existing data. This differs from traditional supervised machine learning techniques, which focus on making predictions from existing data. Generative AI models instead use semi-supervised and unsupervised techniques to learn the underlying patterns in the data and generate original artifacts. A classic example is comparing a spam email classifier with ChatGPT: the spam classifier is trained on emails labeled as spam or not spam, and its objective is to predict a binary output indicating whether a new email arriving in someone's inbox is spam. ChatGPT, on the other hand, is trained on an enormous amount of text and has learned its underlying distribution well enough to generate text very similar to human-written text.

In the field of image synthesis and manipulation, Generative Adversarial Networks (GANs) can be called the OG invention. Since their introduction in 2014, countless GAN variants have been developed for a wide range of objectives. DragGAN is a recent method that enables user-friendly image editing through a simple point-and-drag interface. This article provides an overview of the inner workings of a GAN, then explores the basics of DragGAN, and concludes with some examples and use cases of this novel technique. A gentle disclaimer to readers looking for implementation details: this is not an attempt to cover DragGAN in depth. The main aim of this article is to educate the audience about GANs and draw attention to the potential use cases of DragGAN!

Introduction to GANs

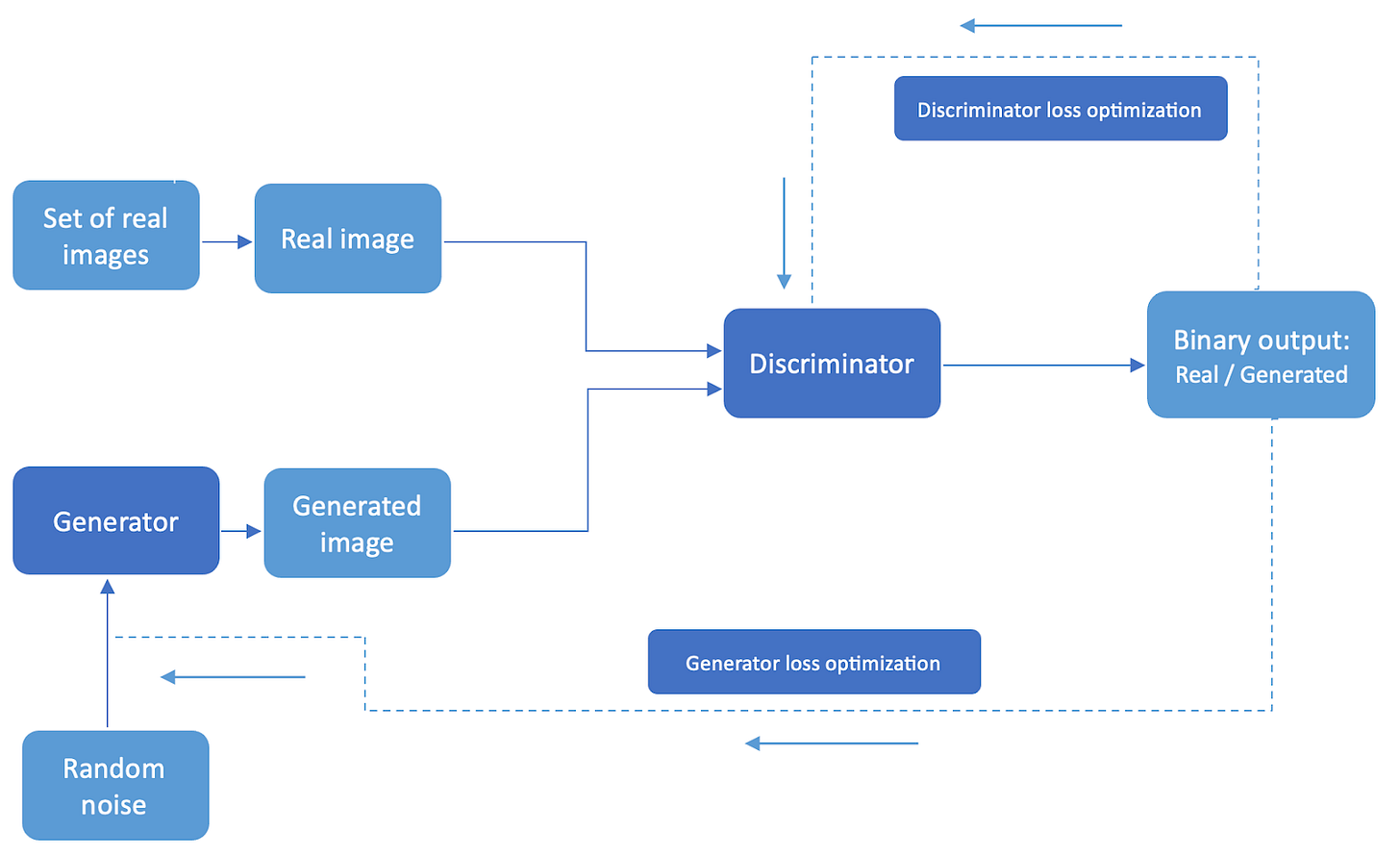

Although there are GAN applications outside of image generation, such as GAN-BERT and WaveGAN, our GAN talk in this article will largely be tied to images. For images, the networks inside a GAN are typically Convolutional Neural Networks (CNNs), which are designed specifically to learn the textures, shapes, and patterns present in images. A GAN pairs two neural networks against each other to form a system that generates original, photo-realistic images. In their most basic arrangement, GANs contain one Generator and one Discriminator. The Generator is a neural network that takes random noise as input; its aim is to generate an image that looks like a real image. The Discriminator functions as a binary classification model: it receives one image at a time, either a real image from the training data or a fake image produced by the Generator, and outputs the probability that the image is real.
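To make the two roles concrete, here is a deliberately tiny sketch that assumes one-dimensional "images" (single numbers) instead of pixel grids. The functions `generator` and `discriminator` and their fixed parameter values are hypothetical; a real GAN uses deep, usually convolutional, networks whose weights are learned.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator(z: float, a: float = 2.0, b: float = 3.0) -> float:
    """Hypothetical toy Generator: maps random noise z to a 1-D 'image'."""
    return a * z + b


def discriminator(x: float, w: float = 1.5, c: float = -4.0) -> float:
    """Hypothetical toy Discriminator: returns the probability that x is real."""
    return sigmoid(w * x + c)


rng = random.Random(0)
z = rng.gauss(0.0, 1.0)     # the Generator's only input is random noise
fake = generator(z)         # ... which it turns into a sample
real = 3.2                  # stand-in for one real training example
print(f"D(real) = {discriminator(real):.3f}, D(fake) = {discriminator(fake):.3f}")
```

Note that the Discriminator never sees a pair of images: it scores each sample on its own.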

If you're wondering how these neural networks come together to work their magic, look no further. The magic happens because of good old backpropagation and cleverly defined loss functions for the Generator and the Discriminator. The Discriminator optimizes a simple Binary Cross Entropy loss: it is low when the Discriminator correctly labels real images as real and generated images as fake, and high when it is fooled. The Generator is trained on the error backpropagated through the Discriminator: its loss is high when the Discriminator correctly spots its images as fake, so reducing it means producing images the Discriminator mistakes for real ones. Because the two losses pull in opposite directions, they cannot both be driven to zero. Instead, training ideally reaches a balance in which the Discriminator can no longer tell real from generated images (outputting roughly 0.5 for both), and that is exactly when the Generator produces extremely lifelike and compelling fakes!
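The two losses can be written in a few lines. This sketch works directly on the Discriminator's output probabilities, with `d_real` = D(x) for a real image and `d_fake` = D(G(z)) for a generated one; the function names are our own. For the Generator it uses the "non-saturating" form, minimizing -log D(G(z)), which the original GAN paper recommends in practice over the pure minimax form.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy with label 1 for the real image and 0 for the fake.

    Low when the Discriminator is confident and correct on both images.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating Generator loss: low when the fake is scored as real."""
    return -math.log(d_fake)


# A confident, correct Discriminator: small D loss, large G loss.
print(discriminator_loss(0.95, 0.05), generator_loss(0.05))
# Ideal equilibrium: D outputs 0.5 for everything (losses are 2*ln 2 and ln 2).
print(discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

The second line illustrates why "both losses low" is not the goal: at equilibrium neither network wins, and both losses settle at fixed non-zero values.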

This way, both the Discriminator and the Generator are set up for success in their own regard: while the Discriminator tries to best distinguish real images from generated ones, the Generator tries to produce images the Discriminator cannot tell apart from the real ones. Even though the two neural networks compete against each other, together they work towards the same goal of generating images that resemble the set of images we provided in the first place. That's the power unleashed by GANs: leveraging unsupervised learning to generate fake images that look real to the naked human eye. While GANs have downsides such as vanishing gradients and unstable training, their discovery has led to rapid developments in the field of image (and other content) generation.
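One source of the vanishing-gradient problem mentioned above is visible with a bit of calculus. Write the Discriminator's output on a fake image as D(G(z)) = sigmoid(s), where s is its logit. The original minimax Generator objective, minimizing log(1 - D(G(z))), has gradient -sigmoid(s) with respect to s, which shrinks to zero exactly when the Discriminator confidently rejects the fake (as it often does early in training). The non-saturating loss -log D(G(z)) keeps a gradient close to -1 in that regime. The sketch below simply evaluates both derivatives; the function names are our own.

```python
import math


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def grad_saturating(logit: float) -> float:
    """d/ds of log(1 - sigmoid(s)): the original minimax Generator objective."""
    return -sigmoid(logit)


def grad_non_saturating(logit: float) -> float:
    """d/ds of -log(sigmoid(s)): the non-saturating Generator loss."""
    return -(1.0 - sigmoid(logit))


# The Discriminator is confident the fake is fake (logit -6, D(G(z)) ~ 0.0025).
confident_reject = -6.0
print(grad_saturating(confident_reject))      # close to 0: almost no learning signal
print(grad_non_saturating(confident_reject))  # close to -1: a strong learning signal
```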

StyleGAN

An important concept that we ought to talk about in a little detail is StyleGAN, as it sets the stage for DragGAN (DragGAN operates on a pretrained StyleGAN2 generator). Style transfer is formally described as rendering a content image in the style of another image (see "Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization"). StyleGAN achieved a major breakthrough by introducing a redesigned Generator that gives much finer control over the styles of the generated image, where those styles can come from one or more latent codes (and therefore from the source images those codes generate). For example, using StyleGAN, it is possible to produce a face that takes its pose and face shape from one generated person and its coloring from another!
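The paper cited above introduced Adaptive Instance Normalization (AdaIN), which StyleGAN reuses to inject styles. AdaIN normalizes each channel of the content features to zero mean and unit standard deviation, then rescales and shifts it to match the style: AdaIN(x, y) = sigma(y) * (x - mu(x)) / sigma(x) + mu(y). Below is a minimal single-channel sketch that assumes features are plain lists of floats; in StyleGAN the scale and shift come from learned styles rather than from statistics of a style image.

```python
import statistics


def adain(content: list[float], style: list[float], eps: float = 1e-5) -> list[float]:
    """Adaptive Instance Normalization for a single feature channel."""
    mu_c = statistics.fmean(content)
    sigma_c = statistics.pstdev(content)
    mu_s = statistics.fmean(style)
    sigma_s = statistics.pstdev(style)
    # Normalize the content, then take on the style's mean and spread.
    return [sigma_s * (x - mu_c) / (sigma_c + eps) + mu_s for x in content]


content = [1.0, 2.0, 3.0, 4.0]
style = [10.0, 20.0, 30.0, 40.0]
out = adain(content, style)
print([round(v, 2) for v in out])  # roughly the style's statistics, content's ordering
```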

The StyleGAN Generator is built from two main components:

Mapping Network - An 8-layer fully connected network that maps the input latent code z to an intermediate latent code w; learned affine transformations then turn w into the styles fed to each layer of the synthesis network

Synthesis Network - A network that generates the image from a learned constant input, injecting the styles at every resolution through adaptive instance normalization (AdaIN)
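The data flow of the mapping network can be sketched in plain Python. This is a toy under loudly stated assumptions: tiny 8-dimensional latents instead of StyleGAN's 512, random untrained weights, and hypothetical helper names (`dense`, `random_layer`, `mapping_network`, `style_from_w`). It only shows the shape of the computation: z goes through 8 fully connected layers to become w, and a per-layer affine transform turns w into a (scale, bias) pair per channel, which AdaIN would then apply.

```python
import math
import random


def leaky_relu(v: float, slope: float = 0.2) -> float:
    return v if v > 0 else slope * v


def dense(x, weights, biases):
    """Fully connected layer: weights holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def random_layer(n_in, n_out, rng):
    """Hypothetical untrained layer with small random weights and zero biases."""
    weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def mapping_network(z, layers):
    """Map latent z to intermediate latent w with an MLP and leaky ReLU."""
    h = z
    for weights, biases in layers:
        h = [leaky_relu(v) for v in dense(h, weights, biases)]
    return h


def style_from_w(w, affine, n_channels):
    """Affine transform 'A' for one synthesis layer: w -> per-channel (scale, bias)."""
    weights, biases = affine
    s = dense(w, weights, biases)
    return s[:n_channels], s[n_channels:]


rng = random.Random(0)
dim, n_channels = 8, 4                                     # toy sizes (StyleGAN: 512-D)
layers = [random_layer(dim, dim, rng) for _ in range(8)]   # 8 fully connected layers
affine = random_layer(dim, 2 * n_channels, rng)            # one affine per synthesis layer
z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
w = mapping_network(z, layers)
scale, bias = style_from_w(w, affine, n_channels)
```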

In the style-mixing example from the StyleGAN paper, two source images A and B are generated from two different latent codes, and each mixed image takes some of its styles from B and the rest from A. In the first row, the coarse styles are borrowed from image B: high-level features such as pose, glasses, and face shape resemble image B, while the colors (eyes, hair, lighting) and finer facial features resemble image A. In the second row, the middle styles are borrowed from image B: smaller-scale facial features and the hairstyle come from image B, while the pose and general face shape are preserved from image A. In the third row, the fine styles are borrowed from image B: this mainly transfers B's color scheme and fine microstructure, while everything else is preserved from image A.
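Mechanically, style mixing just feeds different latent codes to different layers of the synthesis network. The sketch below assumes the 1024x1024 StyleGAN configuration (18 style inputs, two per resolution from 4x4 to 1024x1024) and the coarse/middle/fine split described above; latent codes are replaced by placeholder strings, and `mix_styles` is a hypothetical helper.

```python
def mix_styles(w_a, w_b, copy_from_b, num_layers=18):
    """Return one latent per synthesis layer, taking the layers in copy_from_b from B."""
    return [w_b if i in copy_from_b else w_a for i in range(num_layers)]


COARSE = range(0, 4)   # 4x4 and 8x8: pose, face shape, eyeglasses
MIDDLE = range(4, 8)   # 16x16 and 32x32: smaller facial features, hairstyle
FINE = range(8, 18)    # 64x64 ... 1024x1024: color scheme, microstructure

for name, layers in [("coarse", COARSE), ("middle", MIDDLE), ("fine", FINE)]:
    print(name, "".join(mix_styles("A", "B", layers)))
```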

StyleGAN thus makes it possible to combine styles from different source images at specific scales, allowing for scale-specific modifications of the resulting image. As you can imagine, the applications of this GAN variant are endless, and it is widely regarded as one of the most important GAN breakthroughs of recent years.

DragGAN

Although StyleGAN introduced style mixing and maintained high image quality, it doesn't give users precise control over the modifications to be made, such as exactly where a part of an object should move. DragGAN offers exactly that: interactive, precise control through a drag-and-drop interface. DragGAN builds directly on StyleGAN: it edits images produced by a pretrained StyleGAN2 generator by optimizing the generator's latent code.

The user starts by defining one or more pairs of handle and target points: handle points mark the parts of the image to be manipulated, and target points mark where those parts should end up. The objective is for the user to drag portions of the image from the handle points to the target points. Optionally, the user can also select a region of the image (a mask) that is allowed to move during the manipulation, keeping the rest of the image fixed. The optimization alternates between two steps:

Motion Supervision - The first step optimizes the latent code with a loss on the Generator's feature maps that pushes the content around each handle point a small step toward its target point

Point Tracking - The second step updates the positions of the handle points so that they keep following the same object parts after motion supervision has moved them, keeping the edit focused on the manipulation the user initially defined. This step is a nearest neighbor search in the Generator's feature space: each handle point moves to the nearby location whose feature best matches the feature of the original handle point
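For readers who want a little more precision, the two steps can be summarized following the notation of the DragGAN paper (consult the paper for the exact formulation). Here w is the latent code being optimized, F the Generator's feature map for the current w, F_0 the feature map of the initial image, p_i and t_i the i-th handle and target points, p_i^{(0)} the original position of handle i, Omega_1(p_i, r_1) and Omega_2(p_i, r_2) small neighbourhoods around p_i, M the optional mask of the movable region, lambda a weighting hyperparameter, sg(.) a stop-gradient, and F' the feature map after the motion supervision update. Because F(q_i) is detached, the loss pulls the content at q_i + d_i toward the current content at q_i, moving it one small step along d_i.

```latex
\mathcal{L}(w) = \sum_{i=0}^{n} \sum_{q_i \in \Omega_1(p_i, r_1)}
  \big\lVert \operatorname{sg}\big(\mathbf{F}(q_i)\big) - \mathbf{F}(q_i + d_i) \big\rVert_1
  + \lambda \, \big\lVert (\mathbf{F} - \mathbf{F}_0) \cdot (1 - \mathbf{M}) \big\rVert_1,
\qquad d_i = \frac{t_i - p_i}{\lVert t_i - p_i \rVert_2}

p_i \leftarrow \operatorname*{arg\,min}_{q_i \in \Omega_2(p_i, r_2)}
  \big\lVert \mathbf{F}'(q_i) - f_i \big\rVert_1,
\qquad f_i = \mathbf{F}_0\big(p_i^{(0)}\big)
```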

The image above summarizes the approach of DragGAN. The user defines pairs of points on the original image: the red points are the handle points and the blue points are the target points. Optionally, the user can also define a specific area of the image that is allowed to move during editing. The first step, motion supervision, is performed on the Generator's intermediate feature maps and updates the latent code. The second step, point tracking, is then performed on the feature maps of the newly generated image to update the positions of the handle points. This pattern of motion supervision followed by point tracking is repeated until the handle points reach their targets, producing the final image.
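The parts of this loop that don't need a real generator can be sketched in plain Python: the unit direction d_i that motion supervision pushes content along, point tracking as a nearest neighbor search over feature vectors in a small square patch, and a stop check. Feature maps are assumed to be nested lists indexed as `feature_map[y][x]`, points are `(x, y)` tuples, and the function names, patch shape, and tolerance `tol` are illustrative assumptions rather than the official implementation.

```python
import math


def unit_direction(handle, target):
    """d_i: unit vector from a handle point toward its target point."""
    dx, dy = target[0] - handle[0], target[1] - handle[1]
    norm = math.hypot(dx, dy)
    return (0.0, 0.0) if norm == 0 else (dx / norm, dy / norm)


def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_point(feature_map, f0, handle, r2):
    """Nearest neighbor search for feature f0 in a (2*r2+1)^2 patch around handle."""
    height, width = len(feature_map), len(feature_map[0])
    x0, y0 = handle
    best, best_dist = handle, math.inf
    for y in range(max(0, y0 - r2), min(height, y0 + r2 + 1)):
        for x in range(max(0, x0 - r2), min(width, x0 + r2 + 1)):
            dist = l1(feature_map[y][x], f0)
            if dist < best_dist:
                best, best_dist = (x, y), dist
    return best


def reached(handle, target, tol=1.0):
    """Stop check: the handle is within tol pixels of its target."""
    return math.dist(handle, target) <= tol


# Toy feature map: the tracked object part has feature [1, 1] and now sits at (5, 7).
feature_map = [[[0.0, 0.0] for _ in range(10)] for _ in range(10)]
feature_map[7][5] = [1.0, 1.0]
new_handle = track_point(feature_map, f0=[1.0, 1.0], handle=(4, 6), r2=2)
print(new_handle, reached(new_handle, target=(5, 8)))
```

In the real method, each iteration would regenerate the image from the updated latent code before tracking, and the loop continues until every handle passes the stop check.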

The above video is the official demo of DragGAN. It shows how a variety of images can be easily modified by placing the handle and target points in desired positions. Notice how diverse the applications of DragGAN are: the authors demonstrate image manipulation in fashion, on animals, and on automobiles.

What does DragGAN do that other GANs haven't explored, yet?

DragGAN introduces novel ways of generating GAN-based manipulated content:

Interactive, point-based image manipulation

DragGAN demonstrates that it is easy to deform an image and produce pose, shape, and background variations in an interactive and user-friendly manner.

Flexible

It is impressive that DragGAN handles many different types of changes to an image. The handle and target points can be placed anywhere to achieve the desired changes in pose, expression, shape, or layout.

Precise

Handle points are moved precisely to their targets while the quality of the generated image is largely preserved, which makes real-world use plausible.

Object / Category Agnostic

DragGAN achieves image manipulation across many categories of images. Its application is not restricted to any particular category (it only needs a GAN trained on that category), making it a generic approach.

Better than previous approaches

Other existing approaches also manipulate images through point-based control, but the creators of DragGAN show that their approach is general, fast, interactive, and state-of-the-art in their comparisons.

Applications

As you may have guessed, there is no dearth of applications that could benefit from using DragGAN.

Deepfakes

Even though deepfakes are best known as a means of spreading disinformation, they have ethical use cases too. One such use case is in the entertainment and movie industry. An actor showing different expressions that are GAN-generated but based on a single real image of that actor? Yes, please!

Photography enthusiasts

We all know that good photography comes with its own share of editing. Imagine occluding an unwanted object in the background of your favorite picture using a simple point-and-drag method - what a productivity boost!

E-commerce

E-commerce websites can benefit greatly from being able to show their products in different lighting, backgrounds, or poses, all derived by manipulating a single image.

Conclusion and Other Work

In this blog post, we gave a brief overview of GANs and took a trip down memory lane looking at StyleGAN, only to go back to the future and introduce DragGAN. DragGAN was released in May 2023 (and presented at SIGGRAPH 2023) and has since been an intriguing topic. There is a cool tutorial that gives users context on DragGAN and shows how to install it. The official paper can be found here and the official code was released in June 2023 here. The authors also have a website where they provide more examples of their approach in practice.

Adobe has recently released Firefly, a family of Generative AI models that serve a variety of functions. With Adobe Firefly, captivating images can be generated from a user's text descriptions, and images can be edited, for example by removing or adding objects, using the Generative Fill feature. Clearly, we are moving towards a new era of content generation where we rely on AI. Tools like DragGAN and Adobe Firefly are just the beginning, and I will certainly be on the lookout for more research to follow in the near future!

About the author

Shivangee Trivedi is currently a data scientist at Octane Lending where she builds predictive machine learning models in the consumer lending space. She has previously worked at McAfee where she actively researched adversarial attacks on machine learning applications in the real world and on detecting Deepfakes. Get to know more about her work and interests on her LinkedIn.
