Float32 vs Float16 vs BFloat16?

Float32, Float16 or BFloat16! Why does that matter for Deep Learning? Those are just different levels of precision. Float32 is a way to represent a floating point number with 32 bits (1 or 0), and Float16 / BFloat16 is a way to represent (an approximation of) the same number with just 16 bits. This is quite important for Deep Learning because, in the backpropagation algorithm, the model parameters are updated by a gradient descent optimizer, and that update is usually computed in Float32 precision to limit rounding errors. To reduce the pressure on memory, however, the forward and backward passes often work with Float16 copies of the model parameters and with Float16 gradients, so we need to convert back and forth between Float16 and Float32.
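Why the optimizer update needs Float32 can be seen with a toy simulation. This is a minimal sketch, not how any framework implements mixed precision: it assumes Python's `struct` module (format `"e"` for Float16, `"f"` for Float32) is a good enough stand-in for hardware rounding, and the per-step update of `1e-4` is a hypothetical value chosen for illustration.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (Float16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision (Float32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

update = 1e-4  # hypothetical per-step update (learning rate x gradient)
w_fp16 = to_fp16(1.0)
w_fp32 = to_fp32(1.0)

for _ in range(100):
    # Weight stored only in Float16: near 1.0 the spacing between Float16 values
    # is 2**-10 (about 0.001), so adding 1e-4 rounds straight back to 1.0.
    w_fp16 = to_fp16(w_fp16 + update)
    # Weight stored in Float32: the small update is representable and accumulates.
    w_fp32 = to_fp32(w_fp32 + update)

print(w_fp16)  # 1.0
print(w_fp32)  # approximately 1.01
```

The Float16 weight never moves, while the Float32 weight absorbs all 100 updates. This is the rounding problem that computing the update in Float32 is meant to avoid.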


Let’s review the different data types used in a model. With Float32, we allocate the first bit to represent the sign, the next 8 bits to represent the exponent, and the last 23 bits to represent the binary digits after the point (also called the Mantissa).
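To make the 1 / 8 / 23 bit layout concrete, here is a small sketch that reinterprets the 4 bytes of a Float32 value as an unsigned integer and masks out the three fields. The helper name `float32_fields` is hypothetical; only the standard-library `struct` module is assumed.

```python
import struct

def float32_fields(x: float) -> tuple[int, int, int]:
    """Split the Float32 encoding of x into its (sign, exponent, mantissa) bit fields."""
    # Pack x as a little-endian Float32, then read the same 4 bytes back as an unsigned int.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    sign = bits >> 31               # 1 bit
    exponent = (bits >> 23) & 0xFF  # next 8 bits
    mantissa = bits & 0x7FFFFF      # last 23 bits
    return sign, exponent, mantissa

s, e, m = float32_fields(-6.25)
print(s, format(e, "08b"), format(m, "023b"))
# 1 10000001 10010000000000000000000
```

Here -6.25 is -1.5625 x 2^2: the sign bit is 1, the stored exponent is 2 + 127 = 129, and the Mantissa holds the binary digits of 0.5625 (0.1001 in binary).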

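We can go from the bits representation to the decimal representation by using the simple formula (valid for normal numbers, i.e. when the exponent bits are neither all 0s nor all 1s):

$$x = (-1)^{\text{sign}} \times 2^{\text{exponent} - 127} \times \left(1 + \frac{\text{mantissa}}{2^{23}}\right)$$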
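As a sanity check, the standard IEEE 754 formula for normal Float32 numbers, x = (-1)^sign x 2^(exponent - 127) x (1 + mantissa / 2^23), can be applied by hand to a bit pattern and compared with what `struct` decodes. This is a sketch: `decode_float32` is a hypothetical helper, and zero, subnormals, infinity, and NaN are deliberately rejected because they follow different rules.

```python
import struct

def decode_float32(bits: int) -> float:
    """Apply (-1)^s * 2^(e - 127) * (1 + m / 2^23) to a normal Float32 bit pattern."""
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF
    mantissa = bits & 0x7FFFFF
    # exponent == 0 (zero/subnormals) and exponent == 255 (inf/NaN) are not handled here.
    if not 0 < exponent < 255:
        raise ValueError("only normal Float32 numbers are handled here")
    return (-1) ** sign * 2.0 ** (exponent - 127) * (1 + mantissa / 2**23)

(bits,) = struct.unpack("<I", struct.pack("<f", 3.14159))
print(decode_float32(bits))                              # Float32 value closest to 3.14159
print(struct.unpack("<f", struct.pack("<I", bits))[0])   # same value, decoded by struct
```

Every step of the formula is exact in Python's 64-bit floats, so both lines print the identical value.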

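Float16 uses 1 bit for the sign, 5 bits for the exponent, and 10 bits for the Mantissa, with the formula:

$$x = (-1)^{\text{sign}} \times 2^{\text{exponent} - 15} \times \left(1 + \frac{\text{mantissa}}{2^{10}}\right)$$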
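The same decoding works for Float16 with a 5-bit exponent (bias 15) and a 10-bit Mantissa, i.e. x = (-1)^sign x 2^(exponent - 15) x (1 + mantissa / 2^10). A minimal sketch, with `decode_float16` as a hypothetical helper and `struct`'s half-precision `"e"` format used as a cross-check:

```python
import struct

def decode_float16(bits: int) -> float:
    """Apply (-1)^s * 2^(e - 15) * (1 + m / 2^10) to a normal Float16 bit pattern."""
    sign = bits >> 15
    exponent = (bits >> 10) & 0x1F  # 5 bits, bias 15
    mantissa = bits & 0x3FF         # 10 bits
    if not 0 < exponent < 31:
        raise ValueError("only normal Float16 numbers are handled here")
    return (-1) ** sign * 2.0 ** (exponent - 15) * (1 + mantissa / 2**10)

bits = 0x3C00  # 0 01111 0000000000
print(decode_float16(bits))                              # 1.0
print(struct.unpack("<e", struct.pack("<H", bits))[0])   # 1.0, decoded by struct
print(decode_float16(0x7BFF))                            # 65504.0, the largest Float16
```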

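Brain Float 16 (BFloat16) is another float representation in 16 bits. It gives up decimal precision but keeps essentially the same range as Float32: we have 1 bit for the sign, 8 bits for the exponent, and 7 bits for the Mantissa, with the same conversion formula as Float32 (exponent bias 127), except that the Mantissa is divided by 2^7 instead of 2^23.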
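Because BFloat16 keeps the Float32 sign and 8-bit exponent, a BFloat16 bit pattern is just the upper half of a Float32 pattern. The sketch below decodes BFloat16 bits with x = (-1)^sign x 2^(exponent - 127) x (1 + mantissa / 2^7) and checks the result by appending 16 zero bits and reading the value as a Float32. Both helper names are hypothetical; Python has no built-in BFloat16 type, so only `struct` is assumed.

```python
import struct

def decode_bfloat16(bits: int) -> float:
    """Apply (-1)^s * 2^(e - 127) * (1 + m / 2^7) to a normal BFloat16 bit pattern."""
    sign = bits >> 15
    exponent = (bits >> 7) & 0xFF  # 8 bits, same bias (127) as Float32
    mantissa = bits & 0x7F         # 7 bits
    if not 0 < exponent < 255:
        raise ValueError("only normal BFloat16 numbers are handled here")
    return (-1) ** sign * 2.0 ** (exponent - 127) * (1 + mantissa / 2**7)

def bfloat16_as_float32(bits: int) -> float:
    """Append 16 zero Mantissa bits and interpret the result as a Float32."""
    return struct.unpack("<f", struct.pack("<I", bits << 16))[0]

bits = 0x4049  # 0 10000000 1001001
print(decode_bfloat16(bits))      # 3.140625
print(bfloat16_as_float32(bits))  # 3.140625
```

With only 7 Mantissa bits, the closest BFloat16 to pi is 3.140625, which shows the loss of decimal precision.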

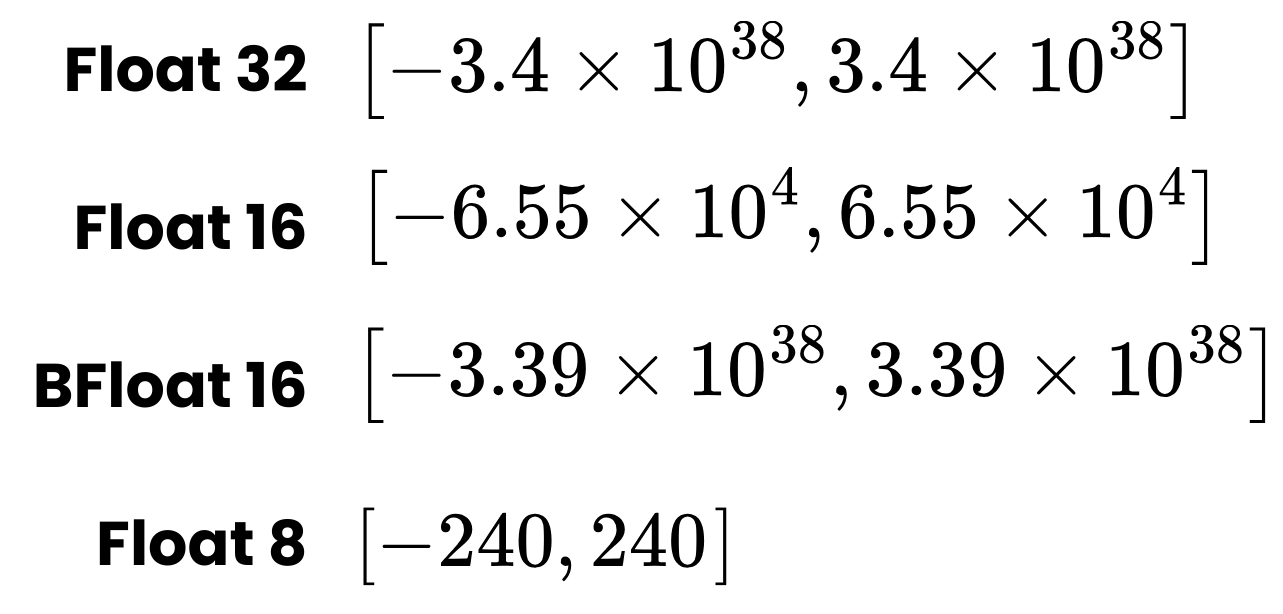

Float32 can range between about -3.4e38 and 3.4e38, while Float16 only ranges between about -6.55e4 and 6.55e4 (its largest finite value is 65,504, so a much smaller range!), and BFloat16 has nearly the same range as Float32 (about ±3.39e38).
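Those limits follow directly from the bit layouts: the largest finite value uses the largest exponent that is not reserved for infinity/NaN (all 1s except the last bit) and an all-ones Mantissa, giving (2 - 2^-mantissa_bits) x 2^max_exponent. A short sketch, where `max_normal` is a hypothetical helper:

```python
def max_normal(exponent_bits: int, mantissa_bits: int) -> float:
    """Largest finite value for an IEEE-style format with the given field widths."""
    bias = 2 ** (exponent_bits - 1) - 1             # 127 for 8 bits, 15 for 5 bits
    max_exponent = (2 ** exponent_bits - 2) - bias  # the all-ones exponent is reserved
    return (2 - 2.0 ** -mantissa_bits) * 2.0 ** max_exponent

for name, e_bits, m_bits in [("Float32", 8, 23), ("Float16", 5, 10), ("BFloat16", 8, 7)]:
    print(f"{name:9s} max = {max_normal(e_bits, m_bits):.4g}")
```

The exponent width alone sets the order of magnitude, which is why Float32 and BFloat16 (both with 8 exponent bits) land near 3.4e38 while Float16 (5 exponent bits) stops at 65,504.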

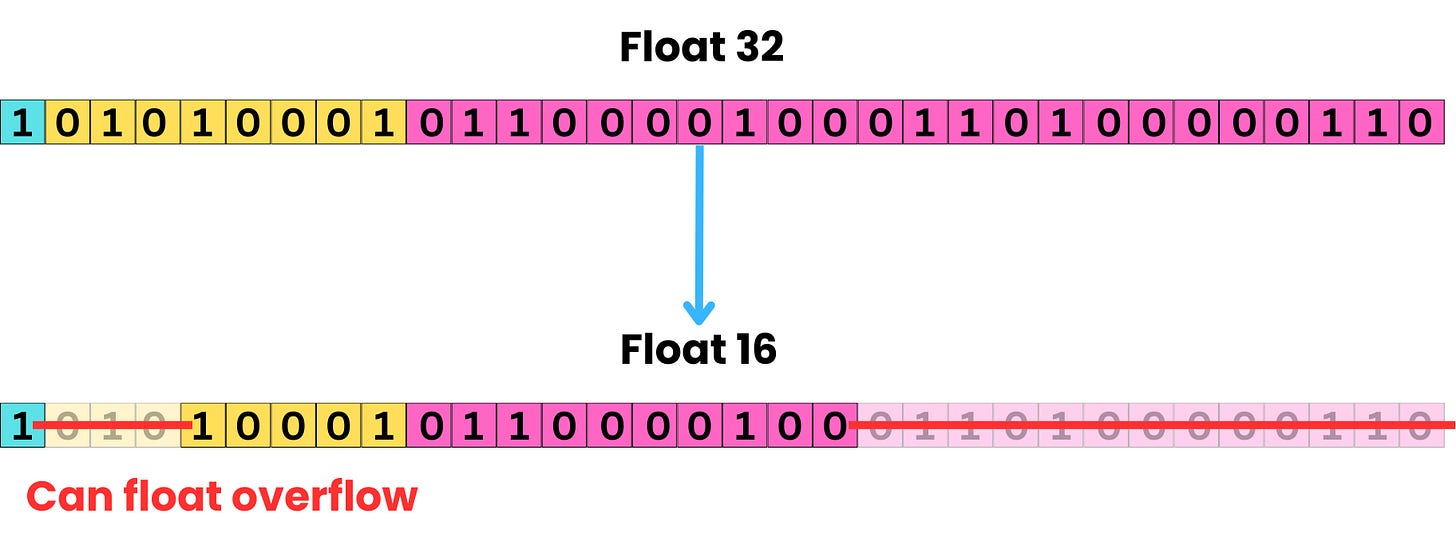

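To convert from Float32 to Float16, you need to fit the number into the 5 bits allocated for the exponent and the 10 bits allocated for the Mantissa. For the Mantissa, dropping the extra 13 bits just creates a rounding error, but if the magnitude of the Float32 number is greater than about 6.55e4 (the Float16 maximum is 65,504), the exponent cannot fit and you get a float overflow error (in IEEE 754 arithmetic, the result becomes infinity)! Very small magnitudes can likewise underflow to zero. So, it is quite possible to get conversion errors from Float32 to Float16.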
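Both failure modes can be reproduced with the standard library. In this sketch, `float32_to_float16` is a hypothetical helper built on `struct`: Python's `"e"` format raises `OverflowError` for values too large for Float16, whereas array libraries such as NumPy typically return infinity instead of raising.

```python
import struct

def float32_to_float16(x: float) -> float:
    """Round x to Float32, then to Float16, using struct's "f" and "e" formats."""
    x32 = struct.unpack("<f", struct.pack("<f", x))[0]
    return struct.unpack("<e", struct.pack("<e", x32))[0]

# Rounding error: only 10 Mantissa bits survive.
print(float32_to_float16(0.1))  # 0.0999755859375

# Overflow: 70000 is above the Float16 maximum of 65504.
try:
    float32_to_float16(70000.0)
except OverflowError as err:
    print("overflow:", err)
```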

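Converting from Float32 to BFloat16 is simple because both formats use the same 8-bit exponent: you just keep the sign, the exponent, and the first 7 bits of the Mantissa, and either drop (truncate) or round away the remaining 16 Mantissa bits.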
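Both options amount to simple operations on the 32-bit pattern. The sketch below shows plain truncation (keep the top 16 bits) and round-to-nearest-even, which many libraries use instead of truncation. The helper names are hypothetical, results are returned as Float32 values that are exactly representable in BFloat16, and NaN handling is omitted.

```python
import struct

def float32_bits(x: float) -> int:
    """Return the 32-bit pattern of x after rounding it to Float32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_float32(bits: int) -> float:
    """Interpret a 32-bit pattern as a Float32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def to_bfloat16_truncate(x: float) -> float:
    """Keep sign, 8-bit exponent and 7 Mantissa bits; drop the low 16 bits."""
    return bits_to_float32(float32_bits(x) & 0xFFFF0000)

def to_bfloat16_round(x: float) -> float:
    """Round-to-nearest-even on the 16 dropped bits (NaN handling omitted)."""
    bits = float32_bits(x)
    lsb = (bits >> 16) & 1  # last kept Mantissa bit, used to break ties toward even
    return bits_to_float32((bits + 0x7FFF + lsb) & 0xFFFF0000)

print(to_bfloat16_truncate(3.14159), to_bfloat16_round(3.14159))  # 3.140625 3.140625
print(to_bfloat16_truncate(70000.0), to_bfloat16_round(70000.0))  # 69632.0 70144.0
```

Unlike the Float16 case, 70000 converts without overflow; only precision is lost, and rounding halves the worst-case error compared with truncation.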

BFloat16 is a good choice because it avoids the overflow errors you can get when converting to Float16, while typically keeping enough precision for the forward and backward passes of the backpropagation algorithm.
