From Words to Tokens: The Byte-Pair Encoding Algorithm

Why do we keep talking about "tokens" in LLMs instead of words? It turns out that breaking words into sub-word units (tokens) works much better for model performance than using whole words!

So we could create tokens simply from the words in the input sequence. Well, actually, it is not the best strategy: it tends to create a very large vocabulary, it cannot cope with morphologically rich languages like Turkish or Finnish, it does not handle word variants such as plurals or tenses very well, and it adapts poorly to an evolving language because new words fall outside the vocabulary.

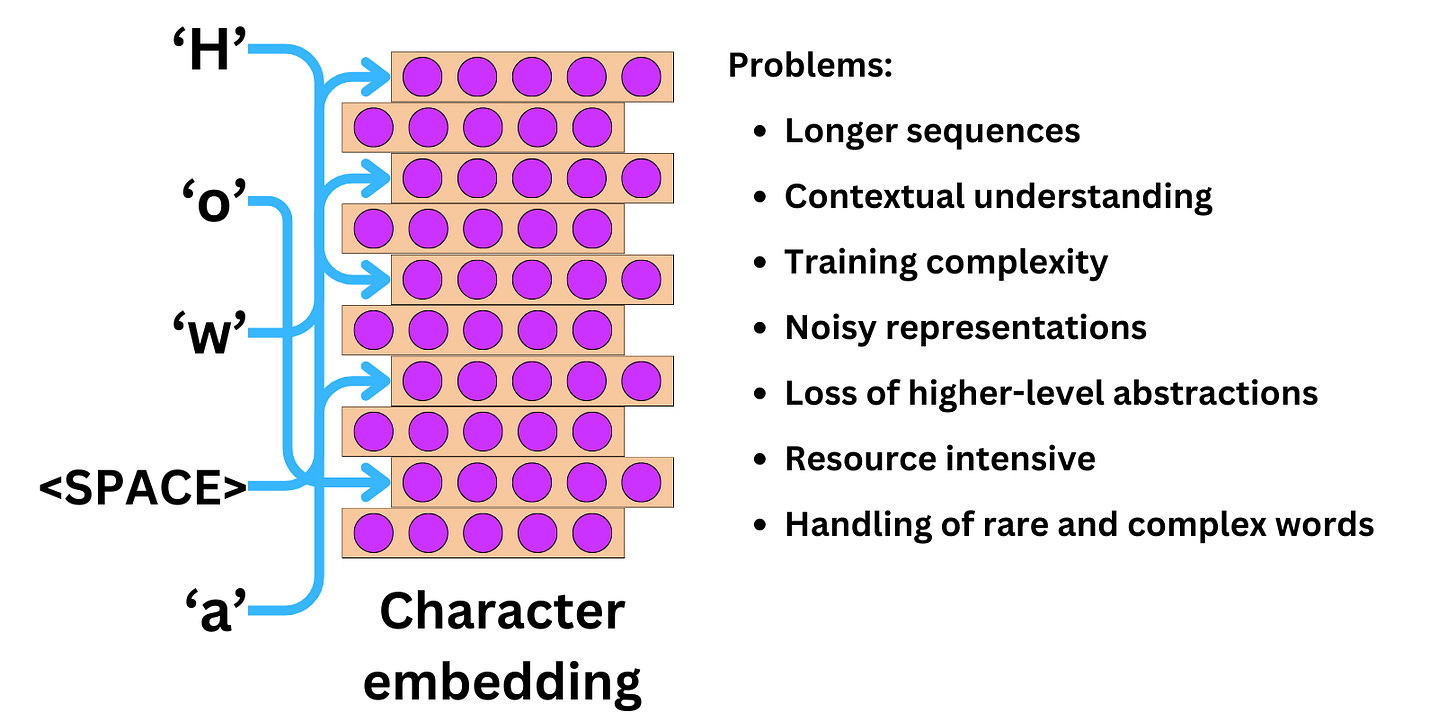

So, we could instead use the characters in a sequence as tokens. Well, actually, that is not great either. The sequences become much longer, and we need to train models longer just for them to relearn the structure of words, which makes the training a bit noisier.
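To make the trade-off concrete, here is a small sketch that tokenizes the same text at the word level and at the character level. The sentence is a made-up toy corpus chosen for illustration, not real training data.

```python
# Toy corpus (hypothetical, for illustration only).
corpus = "the cat sat on the mat the cats sat on the mats"

# Word-level: one token per whitespace-separated word.
word_tokens = corpus.split()
word_vocab = set(word_tokens)

# Character-level: one token per character, spaces included.
char_tokens = list(corpus)
char_vocab = set(char_tokens)

print(len(word_tokens), len(word_vocab))  # 12 tokens, 7 vocabulary entries
print(len(char_tokens), len(char_vocab))  # 47 tokens, 10 vocabulary entries

# "cat" and "cats" are unrelated entries in the word-level vocabulary,
# so the model has to learn the plural separately for every noun.
print("cat" in word_vocab, "cats" in word_vocab)
```

Even on this tiny example the pattern shows up: words give short sequences but a vocabulary that grows with every new variant, while characters give a tiny vocabulary but sequences about four times longer.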

It tends to be more advantageous to work with sub-word level tokens when we train models. The typical strategy used in most modern LLMs (GPT-1, GPT-2, GPT-3, ChatGPT, Llama 2, etc.) is the Byte Pair Encoding (BPE) strategy. The idea is to use sub-word units that appear often in the training data as tokens. The algorithm works as follows:

- We start with a character-level tokenization

- We count the frequency of each pair of adjacent tokens

- We merge the most frequent pair

- We repeat the process until the dictionary is as big as we want it to be
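The four steps above can be sketched in a few lines of Python. This is a minimal toy version and not the tokenizer of any of the models mentioned: the function name `train_bpe`, the word list, and the tie-breaking rule (first pair seen wins) are assumptions made for illustration, and the sketch works on characters inside words without end-of-word markers or byte-level handling.

```python
from collections import Counter

def train_bpe(words, num_merges):
    """Learn up to `num_merges` BPE merges from a list of words (toy sketch)."""
    # Step 1: character-level tokenization; each distinct word is stored once with its count.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Step 2: count how often each pair of adjacent symbols occurs.
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        # Step 3: pick the most frequent pair (ties go to the pair seen first).
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Replace every occurrence of that pair with the merged symbol.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
        # Step 4: the loop repeats until we have performed the requested number of merges.
    return merges, vocab

# Hypothetical toy corpus: word frequencies chosen for illustration.
words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges, vocab = train_bpe(words, num_merges=4)
print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o'), ('lo', 'w')]
for symbols, freq in vocab.items():
    print(freq, symbols)
```

On this corpus the frequent suffix "est" and the frequent word "low" each end up as a single token after four merges, while the rarer "lower" is still split into "low", "e", "r".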

Let’s see an example:

Let’s look at the second iteration:

We can iterate this process as many times as we need:

The size of the dictionary becomes a hyperparameter that we can adjust based on our training data. For example, GPT-1 uses ~40K merges, GPT-2, GPT-3, and ChatGPT have a dictionary size of ~50K, and Llama 2 has only 32K.
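To see why the dictionary size acts as a hyperparameter, the sketch below runs a toy BPE with different numbers of merges and reports the resulting vocabulary size and the total number of tokens needed to write the corpus. The helper names `merge_pair` and `bpe_stats` and the word list are hypothetical, and the vocabulary size is computed under the usual convention that each merge adds one new symbol to the base characters.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every occurrence of `pair` in a tuple of symbols by the merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if symbols[i:i + 2] == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def bpe_stats(words, num_merges):
    """Return (vocabulary size, total token count) after up to `num_merges` merges."""
    vocab = Counter(tuple(w) for w in words)
    base_symbols = {ch for symbols in vocab for ch in symbols}
    merges_done = 0
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # nothing left to merge
        best = max(pair_counts, key=pair_counts.get)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
        merges_done += 1
    total_tokens = sum(len(symbols) * freq for symbols, freq in vocab.items())
    # Assumed convention: base characters plus one new symbol per merge.
    return len(base_symbols) + merges_done, total_tokens

# Hypothetical toy corpus, for illustration only.
words = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
for k in (0, 2, 4, 8):
    vocab_size, total_tokens = bpe_stats(words, k)
    print(f"merges={k}: vocab size={vocab_size}, tokens={total_tokens}")
```

More merges mean a larger dictionary (and a larger embedding table) but shorter token sequences, which is the trade-off that the choice of dictionary size controls.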

Watch the video for more information!
