GPT-4: The largest Large Language Model yet!

Let's crack the secret!

GPT-4 is here and it is probably the biggest Large Language Model yet! Much of the information is secret, but there are a lot of educated guesses we can make. We are going to look at:

The Architecture

The Training process

The number of parameters

When the creators start to fear their creation

GPT-4 was released just yesterday, on Pi Day (March 14th, 2023), and it looks delicious! Well, actually, so far you can only join the waiting list for API access. But at least we now have more information to separate fantasy from reality. Here is the GPT-4 technical paper: “GPT-4 Technical Report”. The main features are:

It is multi-modal with text and image data as input

It is fine-tuned to mitigate harmful content

It is bigger!

Let’s dig into it!

The Architecture

OpenAI finished training GPT-4 back in August 2022 and spent the past 7 months studying it and making sure it is "safe" for launch! GPT-4 takes text and image prompts as input and generates text.

From the paper:

“Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.”

OpenAI is trying to keep the architecture a secret, but there are many guesses we can make. First, they made it clear that it is a Transformer model, and following the GPT-1, GPT-2, and GPT-3 tradition, it is very likely a decoder-only architecture pre-trained on the next-word prediction task. To include image inputs, the image has to be encoded into the latent space, presumably with a ConvNet or a Vision Transformer. The ViT paper (“An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”) showed that Vision Transformers outperform ConvNets when trained on enough data, and using the attention mechanism on image patches would also make it natural to build cross "text-image" attention.
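To make the guess above concrete, here is a rough NumPy sketch (not OpenAI's implementation) of how an image could enter a decoder-only Transformer: cut it into ViT-style 16x16 patches, project each patch into the same embedding space as the text tokens, and concatenate everything into one sequence processed under a causal mask. The function names, the embedding size, the random weights standing in for learned ones, and the choice to place image tokens before text tokens are all assumptions made for illustration.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patch x patch tiles (row-major)."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)        # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)    # (num_patches, patch * patch * C)


def build_sequence(image, text_ids, patch=16, d_model=64, vocab_size=1000, seed=0):
    """Embed image patches and text tokens into one (num_patches + len(text_ids), d_model) array.

    Random matrices stand in for learned weights; sizes are hypothetical and
    position embeddings are omitted for brevity.
    """
    rng = np.random.default_rng(seed)
    w_patch = rng.normal(0.0, 0.02, size=(patch * patch * image.shape[2], d_model))
    token_table = rng.normal(0.0, 0.02, size=(vocab_size, d_model))
    image_tokens = patchify(image, patch) @ w_patch      # linear patch embedding, as in ViT
    text_tokens = token_table[np.asarray(text_ids)]       # ordinary token-embedding lookup
    return np.concatenate([image_tokens, text_tokens], axis=0)  # image first (assumption)


def causal_mask(n: int) -> np.ndarray:
    """mask[i, j] is True when position i may attend to position j, i.e. j <= i."""
    return np.tril(np.ones((n, n), dtype=bool))
```

With a 224x224 RGB image and three text tokens, the sequence holds 14 x 14 = 196 patch tokens followed by 3 text tokens. Because the image comes first, the causal mask lets every text token attend to every patch, which is one simple way to obtain cross "text-image" attention inside a single decoder.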

The Training

We know they used the same training method as InstructGPT and ChatGPT, but GPT-4 was further fine-tuned with a set of rule-based reward models (RBRMs):

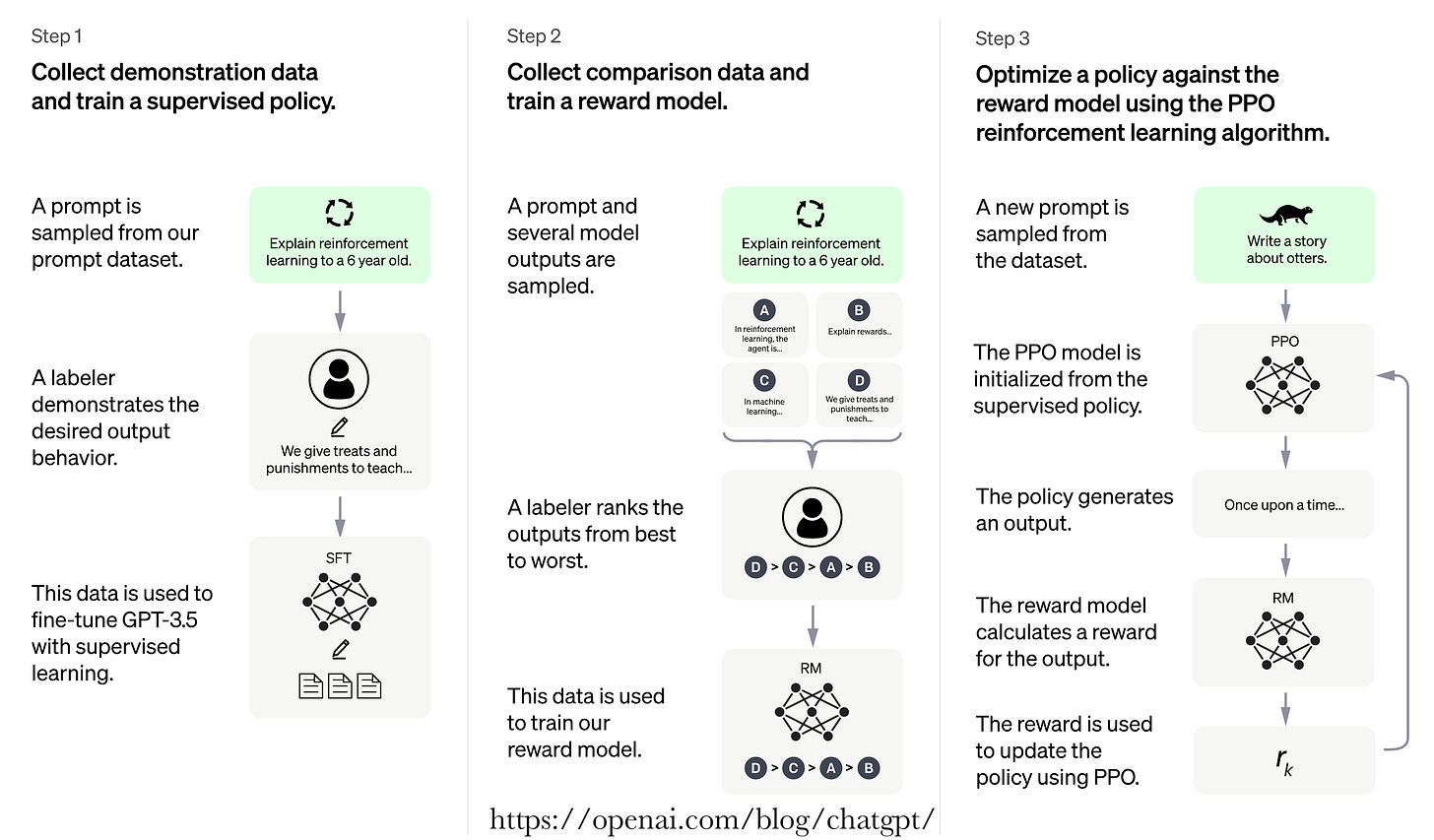

They first pre-trained the model to predict the next word using tons of internet data and data licensed from third-party providers.
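Next-word prediction boils down to a cross-entropy loss in which the prediction made at position t is scored against the token at position t + 1. A minimal NumPy sketch, assuming the model outputs a (T, V) matrix of logits over a vocabulary of size V; the function name is hypothetical:

```python
import numpy as np


def next_token_loss(logits: np.ndarray, token_ids: np.ndarray) -> float:
    """Average cross-entropy of predicting token t + 1 from position t.

    logits:    (T, V) scores produced at each of the T positions.
    token_ids: (T,) the input sequence; position t is scored against token_ids[t + 1].
    """
    preds = logits[:-1]                                   # the last position has no next token
    targets = token_ids[1:]
    preds = preds - preds.max(axis=1, keepdims=True)      # shift for numerical stability
    log_probs = preds - np.log(np.exp(preds).sum(axis=1, keepdims=True))  # log-softmax
    return float(-log_probs[np.arange(len(targets)), targets].mean())
```

A model that knows nothing (all logits equal) scores log(V), and the loss falls toward 0 as the model puts more probability on the actual next word.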

Then they sampled typical human prompts, asked labelers to write down the correct outputs, and fine-tuned the model on these demonstrations with supervised learning.
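Supervised fine-tuning reuses the same next-word objective, this time on the labeler-written demonstrations. The sketch below assumes, as is common practice but not confirmed for GPT-4, that the loss is computed only on the response tokens and not on the prompt; `sft_loss` and `prompt_len` are hypothetical names.

```python
import numpy as np


def sft_loss(logits: np.ndarray, token_ids: np.ndarray, prompt_len: int) -> float:
    """Next-token cross-entropy restricted to the labeler-written response.

    token_ids holds the prompt tokens followed by the response tokens.
    Targets that fall inside the prompt are masked out (assumed convention).
    """
    if not 1 <= prompt_len < len(token_ids):
        raise ValueError("need at least one prompt token and one response token")
    shifted = logits[:-1] - logits[:-1].max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    targets = token_ids[1:]
    nll = -log_probs[np.arange(len(targets)), targets]
    # nll[j] scores token_ids[j + 1]; keep it only if that token belongs to the response
    keep = np.arange(1, len(token_ids)) >= prompt_len
    return float(nll[keep].mean())
```

Masking the prompt means the model is only graded on how well it reproduces the labeler's answer, not on predicting the question itself.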

Then they sampled human prompts and generated multiple outputs from the model for each one. A labeler was asked to rank those outputs, and the resulting data was used to train a reward model.
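In the InstructGPT paper, the reward model is trained on every pair drawn from the K ranked outputs of a prompt, pushing the score of the preferred output above the other: each pair contributes -log σ(r_better - r_worse), averaged over the K(K-1)/2 pairs. A small pure-Python sketch of that pairwise loss for one prompt; whether GPT-4 uses exactly this form is an assumption, and the function name is hypothetical.

```python
import math
from itertools import combinations


def ranking_loss(rewards_in_rank_order):
    """Pairwise ranking loss for one prompt whose K outputs were ranked best-to-worst.

    rewards_in_rank_order[i] is the reward model's score for the i-th ranked output.
    """
    pairs = list(combinations(range(len(rewards_in_rank_order)), 2))
    if not pairs:
        raise ValueError("need at least two ranked outputs")
    total = 0.0
    for better, worse in pairs:  # combinations yields i < j, so i was ranked above j
        diff = rewards_in_rank_order[better] - rewards_in_rank_order[worse]
        # -log(sigmoid(diff)) = softplus(-diff), written in a numerically stable way
        total += max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))
    return total / len(pairs)
```

When the reward model scores outputs in the same order as the labeler, the loss is small; scoring them in reverse order makes it large, so minimising it teaches the model to reproduce human preferences.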

They then sampled more human prompts and used them to fine-tune the supervised fine-tuned model with Proximal Policy Optimization (PPO) (“Proximal Policy Optimization Algorithms”), a Reinforcement Learning algorithm. Each prompt is fed to the PPO model, the reward model assigns a reward value to the generated output, and the PPO model is iteratively fine-tuned using the rewards and the prompts.
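The heart of that PPO step can be sketched in a few lines. Following InstructGPT, the sketch assumes the reward-model score is reduced by a KL penalty that keeps the policy close to the supervised fine-tuned (SFT) model, and that the update maximises PPO's clipped surrogate objective. The function names and the values of `beta` and `eps` are illustrative, not GPT-4's actual settings.

```python
import math


def shaped_reward(rm_score: float, logp_policy: float, logp_sft: float, beta: float = 0.02) -> float:
    """Reward-model score minus a KL-style penalty for drifting away from the SFT model.

    logp_policy / logp_sft: log-probability of the sampled output under each model.
    beta is an illustrative penalty weight.
    """
    return rm_score - beta * (logp_policy - logp_sft)


def ppo_clip_objective(logp_new: float, logp_old: float, advantage: float, eps: float = 0.2) -> float:
    """PPO clipped surrogate for one sample (to be maximised).

    ratio = pi_new(a|s) / pi_old(a|s); clipping removes the incentive to move
    the ratio outside [1 - eps, 1 + eps] in a single update.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)
```

For example, with a probability ratio of 1.5 and a positive advantage, the objective is capped at 1.2 times the advantage, which keeps each fine-tuning step conservative.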

The RBRMs are there to mitigate harmful behaviors. They are a set of zero-shot GPT-4 classifiers that provide an additional reward signal to the PPO model. The model is fine-tuned such that it is rewarded for refusing to generate harmful content. For example, GPT-4 will refuse to explain how to build a bomb if prompted to do so.
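The GPT-4 report describes an RBRM as classifying a response against a human-written rubric, giving as an example the categories: a refusal in the desired style, a refusal in an undesired (e.g. evasive) style, a response containing disallowed content, or a safe non-refusal response. Refusals are rewarded on harmful prompts, and not refusing is rewarded on prompts known to be safe. The sketch below assumes the zero-shot GPT-4 classifier has already produced the label and simply turns it into a bonus added to the reward-model score. The bonus values, the weight, and all names are hypothetical; OpenAI has not published them.

```python
from enum import Enum


class RbrmLabel(Enum):
    DESIRED_REFUSAL = "refusal in the desired style"
    UNDESIRED_REFUSAL = "refusal in an undesired style (e.g. evasive)"
    DISALLOWED_CONTENT = "contains disallowed content"
    SAFE_COMPLIANCE = "safe, non-refusal response"


# Hypothetical bonus tables; the real values are not public.
HARMFUL_PROMPT_BONUS = {
    RbrmLabel.DESIRED_REFUSAL: 1.0,      # refusing a harmful request is rewarded
    RbrmLabel.UNDESIRED_REFUSAL: 0.0,
    RbrmLabel.DISALLOWED_CONTENT: -1.0,  # producing harmful content is penalised
    RbrmLabel.SAFE_COMPLIANCE: 0.0,
}
SAFE_PROMPT_BONUS = {
    RbrmLabel.DESIRED_REFUSAL: -1.0,     # over-refusing a safe request is penalised
    RbrmLabel.UNDESIRED_REFUSAL: -1.0,
    RbrmLabel.DISALLOWED_CONTENT: -1.0,
    RbrmLabel.SAFE_COMPLIANCE: 1.0,      # helpfully answering a safe request is rewarded
}


def total_reward(rm_score: float, label: RbrmLabel, prompt_is_harmful: bool, weight: float = 1.0) -> float:
    """Combine the reward-model score with the RBRM bonus for the classified label."""
    table = HARMFUL_PROMPT_BONUS if prompt_is_harmful else SAFE_PROMPT_BONUS
    return rm_score + weight * table[label]
```

The same refusal thus earns a bonus on a bomb-making prompt but a penalty on a harmless one, which is how the extra signal discourages both harmful answers and needless refusals.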

The number of parameters

OpenAI CEO Sam Altman, on the rumor that GPT-4 could have 100 trillion parameters: “people are begging to be disappointed and they will be.”

OpenAI is trying to hide the number of parameters, but it is actually not too difficult to estimate! In Figure 1 of the GPT-4 Technical Report, they plot the final loss as a function of the compute used to train the model.
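The report fits that curve on smaller models with a power law that includes an irreducible-loss term, L(C) = aC^b + c, and extrapolates it to GPT-4's compute. The NumPy sketch below shows one simple way to fit such a curve (grid-search over c, then a log-log least-squares fit for a and b) and then turns a compute budget in FLOPs into a rough parameter count. That conversion assumes the common approximation C ≈ 6ND training FLOPs for N parameters and D tokens, plus a Chinchilla-style D ≈ 20N; neither is confirmed by OpenAI, the compute axis in the figure is relative rather than absolute as far as we can tell, and the function names are hypothetical.

```python
import numpy as np


def fit_power_law(compute: np.ndarray, loss: np.ndarray, c_grid: np.ndarray):
    """Fit L(C) = a * C**b + c.

    For each candidate irreducible loss c, solve log(L - c) = log(a) + b * log(C)
    by least squares, and keep the candidate with the smallest squared error in L.
    """
    log_c = np.log(compute)
    best = None
    for c in c_grid:
        if np.any(loss <= c):
            continue                                   # log(L - c) would be undefined
        b, log_a = np.polyfit(log_c, np.log(loss - c), 1)  # returns [slope, intercept]
        resid = np.sum((np.exp(log_a) * compute ** b + c - loss) ** 2)
        if best is None or resid < best[0]:
            best = (resid, float(np.exp(log_a)), float(b), float(c))
    if best is None:
        raise ValueError("every candidate c is at or above some observed loss")
    _, a, b, c = best
    return a, b, c


def params_from_compute(flops: float, tokens_per_param: float = 20.0) -> float:
    """Rough N from C ~= 6 * N * D with D = tokens_per_param * N (both assumptions)."""
    return float(np.sqrt(flops / (6.0 * tokens_per_param)))
```

Fitted on the small models only, the curve can then be evaluated at GPT-4's compute to check how well it predicts the final loss, and an outside estimate of that compute in FLOPs can be passed to `params_from_compute` for a ballpark parameter count.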