How is AI making Autonomous Driving Possible

The Architecture

The High-level path planning

Behavior arbitration

The Low-level path planning

Motion controllers

The Perception module

The Localization module

The Architecture

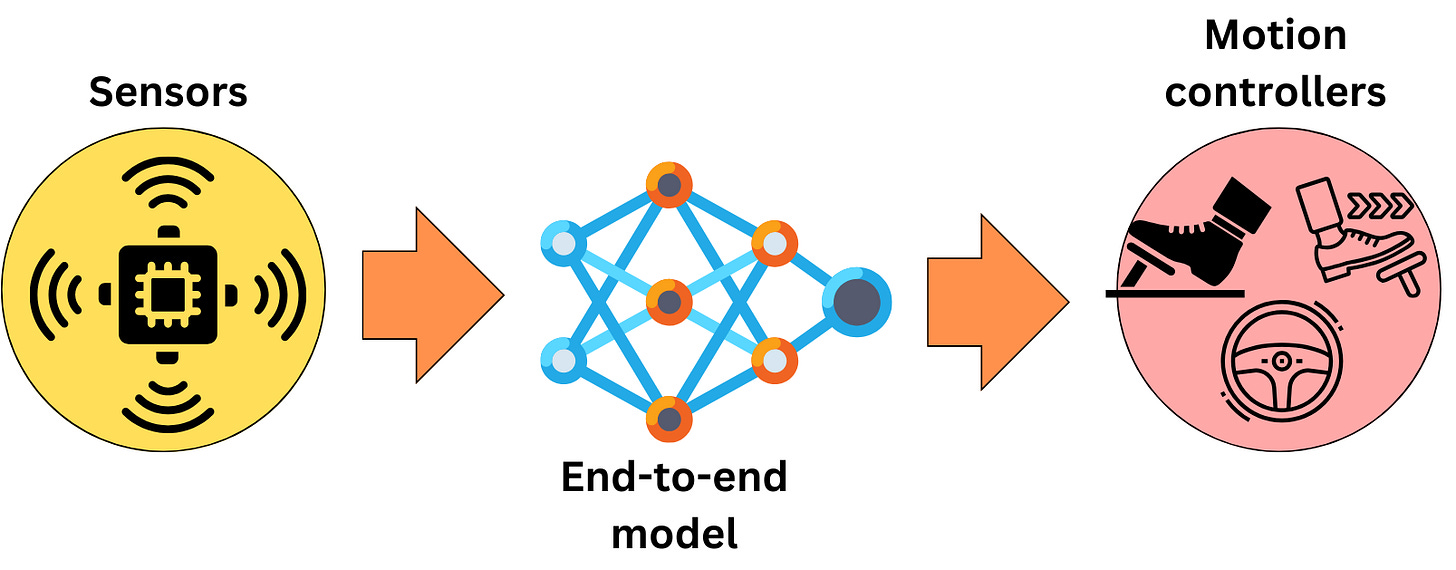

Let’s say we want to design an Autonomous Driving system. This means that we want the car to be able to drive autonomously from point A to point B while adapting to the possible unpredictable events or obstacles that may occur during the trip. As low-level control, we can only accelerate, brake, and steer the car. As a way to capture information, we have sensors such as cameras, LiDAR (Light Detection and Ranging) detectors, radars, and GPS. How do we use the sensor information to execute a series of motion controls that lead to moving from A to B?

Typically, the problem can be broken down into the following pieces:

High-level path planning: based on the current location and state of the car within the environment, we need to establish a series of small steps to take us from A to B. This means establishing all the different roads and intersections that we need to go through, as well as the different lane changes that will need to happen to turn at the different intersections safely. Because the path can be recomputed at any point during the trip, the system needs to know exactly where the car is with respect to the local road, lanes, and destination. This process can be designed to optimize for some metrics like time to destination or energy consumption.

Behavior arbitration: at any point of the trip, the system needs to be able to adjust in real-time to unplanned events. You can think of behavior arbitration as small real-time corrections to the pre-planned path. This module makes decisions on actions to take to bring the passengers to their destination safely. The different actions could be:

Line-changes

U-turns

Emergency stops

Merging onto highways

Overtaking

…

The word "arbitration" implies a level of dynamic decision-making. It suggests that the system is capable of evaluating the current context and adapting its behavior in real-time to navigate complex and dynamic environments effectively.

Low-level planning: once the action has been planned, either by the High-level path planning module or the Behavior arbitration module, the optimal trajectory has to be calculated by taking into consideration the current speed and location of the car and the surrounding vehicles and obstacles.

Motion controllers: Once the trajectory has been calculated, it has to be executed using the different controls available. The motion controller translates the calculated trajectory into a set of low-level controls such as acceleration, braking, and steering.

Localization: to accurately establish decisions, the system needs to know exactly where it is located with respect to its surrounding environment. It needs to know where are the lanes, the other vehicles, the walls, etc. This implies that the system can perceive and categorize those different elements. It also needs to know where it is with respect to the destination to readjust the high-level path planning if need be.

Perception: the system needs to accurately recognize other vehicles, pedestrians, lanes, road signs, etc, by using the different sensor information it continuously receives.

Sensors: The sensor signals need to be treated and accurately combined to be used as inputs to the perception and localization modules.

So as inputs, we have the sensor data, and as output, we have the low-level motion controls that take us from A to B. But why do we need to break down the problem into small pieces? After all, when we think about using machine learning to solve a problem, it might be more accurate to architect a model that takes the input we have and returns the predictions we need.

This is one of the approaches taken to solve this problem, but in general, this leads to some difficulties. To build a model, we need labeled data, and it is difficult to acquire a lot of labeled images with motion control labels. It is much easier to acquire a lot of images labeled with what is visible on it. If we have very accurate models when it comes to the perception and localization tasks, the other tasks can be achieved with simpler models or even no ML models at all. The ability to isolate the different tasks allows for using very specialized modules that may or may not require ML modeling and we can work on improving the different modules independently from each other.

The High-level path planning

High-level path planning is about establishing the set of actions that will need to happen for the vehicle to reach the destination. The minimum output would be a list of specific geographic locations (waypoints) or decision points that the vehicle should pass through. A routing engine would try to find a path from A to B that optimizes a certain optimization function. For example, we could want to optimize by minimizing the time to go from A to B. A routing engine can work as follows:

The different road segments and intersections work as a graph. The intersections are the nodes, and the road segments are the edges connecting them.

Based on current traffic information and historical data, we produce a time estimate to go from one node to another neighboring node. Each road segment receives an edge weight matching those time estimates.

We can now use graph algorithms to find the path that minimizes the total time to travel from the current location to the destination.

Beyond just the path, we need to establish an exhaustive list of actions along each segment. For example, if we know we will need to turn right, the car will need to merge into the right lane, stop at the possible stop sign or light, and turn right.

At any point during the trip, the path and actions could need to be recalculated due to unexpected events such as road closures or accidents. As a consequence, high-level path planning is very dependent on the vehicle being able to perceive where it is located with respect to its environment and the destination.

The problem reduces to finding the optimal path in a graph. To make sure we can include actions, we can define the edges through the topological information as well as the set of actions that could be taken. For example, between two neighboring nodes in the graph, we could model multiple alternative routes depending on the different combinations of actions that could be taken.

In principle, there is no need to use machine learning to solve the basic form of this problem. We could use any graph path search algorithm. A typical path search algorithm is the A* search algorithm, and it is likely that most routing engines utilize custom versions of it. We iteratively explore the graph in a best-first search manner by taking paths that (1) minimize the time spent from the origin and (2) minimize the distance to the destination. To compute the distance to the destination, we can use the Haversine distance formula with the latitude and longitude values. The algorithm stops when the destination is reached. It is fast, but it is only an approximation to the optimal solution as we avoid exploring all possible paths and focus only on the most promising. Here is the pseudo-code:

nodes_to_explore = []

explored_nodes = []

# initiate the value attribute of the node

start_node.value = 0

# we start from the starting node

nodes_to_explore.append(start_node)

while nodes_to_explore:

# get the node with smallest value

node = nodes_to_explore.pop_smallest_value()

explored_nodes.append(node)

# if we reached the goal, we can return the whole path

if node is goal:

return 'goal reached'

# else we explore the children of the current node

for child in node.children:

if child in explored_nodes:

continue

child.time_from_start = node.time_from_start + time_to_child

child.distance_to_end = distance from child to end

# the node's value is the key metric of the problem!

# We want to take the path that both minimize the time

# from the starting point and is closer to the end point.

# By minimizing on the value, to take a good guess on the

# next node to explore first.

child.value = child.time_from_start + child.distance_to_end

nodes_to_explore.append(child)