How To Bring Machine Learning Projects to Success

[Webinar] Accelerate AI Experimentation and Innovation with Gretel and Lambda Labs (Sponsored)

Join this virtual event to learn how Gretel and Lambda Labs together unlock faster experimentation so teams can easily vet approaches, fail fast, and be much more agile in delivering an LLM solution that works.

To build a successful machine learning product, you need to understand how to manage a machine learning project. This takes a lot of soft skills, from product discovery passing by the project planning and finally the execution, there is a lot more to building a machine learning solution than just knowing some algorithms!

Watch the video for the full content!

Machine learning is all about developing models? Think again! Machine learning is risky and costly, and we cannot get started on a project without planning, coordination, and collaboration. Here is my typical template for leading a machine learning project to success. Here are the critical steps, but each project may need some adjustment depending on the specificity of the project.

Product Discovery: What is the size of your market, and for what ML solution?

Like any business endeavor, building new software requires a deep understanding of your customer's problems and how likely those problems are to be addressed by a machine learning solution. This understanding of the problem is critical to estimating the potential monetary gain that such a new product or feature is likely to generate if implemented. It is also important to ideate if there is a feasible ML method that is likely to solve the established problem. This step is typically done as a collaboration between a technical lead and a product manager.

Assess the data infrastructure: This step will really define the project's complexity

It is critical to assess the quality of the data and the data infrastructure as soon as possible. This step will heavily influence the cost of the project or even its feasibility. The faster this assessment is done, the faster engineering work can be planned accordingly. This step is typically performed as a collaboration between a technical lead and the data engineering team.

Work in stages: Reduce the risk

Machine Learning projects can easily be broken into 3 stages:

The Minimum Viable Product (MVP): fast development, low cost, low performance. To assess the viability of such a project.

The growth stage: higher costs, greater returns. To establish the foundations of a successful product.

The maturity stage: marginal gains, high costs. To squeeze as much gain as possible.

The investment in each stage and prioritization should be motivated by the previous stage's success. The MVP stage may require more time in the discovery of the data.

Prioritize: Maximize gains, Minimize cost

Not all machine learning projects are created equal! Utilize the product discovery and infrastructure assessment steps to estimate potential gains and costs. Optimize for high gain and low complexity/cost. Prioritize the projects with a high propensity for success and discard the others!

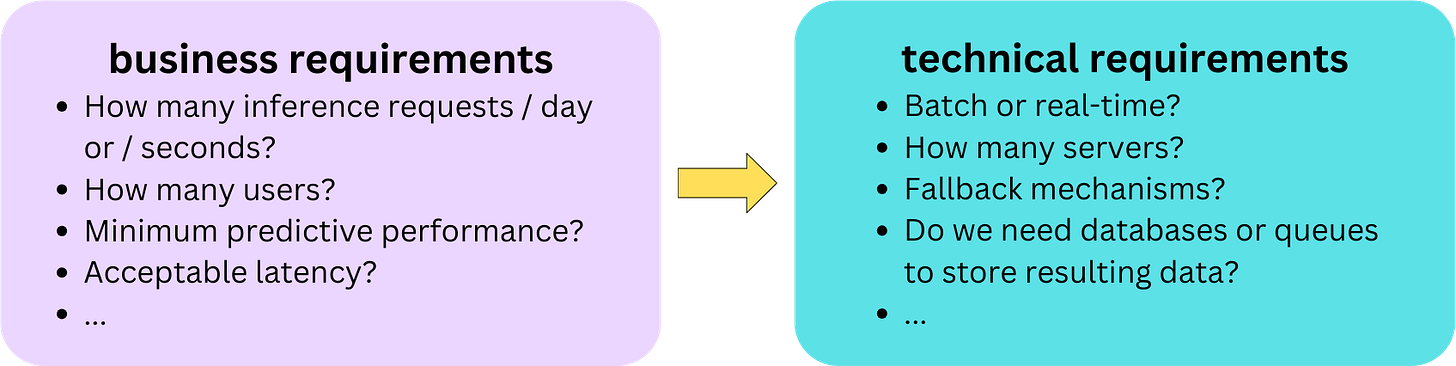

Architect the Solution: Get the business requirements, and translate them into technical requirements

That is the time to dig into the details and refresh your System Design skills!

Get the business requirements:

How many inference requests/day or / seconds?

How many users?

Minimum predictive performance?

Acceptable latency?

...

Translate into technical requirements:

Batch or real-time?

How many servers?

Fallback mechanisms?

Do we need databases or queues to store the resulting data?

...

This work is typically performed in collaboration with a product manager.

Strategic Planning: Setup the long-term vision

Establish success metrics: those are your North Star goals!

Establish milestones: what are the mandatory steps to reach the North Star goals?

Establish timelines: make sure those steps are feasible in a finite amount of time! Optimize for speed to market.

Establish head count: how many people and skills do you need to succeed in those steps?

Establish the required resources: what tools do you need to succeed?

More importantly, put everything together: establish a budget: how much money do you need to implement the solution in a timely fashion?

Typically, that work is done in collaboration with multiple teams and the leaders in charge of the budget.

Tactical Planning: How do you execute?

That is where Agile development strategies can be useful!

When does the model need to be developed, and by whom?

When do the data pipelines need to be built, and by whom?

When does the model need to be deployed, and by whom?

...

How do we coordinate all the different people across teams so the project moves smoothly without delays? This work is typically done with people from different engineering teams and skill sets.

Data Pipeline work: This is the fuel for your ML project

Based on the Data Infrastructure assessment completed so far, we can now mandate the required work on the data side.

Do you need to buy data?

Do you need a feature store?

How will you pipe the data into your training environment?

How will you pipe the data into your production environment?

Do you need to follow data protection regulations?

What data pipelines do you need to monitor your data and model in production?

...

This work is typically done with the Data Engineering team.

Build the training pipeline: Drop the notebooks!

In this Era of automation, we need to ensure that a model can be retrained or fine-tuned at will or on a schedule with a high level of control and then productionized at a simple click of a button. Of course, this level of automation is highly dependent on the current stage of project development (MVP, growth, maturity). However, at any stage, the training pipeline and the production pipeline should be highly coupled and developed by the same people or by people working closely together.

Build the Serving Pipeline: What actually provides value to the users!

The serving pipeline is an extension of the training pipeline and is driven by the business use case. How do you expose the model to your users? Do you need to build an API to access the data? What level of security do you require? Do you need a post-processing module to make model inference useful? This work is usually done in collaboration with machine learning engineers, MLOps engineers, backend (and sometimes frontend) engineers, and sometimes mobile engineers, depending on how the model is deployed.

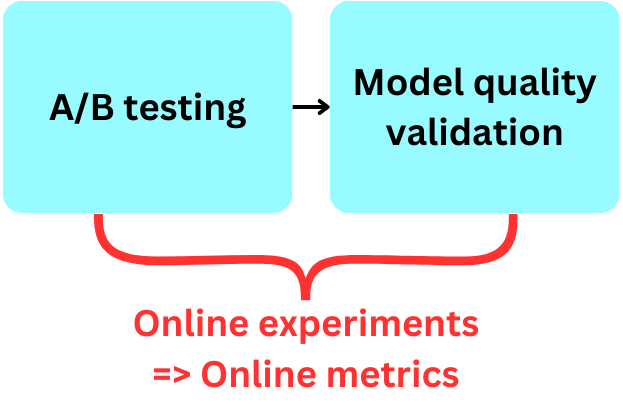

Test in production: Is your model actually good?

Testing in production is the only way you can determine if your model is providing the expected value. The pipelines to perform A/B testing and Canary deployments need to be carefully designed to quickly detect if a model is unexpectedly underperforming and remove it without harming the user experience or revenue. This is typically done in collaboration with MLOps engineers.

Monitoring in Production: What about if your model deteriorates tomorrow?

In production, everything can happen! Data or concepts can shift. Servers can break. The software can have undetected bugs. You need to monitor those different components and implement plans of action (automated or not) as fallback mechanisms in case something gets wrong. This work is typically done in collaboration with MLOps engineers.

Documenting: The most critical step nobody likes!

This part of the work might be the most underrated part of a project, along with writing unit tests.

Iterate: Return to step 1!

If more improvements make sense financially, you are just getting started! The field is moving so fast that by the time you're done with one iteration, you likely have tools and techniques that you haven't had the time to test or implement yet! You may need to invest in the next stage of development or simply update the current one.

This structure comes from my years of experience and has been a successful one so far. Let me know if you have a different flow!