How to Build an Image Retrieval System like Google Images

Machine Learning System Design

If I had to build an image retrieval system like Google Images, this is how I would start! I love starting from a business problem and logically deriving how it translates into a Machine Learning solution. Here is the outline:

What do we want to build?

The requirements

Framing the problem as a Machine Learning solution

The architectural system components

The indexing pipeline

The inference pipeline

The problem with that architecture

Evaluating the model

Online metrics

Offline metrics

Constructing the training data

With human labelers

With the ImageNet dataset and image classifiers

With data augmentation

With user-SERP interactions

Training the models

The image model

The loss function

The text model

What do we want to build?

The requirements

Let’s assume we want to build an image retrieval system similar to Google Images:

Google Images is a search engine where we input a text or an image query and are presented with a ranked list of related images. If the query is text, the returned images should be well described by that text; if the query is an image, the returned images should be the most similar ones. Results come back in well under a second, so let's fix 100 ms as the maximum latency. Google Images contains ~150B images, so we need a strategy to index them for fast retrieval. Around 1B people use Google Images daily (about 11.6K users per second on average), so the system needs to scale.
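To make the scale concrete, here is a back-of-the-envelope calculation. It assumes one query per daily user and a hypothetical 512-dimensional float32 embedding per image; neither number comes from the requirements above, so treat the output as an order-of-magnitude sketch rather than a sizing plan.

```python
# Back-of-the-envelope sizing for the requirements above.
# Assumptions (not from the requirements): one query per daily user,
# and a hypothetical 512-dimensional float32 embedding per image.

SECONDS_PER_DAY = 24 * 60 * 60          # 86,400 seconds
DAILY_USERS = 1_000_000_000             # ~1B users per day
NUM_IMAGES = 150_000_000_000            # ~150B indexed images
EMBEDDING_DIM = 512                     # hypothetical embedding size
BYTES_PER_FLOAT = 4                     # float32

# Average load if every daily user issues exactly one query.
avg_qps = DAILY_USERS / SECONDS_PER_DAY

# Raw storage for one embedding per image, before any compression.
index_bytes = NUM_IMAGES * EMBEDDING_DIM * BYTES_PER_FLOAT

print(f"average QPS: {avg_qps:,.0f}")
print(f"raw embedding storage: {index_bytes / 1e12:,.0f} TB")
```

Under these assumptions the average load is roughly 11.6K queries per second (peaks will be higher), and the raw vectors alone occupy about 307 TB, far more than a single machine can hold in memory. This is one way to see why the images need a dedicated indexing strategy for fast retrieval.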

Let’s summarize the requirements:

We can have text or image input as a query

The maximum latency is 100 ms

We need a system that can scale to ~150B images

We need a system that can scale to 1B users daily

Let’s assume that the results are not personalized to the specific user

Framing the problem as a Machine Learning solution

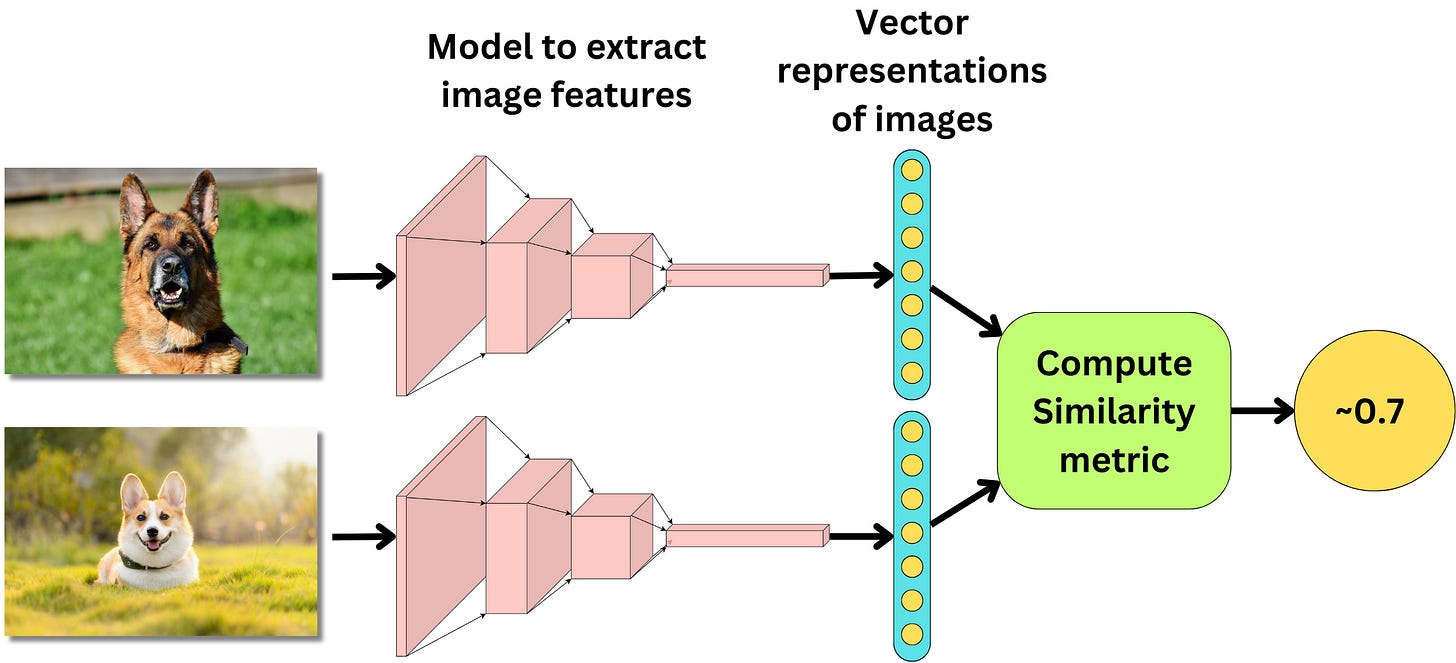

We can frame this problem as a ranking problem. We need a model that takes as input two images and returns a similarity score.

Using that model, we can then rank the images based on that similarity score.

A typical modeling approach is to use models that learn a vector representation (embedding) of each image and then compute a similarity metric between those vectors.
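As a minimal sketch of embedding-based ranking, the snippet below assumes the embeddings have already been computed and uses cosine similarity as the metric (the text does not fix a metric; cosine is one common choice). It scores every indexed image against a query vector and keeps the top k. The function names and toy vectors are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so a dot product equals cosine similarity."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.clip(norms, 1e-12, None)  # guard against zero vectors


def rank_by_cosine(query_emb: np.ndarray, image_embs: np.ndarray, k: int):
    """Return (indices, scores) of the k images most similar to the query."""
    q = l2_normalize(query_emb)
    db = l2_normalize(image_embs)
    scores = db @ q                             # cosine similarity for every image
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]   # unordered top-k, average linear time
    top = top[np.argsort(-scores[top])]         # sort only the k winners
    return top, scores[top]


# Toy embeddings (hypothetical); in practice they come from the trained image model.
image_embs = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
query = np.array([1.0, 0.05, 0.0])
idx, sc = rank_by_cosine(query, image_embs, k=2)
```

This brute-force scan compares the query with every stored vector, which is fine for a toy collection but would not fit the 100 ms budget at ~150B images; that is the motivation for a proper index. The same function works unchanged for a text query embedding once the text and image embeddings live in the same space.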

Once images are translated into vectors, it becomes easy to support text queries as well. We need an image model that extracts image features to learn a vector representation of each image, and a text model that extracts text features to learn a vector representation of each text input. The two models must be co-trained so that their vector representations are semantically aligned, meaning that a text input that correctly describes an image should have a high similarity score with that image: