How to train ChatGPT

This article has not been written by ChatGPT (although you will never know!)

What is it about ChatGPT that impresses us so much? GPT-3's output is no less impressive, so why do ChatGPT's outputs feel "better"? The main difference between ChatGPT and GPT-3 is the task each is trying to solve. GPT-3 is mostly trying to predict the next token based on the previous tokens, including the ones from the user's prompt, whereas ChatGPT tries to "follow the user's instruction helpfully and safely". ChatGPT is trying to align with the user's intention (alignment research). That is the reason InstructGPT (ChatGPT's sibling model) with 1.3B parameters gives responses that "feel" better than those of GPT-3 with 175B parameters.

The Training

ChatGPT is "simply" a fine-tuned GPT-3 model, trained with a surprisingly small amount of data! It is first fine-tuned with supervised learning and then further fine-tuned with reinforcement learning. In the case of InstructGPT, OpenAI hired 40 human labelers to generate the training data. Let's dig into it (the following numbers are the ones used for InstructGPT)!

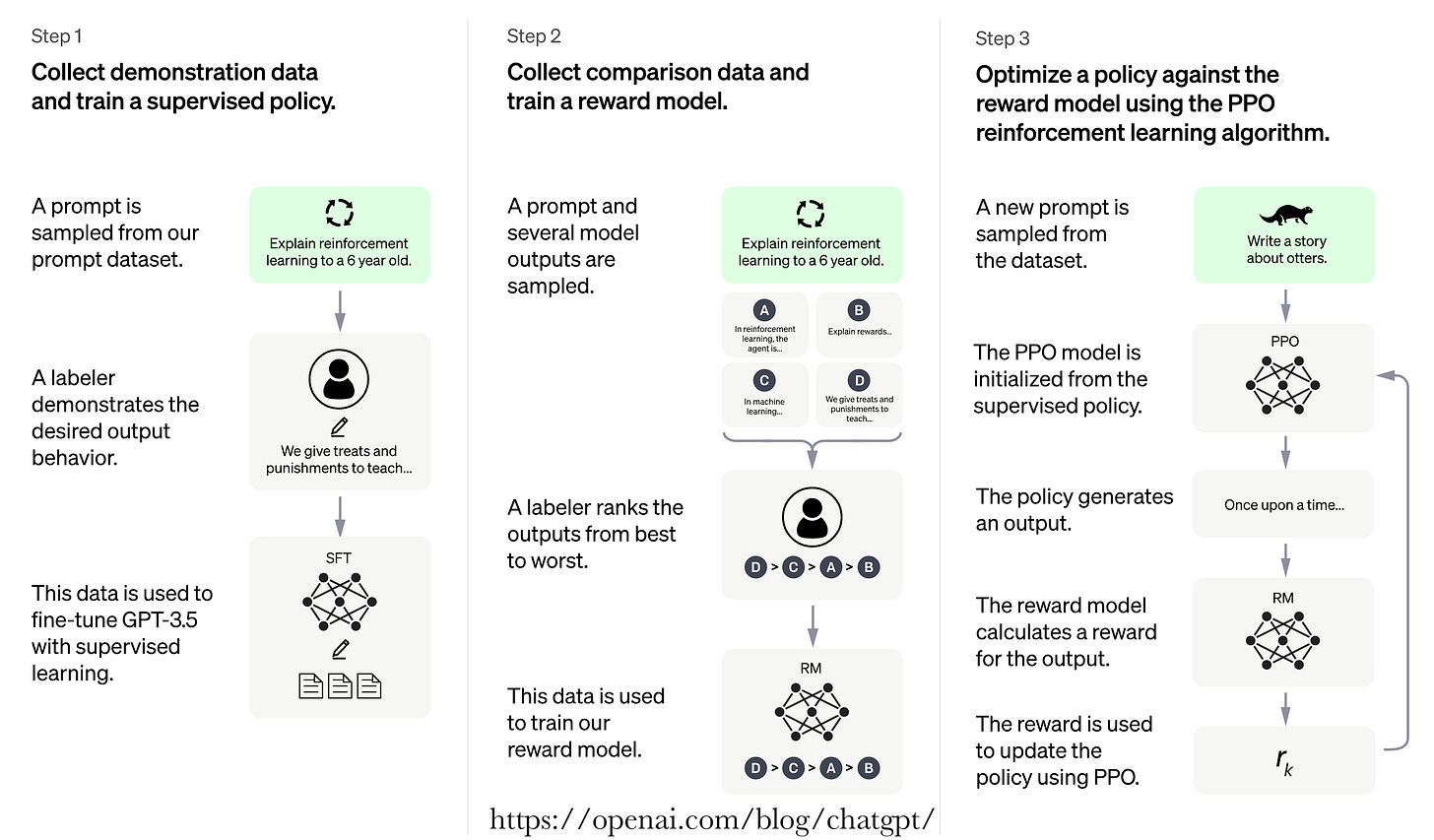

First, they started with a pre-trained GPT-3 model trained on a broad distribution of Internet data (GPT-3 article). They then sampled typical human prompts used for GPT-3, collected from the OpenAI website, and asked labelers and customers to write down the correct outputs. They fine-tuned the model in a supervised learning manner using 12,725 labeled data points.
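The supervised step described above boils down to minimizing the negative log-likelihood of the human-written output, token by token. Here is a minimal sketch in plain Python. The function name `sft_loss`, the toy probability tables, and the choice to mask out prompt tokens are assumptions made for illustration, not details confirmed by the InstructGPT article.

```python
import math


def sft_loss(token_probs, target_ids, loss_mask):
    """Average negative log-likelihood over the unmasked target tokens.

    token_probs[t][i] is the (hypothetical) model probability of token i
    at position t, target_ids[t] is the token the labeler actually wrote,
    and loss_mask[t] is 1 for output tokens and 0 for prompt tokens
    (masking the prompt is an assumption of this sketch).
    """
    total, count = 0.0, 0
    for probs, target, keep in zip(token_probs, target_ids, loss_mask):
        if keep:
            total += -math.log(probs[target])
            count += 1
    if count == 0:
        raise ValueError("loss_mask selects no tokens")
    return total / count


# Toy example: a vocabulary of 2 tokens and a sequence of 2 positions,
# where only the second position belongs to the labeled output.
toy_probs = [[0.5, 0.5], [0.25, 0.75]]
print(sft_loss(toy_probs, target_ids=[0, 1], loss_mask=[0, 1]))
```

The loss goes down as the model assigns more probability to the tokens the labelers wrote, which is all "supervised fine-tuning" means here.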

Then, they sampled human prompts and generated multiple outputs from the model for each prompt. A labeler was then asked to rank those outputs. The resulting data was used to train a Reward model (https://arxiv.org/pdf/2009.01325.pdf) with 33,207 prompts and ~10 times more training samples, obtained by using different combinations of the ranked outputs.
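To see how one ranking turns into many training samples, here is a small sketch. It expands a ranked list into (preferred, rejected) pairs and scores one pair with the pairwise loss -log(sigmoid(r_preferred - r_rejected)) used for reward models trained on human comparisons. The function names and the toy outputs are hypothetical, and the rewards are plain numbers standing in for the scores of a real Reward model.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Expand a ranking (best output first) into (preferred, rejected) pairs.

    combinations() keeps the input order, so the first element of each
    pair is always the one the labeler ranked higher.
    """
    return list(combinations(ranked_outputs, 2))


def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), written in a stable form."""
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Four ranked outputs for a single prompt give six comparison pairs.
pairs = ranking_to_pairs(["best", "good", "fair", "worst"])
print(len(pairs), pairs[0])

# The loss is small when the preferred output gets the higher reward.
print(pairwise_loss(2.0, -1.0), pairwise_loss(-1.0, 2.0))
```

Since K ranked outputs yield K(K-1)/2 pairs, a few tens of thousands of prompts can easily produce several times as many training samples.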

They then sampled more human prompts and used them to fine-tune the supervised fine-tuned model with the Proximal Policy Optimization (PPO) algorithm (https://arxiv.org/pdf/1707.06347.pdf), a Reinforcement Learning algorithm. The prompt is fed to the PPO model, which generates a response; the Reward model generates a reward value for that response; and the PPO model is iteratively fine-tuned using the rewards and the prompts, with 31,144 prompts in total.
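The heart of PPO is its clipped surrogate objective, which stops a single update from moving the model too far from the version that generated the response. The sketch below computes that objective for one sample. The function name is hypothetical, `clip_eps=0.2` is the value suggested in the PPO paper rather than one taken from InstructGPT, and the `advantage` is assumed to be given (for instance, the reward minus some baseline), which glosses over how it is estimated in practice.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """Clipped surrogate objective for one sample (to be maximized).

    logp_new / logp_old are the log-probabilities of the generated
    response under the current model and under the model that produced
    it. The probability ratio is clipped to [1 - clip_eps, 1 + clip_eps].
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# A response that became 1.5x more likely and has a positive advantage:
# the objective is capped, so there is no incentive to push further.
print(ppo_clipped_objective(math.log(1.5), 0.0, advantage=1.0))
```

Taking the minimum of the clipped and unclipped terms makes the objective a pessimistic bound: the model gains nothing by over-committing to a well-rewarded response, but is still fully penalized for making a badly rewarded one more likely.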

ChatGPT vs GPT-3

ChatGPT is simply a GPT-3 model fine-tuned on human-generated data with a reward mechanism to penalize responses that feel wrong to human labelers. There are a few advantages that emerged from that alignment training process:

ChatGPT provides answers that are preferred over the ones generated by GPT-3

ChatGPT generates truthful and informative answers twice as often as GPT-3

ChatGPT generates language that is less toxic than GPT-3's. However, ChatGPT is still just as biased!

ChatGPT adapts better to different learning tasks and generalizes better to unseen data or to instructions very different from the ones found in the training data. For example, ChatGPT can answer in different languages or write code efficiently, even though most of the training data is in natural English language.

For decades, language models were trained to predict sequences of words, whereas the key seems to be training them to align with the user's intent. It seems conceptually obvious, but it is the first time that an alignment process has been successfully applied to a language model of this scale.

All the results presented in this post actually come from the InstructGPT article (InstructGPT article), and it is a safe assumption that those results carry over to ChatGPT as well.

Language Models are Few-Shot Learners by Tom B. Brown et al: https://arxiv.org/pdf/2005.14165.pdf

Training language models to follow instructions with human feedback by Long Ouyang et al: https://arxiv.org/pdf/2203.02155.pdf

Learning to summarize from human feedback by Nisan Stiennon et al: https://arxiv.org/pdf/2009.01325.pdf

Proximal Policy Optimization Algorithms by John Schulman et al: https://arxiv.org/pdf/1707.06347.pdf