We continue our series of videos on Introduction to LangChain. Today we look at loading files and summarizing text data with LangChain. LangChain has a wide variety of modules for loading many types of data, which is fundamental if you want to build software applications on top of LLMs.

Summarizing text with the latest LLMs is now straightforward, and LangChain automates several strategies for summarizing large amounts of text.

We are going to go through a short tutorial that loads data with different modules, and then I will show you how to summarize the data you just loaded. Let's dive into it!

Loading Data

First, I install a few packages needed for this tutorial:

langchain: the LangChain framework itself

openai: the OpenAI Python client

tqdm: a library to show the progress of an action (downloading, training, ...)

jq: Python bindings for jq, a lightweight and flexible JSON processor

unstructured: a library that prepares raw documents for downstream ML tasks

pypdf: a pure-Python PDF library capable of splitting, merging, cropping, and transforming PDF files

tiktoken: a fast open-source tokenizer by OpenAI

pip install langchain openai tqdm jq unstructured pypdf tiktoken

In this tutorial, I used the following files, all freely available:

The PDF version of The Elements of Statistical Learning

CSV files of Global Daily Weather Data

HTML pages of the Deep Learning course by Yann LeCun & Alfredo Canziani

Markdown files of the Deep Learning course repository

Load a CSV file

For example, to load a CSV file we just need to run the following:

from langchain.document_loaders.csv_loader import CSVLoader

file_path = ...

csv_loader = CSVLoader(file_path=file_path)
weather_data = csv_loader.load()

The resulting data is a list of documents, where each document represents one row of the CSV file.
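As far as I understand, CSVLoader builds each document's text from one `column: value` line per column and records the file and row index in the metadata. The stdlib-only sketch below mimics that behavior so you can see the shape of the result without an API key; the `Doc` class, the `csv_to_docs` function, and the sample rows are hypothetical illustrations, not LangChain's own API or the weather dataset.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain Document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def csv_to_docs(text: str, source: str) -> list:
    """Turn each CSV row into one Doc made of 'column: value' lines."""
    reader = csv.DictReader(io.StringIO(text))
    docs = []
    for i, row in enumerate(reader):
        content = "\n".join(f"{k.strip()}: {v.strip()}" for k, v in row.items())
        docs.append(Doc(page_content=content, metadata={"source": source, "row": i}))
    return docs


# Made-up sample rows (not taken from the weather dataset).
sample = "station,date,avg_temp_c\nParis,2023-01-01,5.1\nOslo,2023-01-01,-3.4\n"
docs = csv_to_docs(sample, source="sample.csv")
print(len(docs))  # 2
print(docs[1].page_content)
```

One document per row keeps each record small and self-contained, which is convenient for retrieval, but it also means a large CSV produces a very long list of documents.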

Load a PDF file

We can also load PDF files, even a whole book. The load_and_split method loads the text and splits it into smaller chunks:

from langchain.document_loaders import PyPDFLoader

file_path = ...

sl_loader = PyPDFLoader(file_path=file_path)
sl_data = sl_loader.load_and_split()

sl_data[0]

We can customize the way we partition the data:

from langchain.text_splitter import (
    CharacterTextSplitter,
    RecursiveCharacterTextSplitter,
)

# Splits on a single separator ("\n\n" by default);
# chunk_size is measured in characters by default
splitter1 = CharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=0,
)

# Tries the separators ["\n\n", "\n", " ", ""] in order
# until the chunks are small enough
splitter2 = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=0,
)

sl_data1 = sl_loader.load_and_split(text_splitter=splitter1)
sl_data2 = sl_loader.load_and_split(text_splitter=splitter2)

Loading directories of mixed data

We can also load a whole directory of files with one function:

from langchain.document_loaders import DirectoryLoader

folder_path = ...

mixed_loader = DirectoryLoader(
    path=folder_path,
    use_multithreading=True,  # load files in parallel threads
    show_progress=True,  # progress bar, requires tqdm
)

mixed_data = mixed_loader.load_and_split()

Summarizing text data

The “Stuff” chain

With LangChain, summarizing text of any length is not difficult. There are a few strategies for summarizing text with an LLM.

If the whole text fits in the model's context window, you can simply feed the raw data to the LLM in a single call and get the result. LangChain refers to that strategy as the “stuff” chain type.

from langchain.chains.summarize import load_summarize_chain
from langchain.chat_models import ChatOpenAI

# Reads the OPENAI_API_KEY environment variable
llm = ChatOpenAI()

chain = load_summarize_chain(
    llm=llm,
    chain_type='stuff'
)

chain.run(sl_data[:2])

Unfortunately, the stuff chain breaks if the data is too large, because the number of tokens sent to the LLM exceeds the context window:

chain.run(sl_data[:20])

The stuff chain uses a specific prompt template:

chain.llm_chain.prompt.template

Write a concise summary of the following:

"{text}"

CONCISE SUMMARY:
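To make the failure mode concrete, here is a plain-Python sketch of what the stuff strategy boils down to: fill that single prompt template with all the chunks and make one LLM call. This is not LangChain's implementation. The `fake_llm` stand-in, the 4096-token window, and the rough 4-characters-per-token estimate are assumptions for illustration; a real setup would count tokens with a tokenizer such as tiktoken and use the model's actual context size.

```python
from typing import Callable, List

# Mirrors the template printed above (blank lines simplified).
STUFF_TEMPLATE = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'


def rough_token_count(text: str) -> int:
    """Crude estimate of ~4 characters per token (an assumption, not tiktoken)."""
    return max(1, len(text) // 4)


def stuff_summarize(chunks: List[str], llm: Callable[[str], str],
                    context_window: int = 4096) -> str:
    """'Stuff' strategy: put every chunk into one prompt and call the LLM once."""
    prompt = STUFF_TEMPLATE.format(text="\n\n".join(chunks))
    n_tokens = rough_token_count(prompt)
    if n_tokens > context_window:
        # This is the situation in which the real stuff chain fails.
        raise ValueError(f"prompt is ~{n_tokens} tokens, window is {context_window}")
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a chat model: reports the prompt length."""
    return f"summary of a {len(prompt)}-character prompt"


print(stuff_summarize(["page one text", "page two text"], fake_llm))
```

The upside of this strategy is a single call in which the model sees all the text at once; the downside is the hard size limit checked above.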

And we can use custom prompts:

from langchain.prompts import PromptTemplate

template = """
Write a concise summary of the following in Spanish:

"{text}"

CONCISE SUMMARY IN SPANISH:
"""

prompt = PromptTemplate.from_template(template)

# chain_type defaults to 'stuff'
chain = load_summarize_chain(
    llm=llm,
    prompt=prompt
)

chain.run(sl_data[:2])

The “Map-reduce” chain

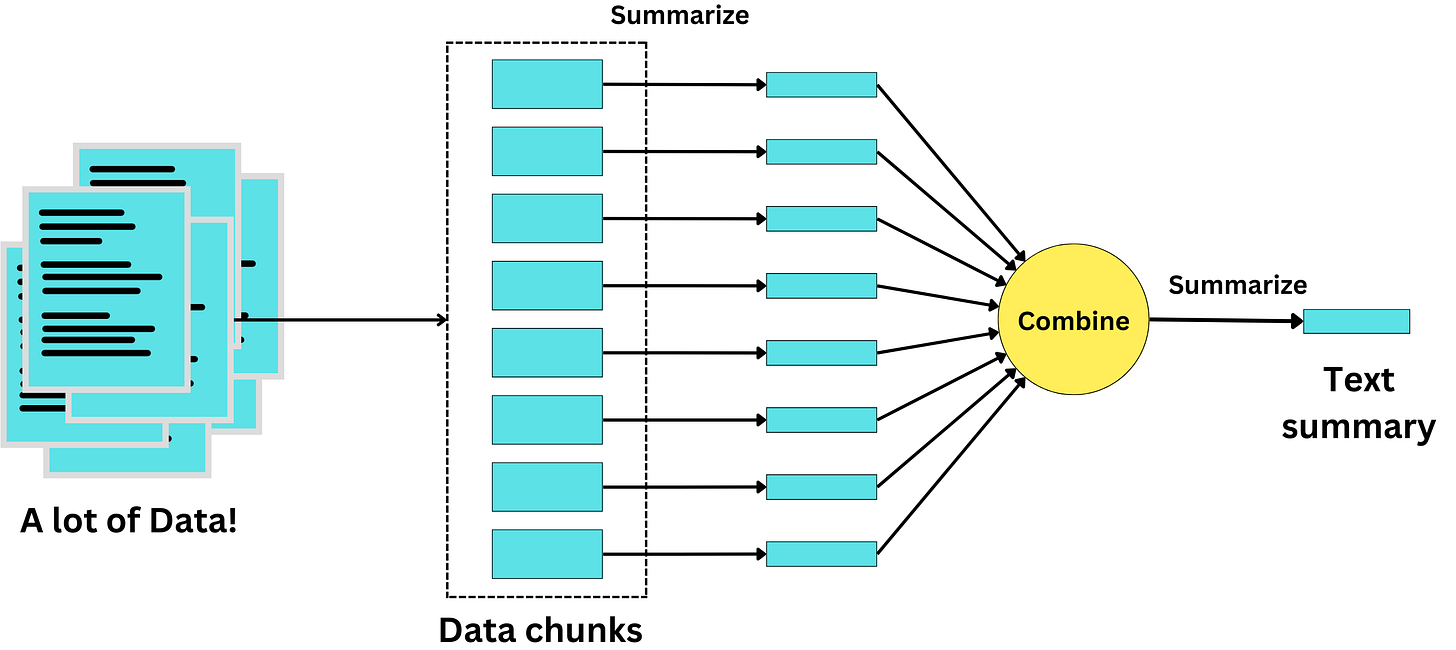

When we want to summarize a lot of data, we can use the map-reduce strategy: we break the data into multiple chunks, summarize each chunk (the "map" step), and then summarize the concatenated summaries in a final "combine" step.

In LangChain, we just run:

chain = load_summarize_chain(
    llm=llm,
    chain_type='map_reduce',
)

chain.run(sl_data[:20])

The Map-reduce chain has a prompt for the map step:

chain.llm_chain.prompt.template

Write a concise summary of the following:

"{text}"

CONCISE SUMMARY:

And a prompt for the combine step:

chain.combine_document_chain.llm_chain.prompt.template

Write a concise summary of the following:

"{text}"

CONCISE SUMMARY:
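The two prompts above are all the map-reduce strategy needs. The plain-Python sketch below shows the flow under simplifying assumptions: `map_reduce_summarize` and `fake_llm` are hypothetical names, and the LLM is any function from prompt to text. As far as I know, LangChain's version can also "collapse" intermediate summaries when their combined length is too large; this sketch leaves that step out.

```python
from typing import Callable, List

# Same wording as the map and combine prompts printed above (blank lines simplified).
MAP_TEMPLATE = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'
COMBINE_TEMPLATE = MAP_TEMPLATE


def map_reduce_summarize(chunks: List[str], llm: Callable[[str], str]) -> str:
    # Map step: summarize every chunk independently (these calls could run in parallel).
    partial = [llm(MAP_TEMPLATE.format(text=chunk)) for chunk in chunks]
    # Combine step: summarize the concatenation of the partial summaries.
    return llm(COMBINE_TEMPLATE.format(text="\n\n".join(partial)))


calls = []


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a chat model: records the prompt, returns a tag."""
    calls.append(prompt)
    return f"summary-{len(calls)}"


result = map_reduce_summarize(["chunk A", "chunk B", "chunk C"], fake_llm)
print(result)      # summary-4
print(len(calls))  # 4
```

With n chunks the strategy makes n + 1 LLM calls, and since the map calls do not depend on each other, they can be parallelized.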

We can change the behavior of this chain by using custom prompts:

map_template = """The following is a set of documents

{text}

Based on this list of docs, please identify the main themes

Helpful Answer:"""

combine_template = """The following is a set of summaries:

{text}

Take these and distill it into a final, consolidated list of the main themes.

Return that list as a comma separated list.

Helpful Answer:"""

map_prompt = PromptTemplate.from_template(map_template)

combine_prompt = PromptTemplate.from_template(combine_template)

chain = load_summarize_chain(

llm=llm,

chain_type='map_reduce',

map_prompt=map_prompt,

combine_prompt=combine_prompt,

verbose=True

)

chain.run(sl_data[:20])The “Refine“ chain

Another strategy to summarize text data is the Refine chain. We begin the summary with the first chunk and refine it little by little with each of the following chunks.
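Here is a plain-Python sketch of that loop, under the same simplifying assumptions as before: `refine_summarize` and `fake_llm` are hypothetical names, the LLM is any function from prompt to text, and the refine prompt is a shortened, hypothetical paraphrase rather than LangChain's exact wording.

```python
from typing import Callable, List

INITIAL_TEMPLATE = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'
# Shortened, hypothetical refine prompt.
REFINE_TEMPLATE = (
    "Your job is to produce a final summary.\n"
    "Existing summary: {existing_answer}\n"
    "Refine it (only if needed) with this new context:\n{text}\n"
    "Refined summary:"
)


def refine_summarize(chunks: List[str], llm: Callable[[str], str]) -> str:
    # Assumes at least one chunk. The first chunk produces the initial summary.
    summary = llm(INITIAL_TEMPLATE.format(text=chunks[0]))
    # Each remaining chunk gets one sequential call that rewrites the running summary.
    for chunk in chunks[1:]:
        summary = llm(REFINE_TEMPLATE.format(existing_answer=summary, text=chunk))
    return summary


calls = []


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a chat model: records the prompt, returns a tag."""
    calls.append(prompt)
    return f"v{len(calls)}"


print(refine_summarize(["chunk A", "chunk B", "chunk C"], fake_llm))  # v3
```

Unlike map-reduce, these calls cannot run in parallel because each one depends on the previous summary, and a single malformed response is carried into every later step.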

In LangChain we just run the following:

chain = load_summarize_chain(
    llm=llm,
    chain_type='refine',
    verbose=True
)

chain.run(sl_data[:20])

This chain tends to fail because the LLM sometimes misunderstands the refine prompt. The chain uses an initial prompt for the first summary:

chain.initial_llm_chain.prompt.template

Write a concise summary of the following:

"{text}"

CONCISE SUMMARY:

And a prompt for the refine step:

chain.refine_llm_chain.prompt.template

Your job is to produce a final summary

We have provided an existing summary up to a certain point: {existing_answer}

We have the opportunity to refine the existing summary(only if needed) with some more context below.

------------

{text}

------------

Given the new context, refine the original summary

If the context isn't useful, return the original summary.

But the LLM sometimes fails to execute that refine step correctly, which breaks the whole chain. We can create custom prompts to modify the behavior of the chain:

initial_template = """

Extract the most relevant themes from the following:

{text}

THEMES:"""

refine_template = """

Your job is to extract the most relevant themes

We have provided an existing list of themes up to a certain point: {existing_answer}

We have the opportunity to refine the existing list(only if needed) with some more context below.

------------

{text}

------------

Given the new context, refine the original list

If the context isn't useful, return the original list and ONLY the original list.

Return that list as a comma separated list.

LIST:"""

initial_prompt = PromptTemplate.from_template(initial_template)

refine_prompt = PromptTemplate.from_template(refine_template)

chain = load_summarize_chain(

llm=llm,

chain_type='refine',

question_prompt=initial_prompt,

refine_prompt=refine_prompt,

verbose=True

)

chain.run(sl_data[:20])This concludes our introduction to Loading Data and Summary Strategies. I hope you had some fun. We will continue our introduction to Langchain in the following video. See you there!