The AiEdge+: Algorithms for Hyperparameter Optimization

Going beyond grid search

For me, training a model is as simple as clicking a button! I have spent many years automating my model development, and I really think data scientists and machine learning engineers should not waste time rewriting the same code over and over to develop different (but similar) models. Today we dig into hyperparameter optimization techniques that go beyond a simple grid search. We cover:

Bayesian optimization

Optimization with Reinforcement Learning

Learn more about Hyperparameter Optimization

Bayesian optimization

When you reframe the business problem as a machine learning problem, you should be able to establish an effective experiment design, generate relevant features, and fully automate the development of the model by following basic optimization principles.

When it comes to automation, you should think about:

Feature selection

Imputation

Feature transformations

Hyperparameter tuning

All of these can be easily automated. For hyperparameter tuning, for example, a typical approach is Bayesian Optimization and HyperBand (BOHB). The Bayesian optimization part usually relies on the Tree-structured Parzen Estimator (TPE) method:

First, select a few sets of hyperparameters and compute the performance metric for each one. This gives you a prior on how the performance metric is distributed across the hyperparameter space.

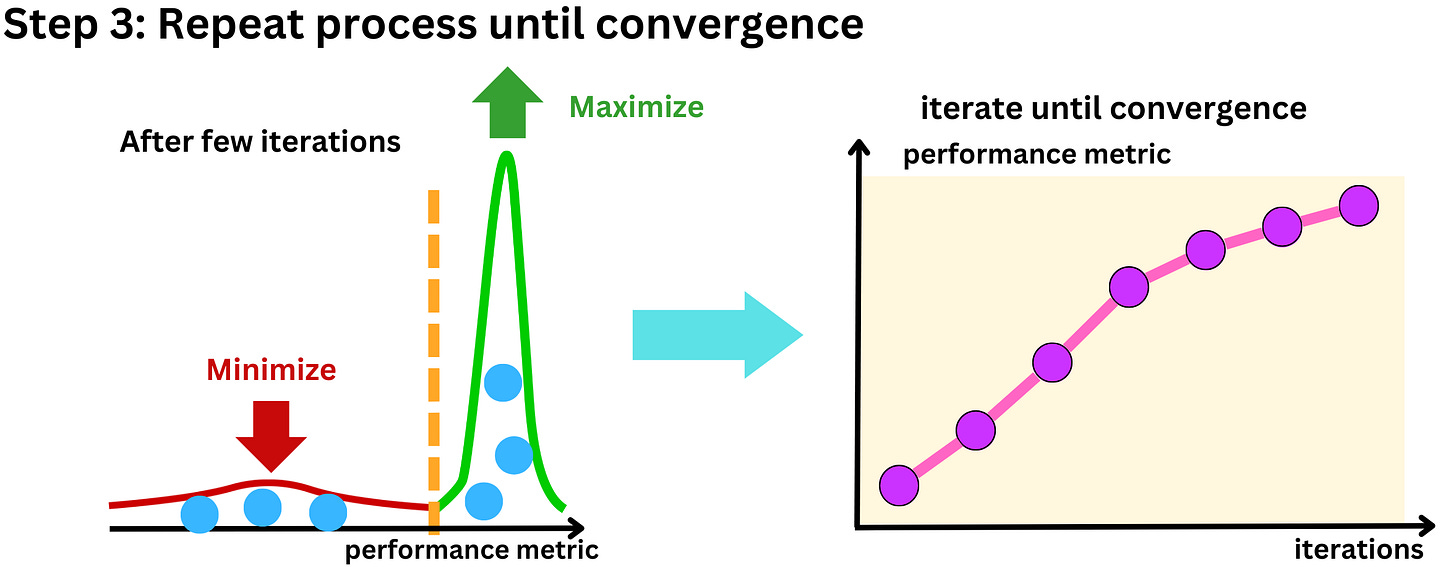

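Some sets will perform well and some will perform poorly! So we split the samples into "good" and "bad" hyperparameter sets (say, the best-scoring few versus the rest). Intuitively, we want to search closer to the good ones. Typically, we fit one kernel density estimator to the "good" sets and another to the "bad" sets. We then sample new candidate hyperparameter sets from the "good" distribution and keep the candidate that maximizes the ratio of the "good" density to the "bad" density. In other words, we want the candidate that is likely under the good hyperparameters and unlikely under the bad ones.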
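The selection rule above can be stated compactly. The formulation below is the standard TPE one, assuming $x$ is a hyperparameter set and $y$ is a loss to minimize. $y^*$ is the threshold that separates the "good" fraction $\gamma$ of observations from the rest, $\ell(x)$ is the "good" density and $g(x)$ is the "bad" one:

$$
\ell(x) = p(x \mid y < y^*), \qquad g(x) = p(x \mid y \ge y^*), \qquad \gamma = p(y < y^*)
$$

$$
\mathrm{EI}_{y^*}(x) \;\propto\; \left( \gamma + \frac{g(x)}{\ell(x)} \, (1 - \gamma) \right)^{-1}
$$

The right-hand side grows as $\ell(x)/g(x)$ grows. Maximizing the expected improvement therefore amounts to picking the candidate with the highest "good" to "bad" density ratio. For a metric you want to maximize, such as accuracy, take $y$ to be its negative.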

We iterate this process until convergence or until we blow our AWS budget!
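Here is a minimal standard-library sketch of the loop above, a simplified one-dimensional TPE. It makes several assumptions:

- There is a single hyperparameter, the log10 of a learning rate.
- The kernel bandwidth is fixed.
- `objective` is a made-up stand-in for training and validating a model.

Real TPE implementations, such as those in Hyperopt and Optuna, handle many dimensions, categorical choices and bandwidth heuristics. Treat this as an illustration of the idea, not a reference implementation.

```python
import math
import random


def objective(log10_lr: float) -> float:
    """Hypothetical validation loss, lowest at log10_lr = -3 (lr = 1e-3).

    Stands in for "train the model and compute the validation metric".
    """
    return (log10_lr + 3.0) ** 2 + 0.1


def gaussian_kde(points, bandwidth):
    """Return a 1-D Gaussian kernel density estimate fitted on `points`."""
    norm = 1.0 / (len(points) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - p) / bandwidth) ** 2) for p in points)

    return density


def tpe_minimize(f, low, high, n_init=10, n_iter=40, gamma=0.25,
                 n_candidates=24, bandwidth=0.5, seed=0):
    """Simplified 1-D Tree-structured Parzen Estimator loop (minimization)."""
    assert n_init >= 2 and 0.0 < gamma < 1.0, "need both 'good' and 'bad' points"
    rng = random.Random(seed)

    # 1) Evaluate a few random hyperparameter values to get a first picture.
    history = [(x, f(x)) for x in (rng.uniform(low, high) for _ in range(n_init))]

    for _ in range(n_iter):
        # 2) Split observations: the best `gamma` fraction is "good", the rest "bad".
        history.sort(key=lambda pair: pair[1])
        n_good = max(1, int(gamma * len(history)))
        good = [x for x, _ in history[:n_good]]
        bad = [x for x, _ in history[n_good:]]
        l, g = gaussian_kde(good, bandwidth), gaussian_kde(bad, bandwidth)

        # 3) Sample candidates from the "good" density l(x): pick a good point,
        #    add Gaussian noise with the kernel bandwidth, clip to the bounds.
        candidates = [min(high, max(low, rng.gauss(rng.choice(good), bandwidth)))
                      for _ in range(n_candidates)]

        # 4) Keep the candidate with the highest l(x) / g(x) and evaluate it.
        x_next = max(candidates, key=lambda x: l(x) / max(g(x), 1e-300))
        history.append((x_next, f(x_next)))

    return min(history, key=lambda pair: pair[1])


best_x, best_loss = tpe_minimize(objective, low=-6.0, high=0.0)
print(f"best log10(lr) = {best_x:.2f}, loss = {best_loss:.3f}")
```

The fixed seed makes runs reproducible. In practice, `n_iter` is where "until convergence or until the budget runs out" gets decided.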

A naive search can be very expensive in time and money, but there are strategies to improve search efficiency. Hyperband is a method that avoids allocating too much compute to obviously bad candidates. Some hyperparameter sets are clearly bad after a few epochs, so why train the model for 100 more? The idea is that we can quickly learn which region of the hyperparameter space to focus on by training the model on a fraction of the data or for a fraction of the epochs. For example, you could proceed as follows:

Select a few hyperparameter candidates and train the model on ⅛ of the data.

Discard half of the candidates (the worst ones) and sample new candidates around the better ones using Bayesian optimization.

Now train the model on ¼ of the data with those candidates to find the best ones among them.

Iterate by increasing the data size, discarding the worst candidates, and finding better ones using BO.
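The halving schedule in these steps is essentially successive halving, the subroutine Hyperband is built on. Here is a minimal standard-library sketch of just that schedule. It assumes eight candidates and the ⅛ → ¼ → ½ → full-data budgets from the list. It deliberately omits the step where Bayesian optimization proposes new candidates. `validation_loss` and its `true_loss` field are hypothetical stand-ins for real training.

```python
import random


def validation_loss(config, data_fraction, rng):
    """Hypothetical stand-in for "train on `data_fraction` of the data, then validate".

    Each config carries a made-up true loss; smaller training sets give
    noisier estimates of it.
    """
    return config["true_loss"] + rng.gauss(0.0, 0.02 * (1.0 - data_fraction))


def successive_halving(configs, fractions=(1 / 8, 1 / 4, 1 / 2, 1.0), seed=0):
    """Train all survivors at each data fraction, keep the better half, repeat."""
    rng = random.Random(seed)
    survivors = list(configs)
    cost = 0.0  # compute spent, in units of "one training run on the full data"
    for fraction in fractions:
        # sorted() calls the key once per config, i.e. one training run each.
        scored = sorted(survivors, key=lambda c: validation_loss(c, fraction, rng))
        cost += fraction * len(survivors)
        # Discard the worst half (keep at least one) before moving to more data.
        survivors = scored[: max(1, len(scored) // 2)]
    return survivors[0], cost


candidates = [{"name": f"config_{i}", "true_loss": loss}
              for i, loss in enumerate([0.9, 0.5, 0.3, 0.8, 0.1, 0.7, 0.6, 0.4])]
best, cost = successive_halving(candidates)
print(best["name"], cost)  # cost == 4.0: 8 x 1/8 + 4 x 1/4 + 2 x 1/2 + 1 x 1
```

The budget arithmetic shows the benefit. Eight candidates cost 8×⅛ + 4×¼ + 2×½ + 1×1 = 4 full-data training runs, half of what training all eight on the full data would cost. Full Hyperband runs several such brackets, each trading off the number of candidates against the starting budget.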

This was a simplified version of BOHB. Of course, I am not suggesting you write these algorithms yourself; here are some hyperparameter optimization packages you can use:

Scikit-Optimize: https://scikit-optimize.github.io/stable/index.html

Optuna: https://optuna.org/

Hyperopt: http://hyperopt.github.io/hyperopt/

Keras tuner: https://keras.io/keras_tuner/

BayesianOptimization: https://github.com/fmfn/BayesianOptimization

Metric Optimization Engine (MOE): https://github.com/Yelp/MOE

Spearmint: https://github.com/HIPS/Spearmint

SigOpt: https://sigopt.com/

Optimization with Reinforcement Learning

Reinforcement Learning tends to be harder to approach than typical supervised learning! It is not widely used for hyperparameter optimization, but it is a great framework for it. The idea is to follow a Markov chain of states with better and better model performance.
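To make the idea concrete, here is a minimal sketch of one common way RL is applied to tuning: a policy-gradient (REINFORCE) controller, the approach popularized by neural architecture search. The controller works as follows:

- A policy samples a configuration.
- The validation score serves as the reward.
- The policy is nudged toward configurations that beat a running baseline.

This is a swapped-in illustration of the general idea, not necessarily the formulation developed in this section. Its simplest version treats each trial as a one-step episode (a bandit problem) rather than a longer chain of states. `SEARCH_SPACE` and `reward` are hypothetical.

```python
import math
import random

# Hypothetical discrete search space: the controller picks one value per entry.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 1e-3, 1e-2, 1e-1],
    "num_layers": [1, 2, 3, 4],
}


def reward(config):
    """Hypothetical validation score (higher is better), best at lr=1e-2, 3 layers."""
    lr_gap = abs(math.log10(config["learning_rate"]) + 2.0)
    layer_gap = abs(config["num_layers"] - 3)
    return 1.0 - 0.2 * lr_gap - 0.1 * layer_gap


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_search(n_steps=2000, step_size=0.5, seed=0):
    """REINFORCE controller: sample a config, score it, reinforce what beat the baseline."""
    rng = random.Random(seed)
    # The policy: one vector of logits per hyperparameter (uniform at first).
    logits = {name: [0.0] * len(values) for name, values in SEARCH_SPACE.items()}
    baseline = None

    for _ in range(n_steps):
        # Action: sample one value index per hyperparameter from the current policy.
        action = {name: rng.choices(range(len(values)), weights=softmax(logits[name]))[0]
                  for name, values in SEARCH_SPACE.items()}
        r = reward({name: SEARCH_SPACE[name][i] for name, i in action.items()})

        # Advantage = reward minus a moving-average baseline (reduces variance).
        baseline = r if baseline is None else baseline
        advantage = r - baseline
        baseline = 0.9 * baseline + 0.1 * r

        # Policy-gradient step: d log softmax(z)[i] / d z_j = 1[j == i] - p_j.
        for name, i in action.items():
            probs = softmax(logits[name])
            for j, p in enumerate(probs):
                logits[name][j] += step_size * advantage * ((1.0 if j == i else 0.0) - p)

    # Report the most likely value of each hyperparameter under the learned policy.
    return {name: values[max(range(len(values)), key=lambda j: logits[name][j])]
            for name, values in SEARCH_SPACE.items()}


best_config = reinforce_search()
print(best_config, reward(best_config))
```

The baseline matters. Without it, every sampled configuration has a positive reward and gets reinforced. With it, only configurations that beat recent performance do, which is what moves the policy toward better and better regions.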