The AiEdge+: Embeddings, the Superpower of Deep Learning

Representation learning

Today we dig into a special ability of Deep Learning: learning latent representations of data, commonly called Embeddings. These embeddings can be used as features in other models or as a way to perform dimensionality reduction. We are going to look at:

The different kinds of Embeddings

The Hashing trick: how to build a recommender engine for billions of users

GitHub repositories, articles and YouTube videos about Embeddings

The different kinds of Embeddings

I think Deep Learning's strength lies in its ability to efficiently model different types of data at once. Nowadays, it is trivial to build models from multimodal datasets. The concept is not new, and it was not impossible before the advent of Deep Learning, but feature processing and modeling were much more complex, and the resulting models performed much worse!

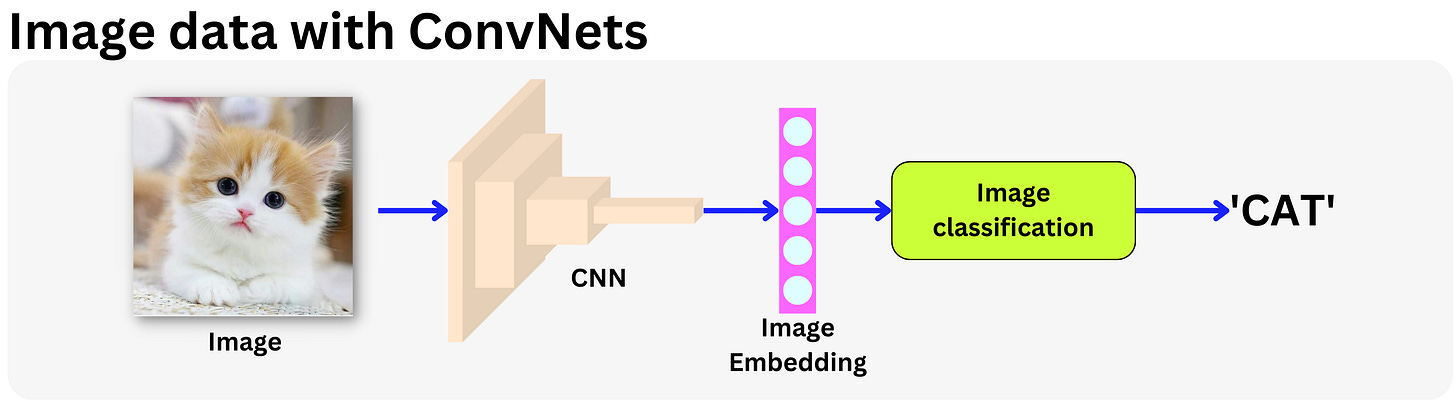

One key aspect of this success is the concept of Embedding: a lower-dimensional representation of the data. This makes computations more efficient and mitigates the curse of dimensionality, yielding representations that are less prone to overfitting. In practice, an embedding is just a vector living in a "latent" or "semantic" space.
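To make "just a vector" concrete, here is a minimal NumPy sketch of an embedding table, assuming a categorical input with 10,000 possible values and 16-dimensional vectors (both sizes are arbitrary). The names `embedding_matrix`, `embed` and `cosine_similarity` are hypothetical. In a trained model the matrix would be learned; here it is random, so the similarity value is meaningless and only the mechanics are shown.

```python
import numpy as np

rng = np.random.default_rng(0)

num_categories = 10_000   # hypothetical: vocabulary size or number of distinct IDs
embedding_dim = 16        # hypothetical: size of the dense latent vector

# One row per category value. In a real model these weights are learned by
# gradient descent; here they are random placeholders.
embedding_matrix = rng.normal(scale=0.1, size=(num_categories, embedding_dim))

def embed(ids):
    """Look up the dense vectors for a batch of integer IDs."""
    return embedding_matrix[np.asarray(ids)]

# A lookup is equivalent to multiplying a one-hot vector by the matrix,
# but indexing avoids building the 10,000-dimensional one-hot vector.
one_hot = np.zeros(num_categories)
one_hot[42] = 1.0
assert np.allclose(one_hot @ embedding_matrix, embed([42])[0])

def cosine_similarity(u, v):
    """Similarity of two vectors in the latent space (1.0 = same direction)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

vectors = embed([42, 7])
print(vectors.shape)  # (2, 16)
print(cosine_similarity(vectors[0], vectors[1]))
```

The one-hot equivalence is the reason an embedding layer can be read as a linear layer applied to sparse input, while being implemented as a cheap row lookup.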

The first great success of embeddings for word encoding was Word2Vec in 2013 (“Efficient Estimation of Word Representations in Vector Space”), followed by GloVe in 2014 (“GloVe: Global Vectors for Word Representation”). Since AlexNet in 2012 (“ImageNet Classification with Deep Convolutional Neural Networks”), many convolutional network architectures (VGG16 (2014), Inception (2014), ResNet (2015), …) have been used as feature extractors for images. Starting with BERT in 2018, Transformer architectures have been widely used to extract semantic representations from sentences.

One domain where embeddings changed everything is recommender engines. It all started with latent matrix factorization methods, popularized during the Netflix Prize movie recommendation competition, whose grand prize was awarded in 2009. The idea is to learn a vector representation for each user and each product and use those vectors as base features. In fact, any sparse categorical feature can be encoded as an embedding vector, and modern recommender engines typically use hundreds of embedding matrices for different categorical variables.
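The matrix factorization idea can be sketched in a few lines: learn one vector per user and one per item so that their dot product approximates the observed ratings. This is a minimal SGD version assuming plain squared error with L2 regularization and no bias terms; the function name `factorize`, the hyperparameters and the toy ratings are all hypothetical, not a reproduction of any Netflix Prize solution.

```python
import numpy as np

def factorize(ratings, num_users, num_items, dim=8, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Learn user and item embeddings whose dot products approximate observed ratings.

    ratings: list of (user_id, item_id, rating) triples (observed entries only).
    """
    rng = np.random.default_rng(seed)
    U = rng.normal(scale=0.1, size=(num_users, dim))  # one vector per user
    V = rng.normal(scale=0.1, size=(num_items, dim))  # one vector per item
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - U[u] @ V[i]      # prediction error on one observed rating
            u_old = U[u].copy()        # use the pre-update user vector for the item step
            U[u] += lr * (err * V[i] - reg * U[u])
            V[i] += lr * (err * u_old - reg * V[i])
    return U, V

# Made-up toy data: (user, item, rating) triples.
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
U, V = factorize(ratings, num_users=3, num_items=3)

# The learned vectors score any (user, item) pair, including unobserved ones.
print(round(float(U[0] @ V[2]), 2))
```

Once trained, the rows of `U` and `V` are exactly the "base features" mentioned above: they can be fed to downstream models or compared directly.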

Dimensionality reduction is by no means a new concept in Unsupervised Learning! PCA, for example, dates back to 1901 (“On lines and planes of closest fit to systems of points in space”), the concept of the Autoencoder was introduced in 1986, and Variational Autoencoders (VAEs) were introduced in 2013 (“Auto-Encoding Variational Bayes”). Notably, a VAE is a key component of Stable Diffusion, where it compresses images into the latent space in which the diffusion process runs. A typical difficulty in Machine Learning is obtaining labeled data. Self-supervised learning techniques such as Word2Vec, Autoencoders and generative language models allow us to build powerful latent representations of the data at low cost. Meta recently released Data2Vec 2.0 to learn latent representations of any data modality using self-supervised learning (“Efficient Self-supervised Learning with Contextualized Target Representations for Vision, Speech and Language”).
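As the simplest example of unsupervised dimensionality reduction, here is a sketch of PCA computed with NumPy's SVD. The data is synthetic (50-dimensional points generated from 3 hidden factors plus small noise), chosen so that 3 components should capture almost all of the variance; the `pca` helper is hypothetical.

```python
import numpy as np

def pca(X, k):
    """Project the rows of X onto their top-k principal components."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, sorted by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:k]
    explained = float((S[:k] ** 2).sum() / (S ** 2).sum())  # fraction of variance kept
    return X_centered @ components.T, explained

rng = np.random.default_rng(0)
# Synthetic data: 500 points in 50 dimensions lying near a 3-D subspace.
latent = rng.normal(size=(500, 3))
mixing = rng.normal(size=(3, 50))
X = latent @ mixing + 0.01 * rng.normal(size=(500, 50))

Z, explained = pca(X, k=3)
print(Z.shape)               # (500, 3)
print(round(explained, 3))   # close to 1.0, since the data is essentially 3-dimensional
```

PCA is linear; Autoencoders and VAEs play the same role with non-linear encoders, which is what lets them capture richer latent structure.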

Besides learning latent representations, a lot of work is being done to learn aligned representations across different modalities. For example, CLIP (“Learning Transferable Visual Models From Natural Language Supervision”) is a recent contrastive learning method that learns semantically aligned representations of text and image data.
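The contrastive objective behind this kind of alignment can be sketched as a symmetric cross-entropy over a batch of (image, text) pairs: each image should be most similar to its own caption and vice versa. This NumPy version assumes the encoders have already produced the embeddings (here they are synthetic), uses a fixed temperature of 0.07 as an assumption, and the name `clip_style_loss` is hypothetical; it illustrates the loss, not CLIP's training code.

```python
import numpy as np

def log_softmax(logits, axis):
    shifted = logits - logits.max(axis=axis, keepdims=True)  # for numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_style_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss: image i should match text i and no other."""
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = img @ txt.T / temperature   # (batch, batch) scaled cosine similarities
    idx = np.arange(len(logits))         # correct pairs lie on the diagonal
    loss_img_to_txt = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image vs all texts
    loss_txt_to_img = -log_softmax(logits, axis=0)[idx, idx].mean()  # each text vs all images
    return float((loss_img_to_txt + loss_txt_to_img) / 2)

rng = np.random.default_rng(0)
text = rng.normal(size=(8, 32))
aligned_images = text + 0.1 * rng.normal(size=(8, 32))  # each "image" close to its caption
random_images = rng.normal(size=(8, 32))                # no alignment at all

print(clip_style_loss(aligned_images, text))  # small
print(clip_style_loss(random_images, text))   # larger
```

Minimizing this loss pulls matching pairs together and pushes mismatched pairs apart, which is what places text and images in a shared semantic space.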

The Hashing trick: how to build a recommender engine for billions of users

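One typical problem with embeddings is that they can consume a lot of memory: an embedding matrix has one row per category value, so its size grows linearly with the number of users or items, and the whole matrix tends to be loaded into memory at once.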
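A quick back-of-the-envelope calculation shows how fast this grows. The sketch assumes 64-dimensional float32 vectors (4 bytes per value) and counts only the raw weights, ignoring any optimizer state or other model parameters; `embedding_table_bytes` is a hypothetical helper.

```python
def embedding_table_bytes(num_rows, dim, bytes_per_value=4):
    """Memory needed for a dense embedding table (float32 by default)."""
    return num_rows * dim * bytes_per_value

# Hypothetical sizes: one row per user, 64-dimensional float32 vectors.
for num_users in (1_000_000, 100_000_000, 1_000_000_000):
    gb = embedding_table_bytes(num_users, dim=64) / 1e9
    print(f"{num_users:>13,} users -> {gb:,.1f} GB")
```

At a billion users, the user table alone is 1,000,000,000 × 64 × 4 bytes = 256 GB, well beyond the memory of a typical single server.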

I once had an interview where I was asked to design a model that would recommend ads to users. I drew a simple recommender engine on the board: a user embedding, an ad embedding, a couple of non-linear interactions and a "click-or-not" learning task. But the interviewer asked: "Wait, we have billions of users, how is this model going to fit on a server?!" That was a great question, and I failed the interview!
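For reference, the model drawn on the board might look roughly like the following forward pass. This is a hypothetical sketch, not the author's actual design: it assumes the "non-linear interactions" are a single ReLU layer over the concatenated embeddings, trained with binary cross-entropy on click labels, and uses tiny made-up sizes so it runs anywhere.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, deliberately tiny.
NUM_USERS, NUM_ADS, DIM, HIDDEN = 1_000, 500, 16, 32

user_emb = rng.normal(scale=0.1, size=(NUM_USERS, DIM))  # one row per user: the memory bottleneck
ad_emb = rng.normal(scale=0.1, size=(NUM_ADS, DIM))      # one row per ad
W1 = rng.normal(scale=0.1, size=(2 * DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
w2 = rng.normal(scale=0.1, size=HIDDEN)
b2 = 0.0

def click_probability(user_ids, ad_ids):
    """Forward pass: embedding lookups -> non-linear interaction layer -> P(click)."""
    x = np.concatenate([user_emb[user_ids], ad_emb[ad_ids]], axis=1)
    h = np.maximum(0.0, x @ W1 + b1)      # ReLU hidden layer mixes user and ad features
    logits = h @ w2 + b2
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid

def log_loss(p, clicked, eps=1e-12):
    """Binary cross-entropy for the click-or-not task."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(clicked * np.log(p) + (1 - clicked) * np.log(1 - p)))

users = np.array([3, 17, 256])
ads = np.array([10, 10, 42])
clicked = np.array([1, 0, 0])
p = click_probability(users, ads)
print(p, log_loss(p, clicked))
```

Everything except `user_emb` stays small as traffic grows; with billions of users, that one table is what no longer fits on a server.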