The AiEdge+: How Pinterest uses Transformers

In this blog post, I explore how Pinterest is pushing the boundaries of machine learning with its innovative use of Transformers. We cover:

How Pinterest Personalizes Ads and Homefeed Ranking with Transformers

How Pinterest uses Transformers for image retrieval

Discover machine learning at Pinterest!

How Pinterest Personalizes Ads and Homefeed Ranking with Transformers

Transformers are taking over every industry and machine learning application! Not too long ago, Transformers were just the next evolution in NLP research. Now it is difficult to find an ML domain where Transformers haven't beaten state-of-the-art (SOTA) performance. Pinterest is quite up to date when it comes to its ML research! They recently rethought their approach to personalized recommendation from the ground up by encoding long-term user behavior with Transformers.

Pinterest items are called pins. They are visual representations of products or ideas that people can save on their boards. A pin combines an image, a text description, and an external link to the actual product. Users can interact with pins by clicking, saving, or opening a closeup. Each pin is represented by an embedding that aggregates visual, text-annotation, and engagement information about that pin.
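The post does not say how the three signals are aggregated. As a minimal sketch, assuming a simple scheme of normalizing each signal, concatenating them, and normalizing again (the function names `l2_normalize` and `aggregate_pin_embedding` are hypothetical), a pin embedding could be built like this:

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def aggregate_pin_embedding(visual: Vector, text: Vector, engagement: Vector) -> Vector:
    """Hypothetical aggregation, not Pinterest's actual method: normalize each
    signal so no modality dominates by scale, concatenate, then renormalize."""
    parts = [l2_normalize(visual), l2_normalize(text), l2_normalize(engagement)]
    combined = [x for part in parts for x in part]
    return l2_normalize(combined)


# Toy 2-d visual, 2-d text, and 1-d engagement signals for a single pin.
pin = aggregate_pin_embedding(visual=[3.0, 4.0], text=[0.0, 2.0], engagement=[1.0])
print(len(pin))  # 5
```

Unit-length embeddings make dot products behave like cosine similarities, which keeps scores comparable across pins; a production system would more likely learn the combination than hard-code it.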

In recommender systems, it is typical to learn latent representations of the users and of the items we recommend. The user representation we learn depends on the specific training data. Considering the amount of data a platform like Pinterest sees every day, it is common for an ML model's training data not to span more than 30 days, in which case the learned user behavior only captures the last 30 days. Whatever behavior the user had before that is forgotten, and short-term interests (say, the past hour) are not understood by the model. In typical model development, the task is framed as a classification problem: predict whether a user will interact with an item or not. We build features to capture past actions, but we don't take into account the intrinsic time-series structure of the problem.
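To make the limitation concrete, here is a minimal sketch of the typical feature approach, assuming features are simple per-action counts over a 30-day window (the event format and the `window_count_features` helper are hypothetical). Two users with very different histories end up with identical features:

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical event format: (days_ago, "action:topic").
Event = Tuple[float, str]

TRAINING_WINDOW_DAYS = 30.0


def window_count_features(events: List[Event], window_days: float = TRAINING_WINDOW_DAYS) -> Counter:
    """Count each action type inside the window. Anything older than the
    window is dropped, and the order and recency of events are lost."""
    return Counter(action for days_ago, action in events if days_ago <= window_days)


# User A: a year-old gardening interest, then recipe activity, with a save minutes ago.
user_a = [(200.0, "save:gardening"), (3.0, "click:recipes"), (0.01, "save:recipes")]
# User B: a recipe save weeks ago and a click minutes ago.
user_b = [(25.0, "save:recipes"), (0.01, "click:recipes")]

# A's gardening interest is forgotten, and the fact that A saved a recipe
# minutes ago while B's save is weeks old is invisible to the classifier.
print(window_count_features(user_a) == window_count_features(user_b))  # True
```

A sequence model sees the timestamped events themselves, so it can in principle distinguish these two users.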

Pinterest approaches that problem differently! They learn long-term and short-term interests separately. They first train the PinnerFormer Transformer (“PinnerFormer: Sequence Modeling for User Representation at Pinterest”) on sequences of user-pin engagements going back one year. The Transformer learns the relationship between engagements at different points in time in order to predict future ones. Instead of predicting only the next engagement, they built a loss function that takes into account all future engagements in the next 14 or 28 days. The output of the model's last attention layer is used as an encoding of long-term user interests and stored in a database.
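The difference between a next-engagement objective and a future-window objective can be sketched as follows. This is a minimal illustration, not PinnerFormer's exact loss: it assumes a sampled-softmax formulation with a shared set of negative pins, and all function names are hypothetical. The Transformer that produces the user embedding is not shown.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
FutureEvent = Tuple[float, Vector]  # (days after the embedding is computed, pin embedding)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sampled_softmax_nll(user: Vector, positive: Vector, negatives: List[Vector]) -> float:
    """-log p(positive | user), with the softmax over the positive plus negatives."""
    logits = [dot(user, positive)] + [dot(user, n) for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[0]


def next_action_loss(user: Vector, future: List[FutureEvent], negatives: List[Vector]) -> float:
    """Baseline: only the very next engagement counts as a positive."""
    _, first = min(future, key=lambda e: e[0])
    return sampled_softmax_nll(user, first, negatives)


def future_window_loss(user: Vector, future: List[FutureEvent], negatives: List[Vector],
                       window_days: float = 28.0) -> float:
    """Every engagement within `window_days` counts as a positive; losses are averaged."""
    positives = [emb for days_ahead, emb in future if days_ahead <= window_days]
    if not positives:
        raise ValueError("no engagements inside the window")
    return sum(sampled_softmax_nll(user, p, negatives) for p in positives) / len(positives)


user = [1.0, 0.0]
future = [(0.5, [1.0, 0.0]), (10.0, [0.0, 1.0]), (40.0, [1.0, 1.0])]
negatives = [[-1.0, 0.0], [0.0, -1.0]]
print(future_window_loss(user, future, negatives) > next_action_loss(user, future, negatives))  # True
```

Here the toy user embedding explains the next engagement well but not the one ten days later, so only the window loss penalizes it. This is the pressure that pushes the embedding toward capturing longer-lived interests rather than the next click.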

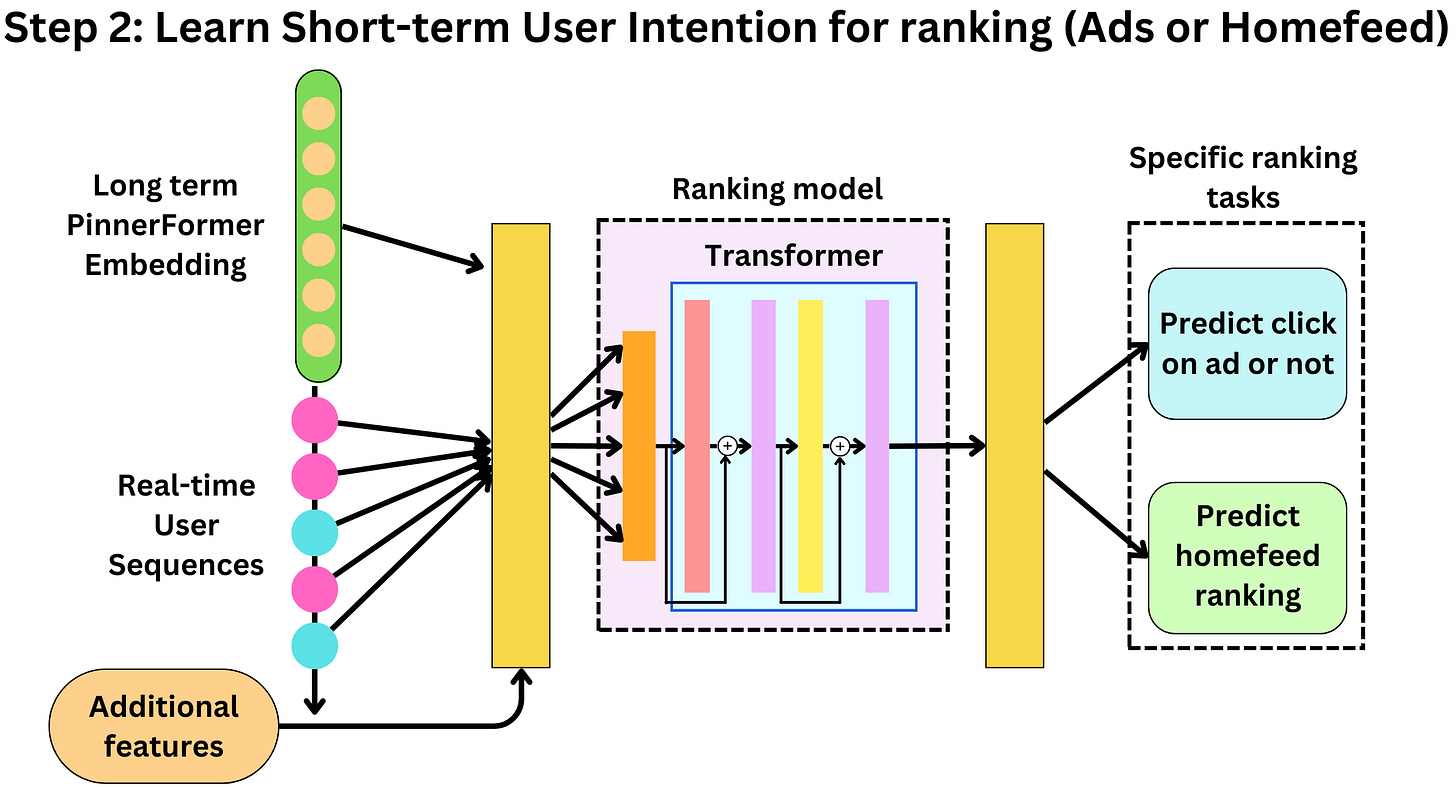

Depending on the ranking problem, a second Transformer uses the long-term user embeddings and short-term past actions (from minutes or hours ago) to predict the next pin or ad interaction. To recommend products, this Transformer learns the relationship between short-term actions and long-term interests. Check out the article: “Rethinking Personalized Ranking at Pinterest: An End-to-End Approach”.
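As a rough illustration of combining the two signals, the sketch below lets a candidate pin attend over the stored long-term embedding plus the recent-action embeddings and turns the result into an engagement score. It is a simplification under stated assumptions: a single attention head with identity projections (a real model learns query, key, and value matrices and stacks layers), a sigmoid on a dot product as the scoring head, and hypothetical function names throughout.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, tokens: List[Vector]) -> List[float]:
    """Single-head scaled dot-product attention with identity projections."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, t) * scale for t in tokens])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(len(query))]


def score_candidate(candidate: Vector, long_term: Vector, recent_actions: List[Vector]) -> float:
    """Engagement score in (0, 1) for one candidate pin or ad. The long-term
    embedding is treated as one extra token next to the recent actions."""
    context = attend(candidate, [long_term] + list(recent_actions))
    return 1.0 / (1.0 + math.exp(-dot(candidate, context)))


long_term = [1.0, 0.0]              # toy long-standing interest (say, gardening)
recent = [[0.0, 1.0], [0.0, 1.0]]   # toy actions from the last few minutes (recipes)
gardening_pin, recipe_pin = [1.0, 0.0], [0.0, 1.0]

garden_score = score_candidate(gardening_pin, long_term, recent)
recipe_score = score_candidate(recipe_pin, long_term, recent)
print(recipe_score > garden_score)  # True
```

With no recent actions, the gardening pin scores higher because only the long-term token is available; a couple of fresh recipe interactions are enough to flip the ranking. That interplay between short-term actions and long-term interests is what the ranking Transformer learns.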

How Pinterest uses Transformers for image retrieval

Machine learning innovations are driven by the need to build ever more useful products for users, under the assumption that "more useful" equates to "more profitable"!