The AiEdge+: How to Augment Large Language Models

Going beyond ChatGPT

What sets Large Language Models (LLMs) apart from other machine learning algorithms is their ability to evolve beyond their initial programming. We typically think of machine learning models as a way to encapsulate the information contained in the training data, but LLMs go beyond that: they can be augmented with tools, and they can reason with new information. Today we cover:

How to teach Large Language Models using plugins

How are Language Models able to learn in context?

Learn more about enhancing LLMs (articles, GitHub repositories, YouTube videos)

How to Teach Large Language Models using plugins

How is ChatGPT suddenly able to use plugins? It is actually not that surprising, considering it has been in the works for quite some time now. Everybody's first reaction to using ChatGPT was "if only it could connect to Google Search"! But how does it know which tool to call, and how to call it? Of course, OpenAI is keeping its own implementation a secret, but we can make an educated guess by looking at Meta AI's approach, Toolformer!

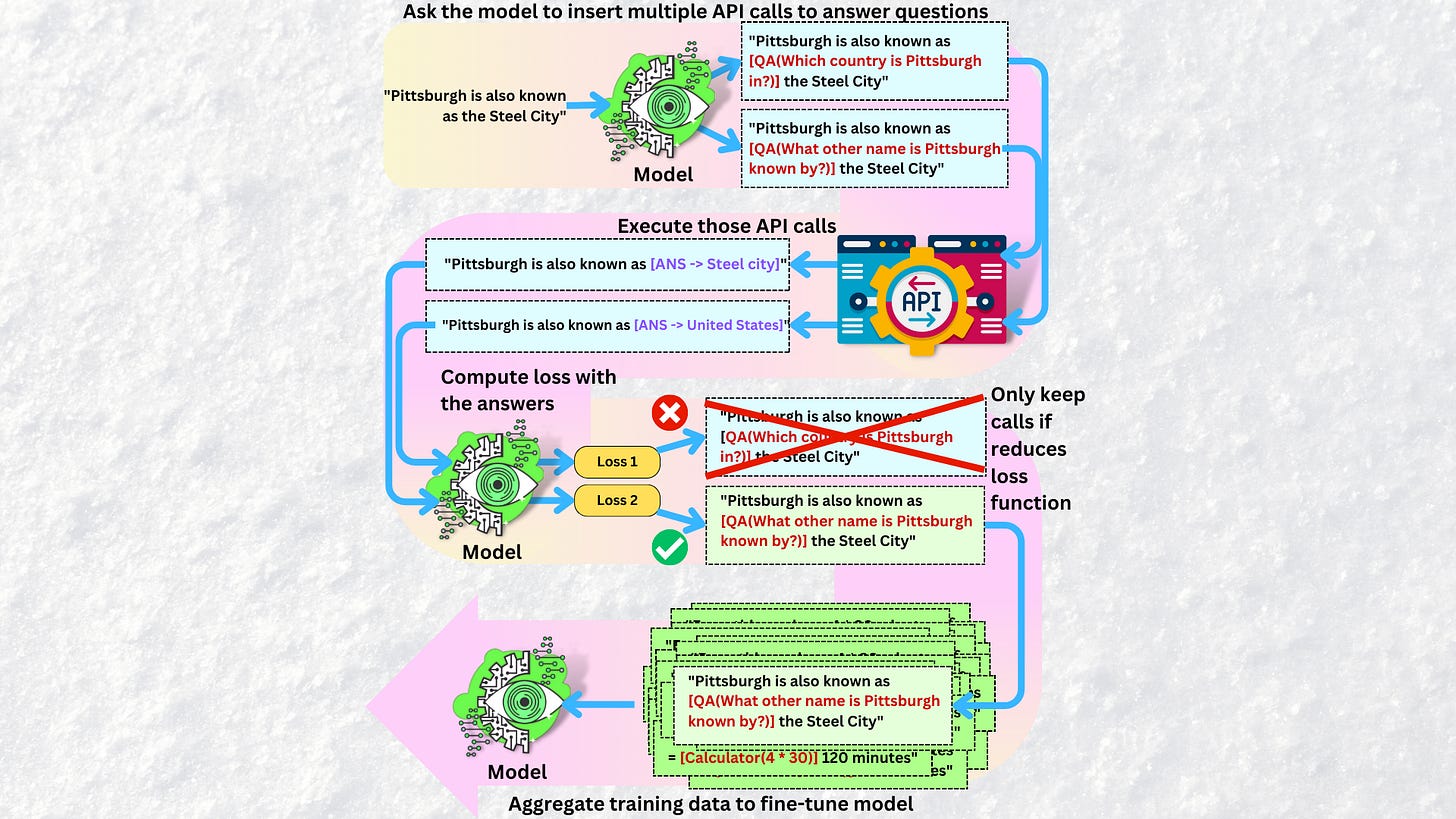

As always, it is about fine-tuning the model with the right data. So we need data that contains the plugins' API calls. Let's create it then:

We first use the LLM's ability to learn in context to create data with API calls. We present it with a few examples of what to do within the prompt, followed by the input data we need modified. For example:

Can you modify this input following this example?

Example input: "Pittsburgh is also known as the Steel City"

Example output: "Pittsburgh is also known as [QA(What other name is Pittsburgh known by?)] the Steel City"

Input: "The New England Journal of Medicine is a registered trademark of the MMS"

Output: ?

Here “QA” represents a call to a Question Answering API, but you can create examples for as many plugins as you want to include. The idea is to insert API call markers in sentences just before the actual information. Because this is done in a self-supervised manner, you can annotate as much data as you want cheaply.
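To make the prompt format concrete, here is a minimal sketch that assembles such a few-shot prompt from a list of example pairs. The function name `build_annotation_prompt` is hypothetical, and the resulting string would still need to be sent to whichever LLM you use; that call is left out on purpose.

```python
def build_annotation_prompt(examples, new_input):
    """Assemble a few-shot prompt asking an LLM to insert API-call markers.

    `examples` is a list of (plain sentence, annotated sentence) pairs.
    """
    lines = ["Can you modify this input following this example?", ""]
    for example_input, example_output in examples:
        lines.append(f'Example input: "{example_input}"')
        lines.append(f'Example output: "{example_output}"')
        lines.append("")
    # The sentence we want annotated, with the output left for the model.
    lines.append(f'Input: "{new_input}"')
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    (
        "Pittsburgh is also known as the Steel City",
        "Pittsburgh is also known as "
        "[QA(What other name is Pittsburgh known by?)] the Steel City",
    ),
]
prompt = build_annotation_prompt(
    examples,
    "The New England Journal of Medicine is a registered trademark of the MMS",
)
print(prompt)
```

Adding a second plugin would only mean appending another example pair that uses a different marker name.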

Now we are left with many guesses by the LLM on where to potentially call an API, but many will be incorrect, so we need to filter them. For that, we execute each API call and get its response. The responses are text, so we can insert them into the original sentences at the position of the API call and compute the loss on the text that follows by probing the LLM. If the loss computed with the API's response is lower than the loss computed without it, we keep the LLM's guess. A lower loss means the response helps the model predict the rest of the sentence, so that example is worth learning from.
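The filtering rule can be sketched as a comparison of two losses. In the sketch below the loss function is passed in as a parameter, because the real one would come from the LLM (for instance, the average negative log-likelihood of the continuation given the prefix). `toy_loss` is only a stand-in so the example runs on its own, and the `[call -> response]` layout and the `min_gain` threshold are illustrative assumptions.

```python
import re


def _words(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def toy_loss(prefix, continuation):
    """Toy stand-in for an LLM loss: share of continuation words not in the prefix."""
    seen = set(_words(prefix))
    words = _words(continuation)
    return sum(w not in seen for w in words) / max(len(words), 1)


def keep_api_call(prefix, api_call, api_response, continuation, loss_fn, min_gain=0.0):
    """Keep the call only if its response lowers the loss on the continuation."""
    loss_without = loss_fn(prefix, continuation)
    augmented_prefix = f"{prefix} [{api_call} -> {api_response}]"
    loss_with = loss_fn(augmented_prefix, continuation)
    return loss_without - loss_with > min_gain


prefix = "Pittsburgh is also known as"
call = "QA(What other name is Pittsburgh known by?)"
continuation = "the Steel City"

print(keep_api_call(prefix, call, "Steel City", continuation, toy_loss))    # helpful response
print(keep_api_call(prefix, call, "Pennsylvania", continuation, toy_loss))  # unhelpful response
```

With the toy loss, the helpful response is kept and the unhelpful one is discarded. Swapping in a real model only requires replacing `toy_loss` with a function of the same shape.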

At this point, we have created a new dataset with API call markers whose responses the model believes would produce the right answers. We can now use that dataset to fine-tune the model so that it learns to call APIs instead of producing sentences with low confidence.
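Both the filtering step and a fine-tuned model depend on turning a marker such as `[QA(...)]` into an actual call. The text does not say how that dispatch is done, so the following is only one plausible sketch: a regular expression finds the markers, and a dictionary maps tool names to Python functions. `run_tool_calls`, `toy_qa`, and the `->` separator are hypothetical names chosen for illustration.

```python
import re

# Matches markers such as [QA(some question)]: tool name, then its argument.
CALL_PATTERN = re.compile(r"\[(\w+)\((.*?)\)\]")


def run_tool_calls(text, tools):
    """Append each known tool's response to its call marker in `text`."""

    def substitute(match):
        name, argument = match.group(1), match.group(2)
        tool = tools.get(name)
        if tool is None:
            return match.group(0)  # unknown tool: leave the marker untouched
        return f"[{name}({argument}) -> {tool(argument)}]"

    return CALL_PATTERN.sub(substitute, text)


# Hypothetical stand-in for a Question Answering API.
ANSWERS = {"What other name is Pittsburgh known by?": "Steel City"}


def toy_qa(question):
    return ANSWERS.get(question, "unknown")


annotated = "Pittsburgh is also known as [QA(What other name is Pittsburgh known by?)]"
print(run_tool_calls(annotated, {"QA": toy_qa}))
```

Keeping the tools in a plain dictionary means a new plugin is just one more entry, and markers for tools that are not registered pass through unchanged.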

Toolformer is a framework to teach LLMs to use plugins, and its authors showed how a small model can outperform a larger one when augmented with a few tools: “Toolformer: Language Models Can Teach Themselves to Use Tools”. If you want to read more about augmented LLMs, the following survey covers the different ways to augment them: “Augmented Language Models: a Survey”. If you want to start creating plugins for ChatGPT, here is the documentation: Chat Plugins.

How are Language Models able to learn in context?

Have you noticed that when you correct ChatGPT, it apologizes and tries to go your way? This is a bit surprising, since it is a model with a fixed set of parameters and we could expect it to always output the same information. Why is there such a thing as "prompt engineering"? Why would different information come out depending on the additional context provided in the prompt? That is the Large Language Model's ability to learn in context. The longer a model's context window, the more additional context it can keep in view. For example, GPT-3 has a context window of 2,048 tokens (roughly 1,500 words), whereas GPT-4's can reach 32,768 tokens (roughly 25,000 words).
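One practical consequence of a fixed context window is that a long conversation has to be trimmed before it is sent to the model. The sketch below keeps the most recent messages that fit within a budget. It counts words for simplicity, matching the rough word estimates above, whereas real models count tokens. The function name `fit_to_context` and the sample conversation are made up for illustration.

```python
def fit_to_context(messages, max_words):
    """Keep the most recent messages whose total word count fits the budget."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        n_words = len(message.split())
        if used + n_words > max_words:
            break
        kept.append(message)
        used += n_words
    return list(reversed(kept))  # restore chronological order


conversation = [
    "User: What is the capital of Australia?",
    "Assistant: Sydney.",
    "User: That is wrong, it is Canberra.",
    "Assistant: Apologies, you are right: Canberra.",
]

print(fit_to_context(conversation, max_words=15))
print(fit_to_context(conversation, max_words=100))
```

With the small budget the original question falls out of the window, which is why a model with a short context can lose track of earlier corrections.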