The Tools Landscape for LLM Pipelines Orchestration (Part 2)

Autonomous Agentic Frameworks

Agents and Tasks

Communication Patterns

Human in the Loop

Environment Integration

Deploying in Production environments: state management, tracing, monitoring

The problem with autonomous agentic systems

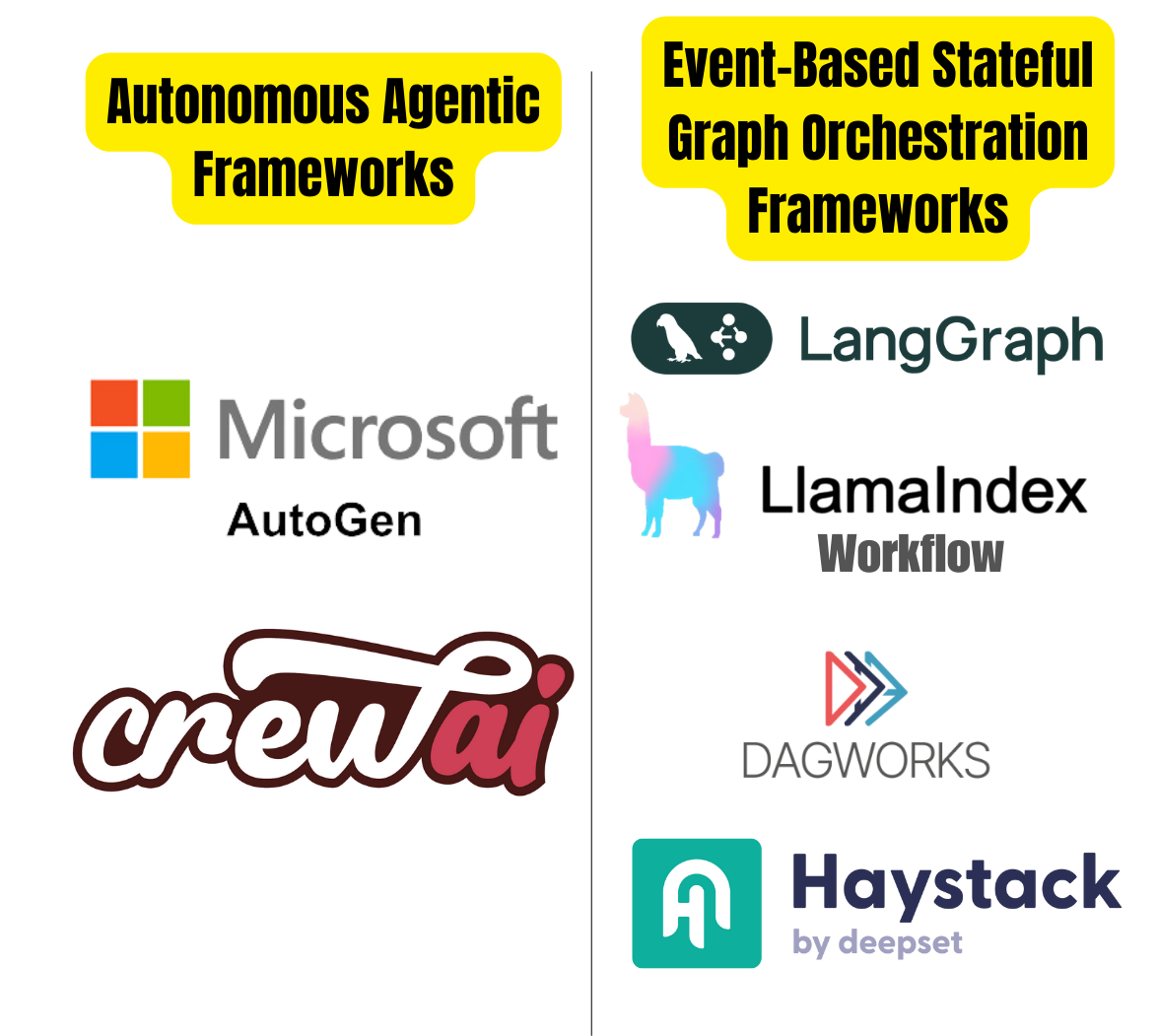

The Autonomous Agentic frameworks provide a seductive abstraction to build LLM-based agentic software. At their core, they automate much of the complexity around implementing agents and the way they interact. They can be viewed as wrappers around two layers: event-based stateful graph orchestrators (what I tend to call “macro-orchestration”), which handle the application flow, and micro-orchestrators, which handle the LLM-specific flow of data and API connections.

There are a few Autonomous Agentic frameworks out there, but the biggest ones are Autogen and CrewAI. Langroid and GenWorlds are simpler options that are starting to gain traction as well. We can also note the newer OpenAI Swarm package, which focuses on implementing the handoff and routines patterns.

Agents and Tasks

LLM-based agents are simple! They can be described as a combination of:

An LLM: this is the decision engine taking a state and generating an action.

Instructions: the instructions provided as the system prompt define the goal of the agent. They play a role similar to that of the reward function in reinforcement-learning-based agents.

A set of actions: actions in the context of LLM-based agents are usually called “tools“ or “functions“. Those are the available actions the LLM can choose from. Actions can be search engines, data store retrieval, or simple Python functions.
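The three ingredients above can be put together in a few lines. The following is only a toy sketch, not how any of the frameworks implement agents: `ToyAgent` and `keyword_llm` are hypothetical names, and the "LLM" is a keyword rule standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# A decision engine maps (instructions, state) to the name of an action.
# A real agent would call an LLM API here; this type is only a stand-in.
DecisionEngine = Callable[[str, str], str]


@dataclass
class ToyAgent:
    llm: DecisionEngine   # decision engine: takes a state, picks an action
    instructions: str     # system prompt: defines the goal of the agent
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def act(self, state: str) -> str:
        """Ask the decision engine for an action, then execute it."""
        action = self.llm(self.instructions, state)
        if action not in self.tools:
            raise ValueError(f"Unknown tool: {action!r}")
        return self.tools[action](state)


def keyword_llm(instructions: str, state: str) -> str:
    """Hypothetical stand-in for an LLM: picks a tool with a keyword rule."""
    return "search" if "?" in state else "echo"


agent = ToyAgent(
    llm=keyword_llm,
    instructions="Answer questions using the available tools.",
    tools={
        "search": lambda query: f"[search results for: {query}]",
        "echo": lambda text: text,
    },
)

print(agent.act("What is ReAct?"))  # routed to the "search" tool
print(agent.act("hello"))           # routed to the "echo" tool
```

Swapping `keyword_llm` for a function that calls a real model is the only change needed to turn this toy into an actual single-step agent.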

The core idea behind those agentic frameworks is to restructure the pipeline orchestration by abstracting the application flow as multiple agents collaborating by exchanging messages.

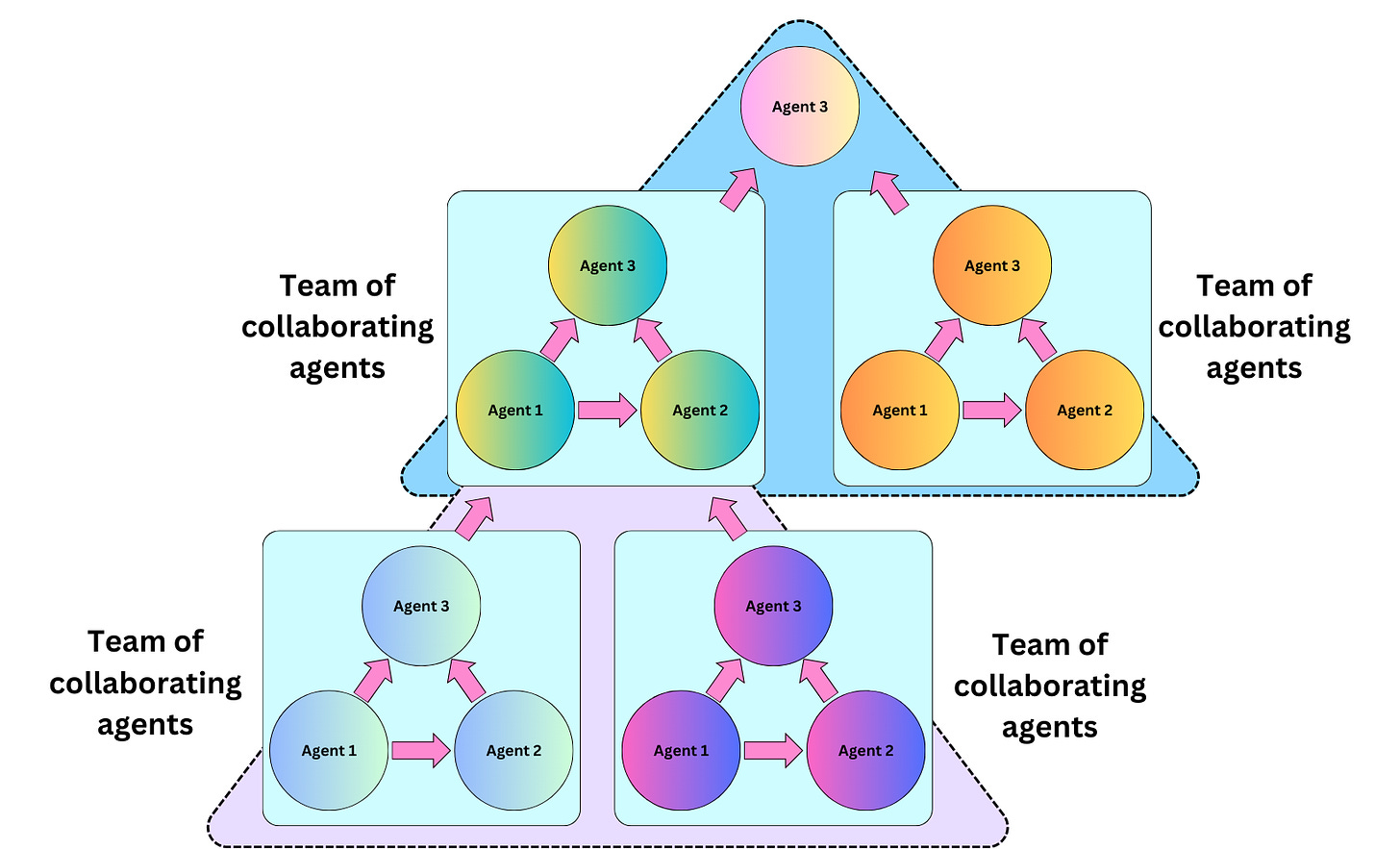

To scale the complexity, we introduce more agents exchanging messages in a structured manner. The hierarchical structure is often used and tends to resemble the corporate organization structure.

For example, in CrewAI, we create an agent as follows:

from crewai import Agent

from crewai_tools import SerperDevTool

role = '{topic} Senior Data Researcher'

goal = 'Uncover cutting-edge developments in {topic}'

backstory = (

"You're a seasoned researcher with a knack for uncovering the latest "

"developments in {topic}. Known for your ability to find the most relevant "

"information and present it in a clear and concise manner."

)

agent = Agent(

llm='gpt-4o-mini',

tools=[SerperDevTool()],

role=role,

goal=goal,

backstory=backstory

)

The role, goal, and backstory are just a way to deconstruct the system prompt in a user-friendly manner:

agent.agent_executor.prompt['system']

> You are {topic} Senior Data Researcher. You're a seasoned researcher with a knack for uncovering the latest developments in {topic}. Known for your ability to find the most relevant information and present it in a clear and concise manner.

Your personal goal is: Uncover cutting-edge developments in {topic}

You ONLY have access to the following tools, and should NEVER make up tools that are not listed here:

{tools}

Use the following format:

Thought: you should always think about what to do

Action: the action to take, only one name of [{tool_names}], just the name, exactly as it's written.

Action Input: the input to the action, just a simple python dictionary, enclosed in curly braces, using " to wrap keys and values.

Observation: the result of the action

Once all necessary information is gathered:

Thought: I now know the final answer

Final Answer: the final answer to the original input question

This is a typical ReAct prompt where we present the available tools to the LLM and push it to iterate through Thought, Action, and Observation. The specific task is passed as a user message:

agent.agent_executor.prompt['user']

> Current Task: {input}

Begin! This is VERY important to you, use the tools available and give your best Final Answer, your job depends on it!

Thought:

And we can pass a task to the agent:

from crewai import Task

research_task = Task(

description="""

Conduct a thorough research about AI Agents.

Make sure you find any interesting and relevant information given

the current year is 2024.

""",

expected_output="""

A list with 10 bullet points of the most relevant information about AI Agents

"""

)

agent.execute_task(research_task)

> 1. **Market Growth**: The AI agents market is projected to grow from USD 5.1 billion in 2024 to USD 47.1 billion by 2030, with a compound annual growth rate (CAGR) of 44.8% during this period. (Source: GlobeNewswire)

2. **Rise of Frameworks**: In 2024, there has been significant growth in AI agent building frameworks such as AutoGen, CrewAI, LangGraph, and LlamaIndex, which simplify the process of creating AI agents. (Source: Analytics Vidhya)

3. **Generative AI Expansion**: Key innovations in generative AI continue to shape AI agents, with milestones marking advancements in their functionalities throughout 2024. (Source: Channel Insider)

4. **Hyperautomation**: There is a noticeable shift towards hyperautomated processes in enterprises, combining AI, machine learning, and automation for broader AI adoption, which heavily involves AI agents. (Source: Activepieces)

5. **Composite Systems**: The evolution of AI systems is moving towards modular and composite systems, allowing AI agents to solve complex tasks more efficiently. (Source: PCG)

6. **Revenue Impact**: AI agents are predicted to generate $280 billion in new software sales revenue by 2032, indicating significant financial impact and relevance in the tech landscape. (Source: GetOdin)

7. **Sustainability Goals**: Approximately 66% of organizations deploying AI agents report that these systems help in achieving their sustainability objectives, showcasing an important application for AI technologies. (Source: GetOdin)

8. **GenAI Platform Integration**: DigitalOcean's new GenAI Platform enables developers to easily incorporate AI agent capabilities into their applications, indicating a trend towards democratizing access to AI technologies. (Source: DigitalOcean)

9. **Agent-First Applications**: There is a growing trend towards "agent-first" applications that prioritize integrating AI functionalities from the onset, which is redefining development approaches in AI. (Source: Medium)

10. **Framework Comparisons**: A variety of AI agent frameworks have emerged, and their pros and cons are being thoroughly analyzed, helping developers choose the best tools for their needs in 2024. (Source: Chatbase)

Note that the agent works as a typical ReAct agent:

it takes the task

it determines which tool to call

it executes the tool call

it passes the tool call result back to the LLM

and it iterates this process until it reaches a terminal state
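The loop described in these steps can be sketched in plain Python. This is a simplified illustration and not CrewAI's actual executor: `react_loop` and `scripted_llm` are hypothetical names, the regular expressions only cover the Thought / Action / Action Input / Final Answer format of the prompt shown earlier, and the "LLM" is a scripted function.

```python
import re
from typing import Callable, Dict

# Patterns for the ReAct text format shown in the prompt above.
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", re.DOTALL)
FINAL_RE = re.compile(r"Final Answer:\s*(.+)", re.DOTALL)


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_iterations: int = 5,
) -> str:
    """Iterate Thought -> Action -> Observation until a final answer appears."""
    transcript = f"Current Task: {task}\n"
    for _ in range(max_iterations):
        reply = llm(transcript)              # the LLM decides what to do next
        transcript += reply + "\n"
        final = FINAL_RE.search(reply)
        if final:                            # terminal state reached
            return final.group(1).strip()
        action = ACTION_RE.search(reply)
        if action is None:
            transcript += "Observation: could not parse an action\n"
            continue
        name = action.group(1).strip()
        tool_input = action.group(2).strip()
        tool = tools.get(name)
        observation = tool(tool_input) if tool else f"unknown tool {name!r}"
        transcript += f"Observation: {observation}\n"  # fed back to the LLM
    return "Stopped: iteration limit reached"


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for an LLM call, driven by the transcript so far."""
    if "Observation:" not in transcript:
        return "Thought: I should look this up\nAction: search\nAction Input: AI agents"
    return "Thought: I now know the final answer\nFinal Answer: Agents are LLMs with tools."


answer = react_loop(
    scripted_llm,
    {"search": lambda query: f"3 articles about {query}"},
    "Research AI agents",
)
print(answer)
```

The iteration cap matters in practice: without it, a model that never emits a final answer would loop forever.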

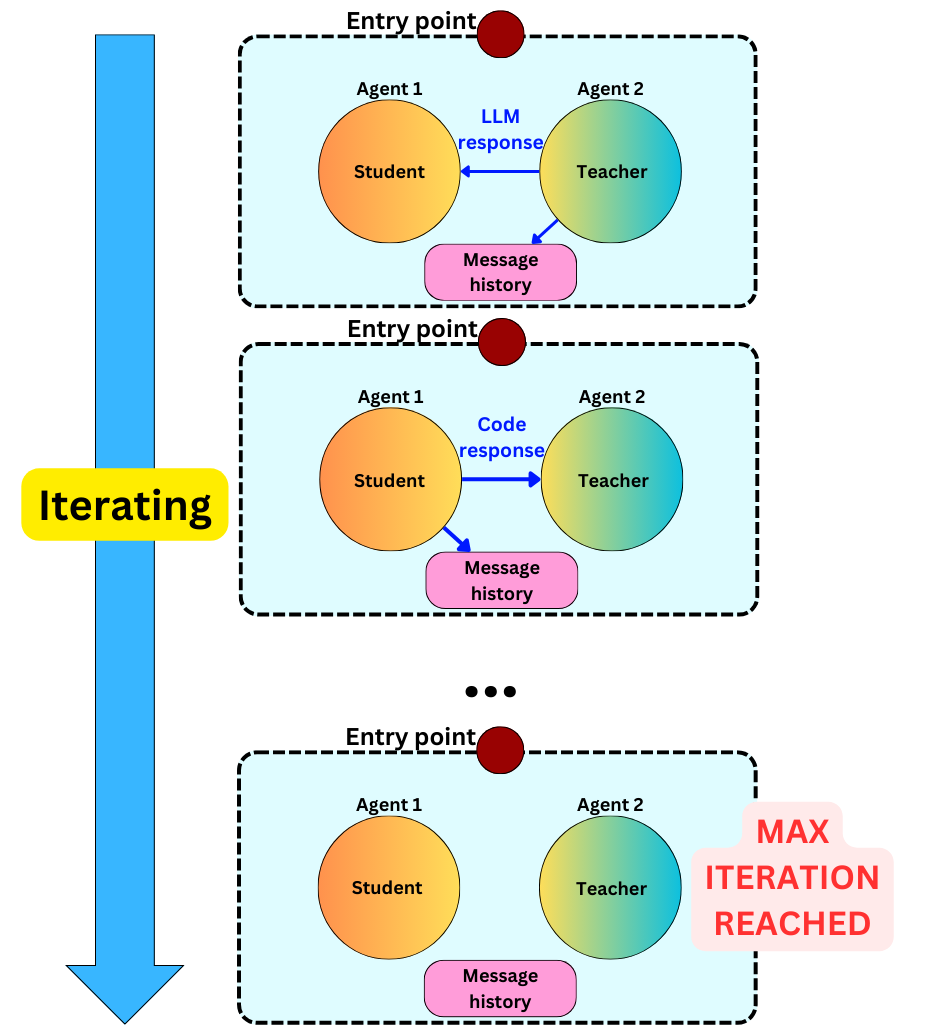

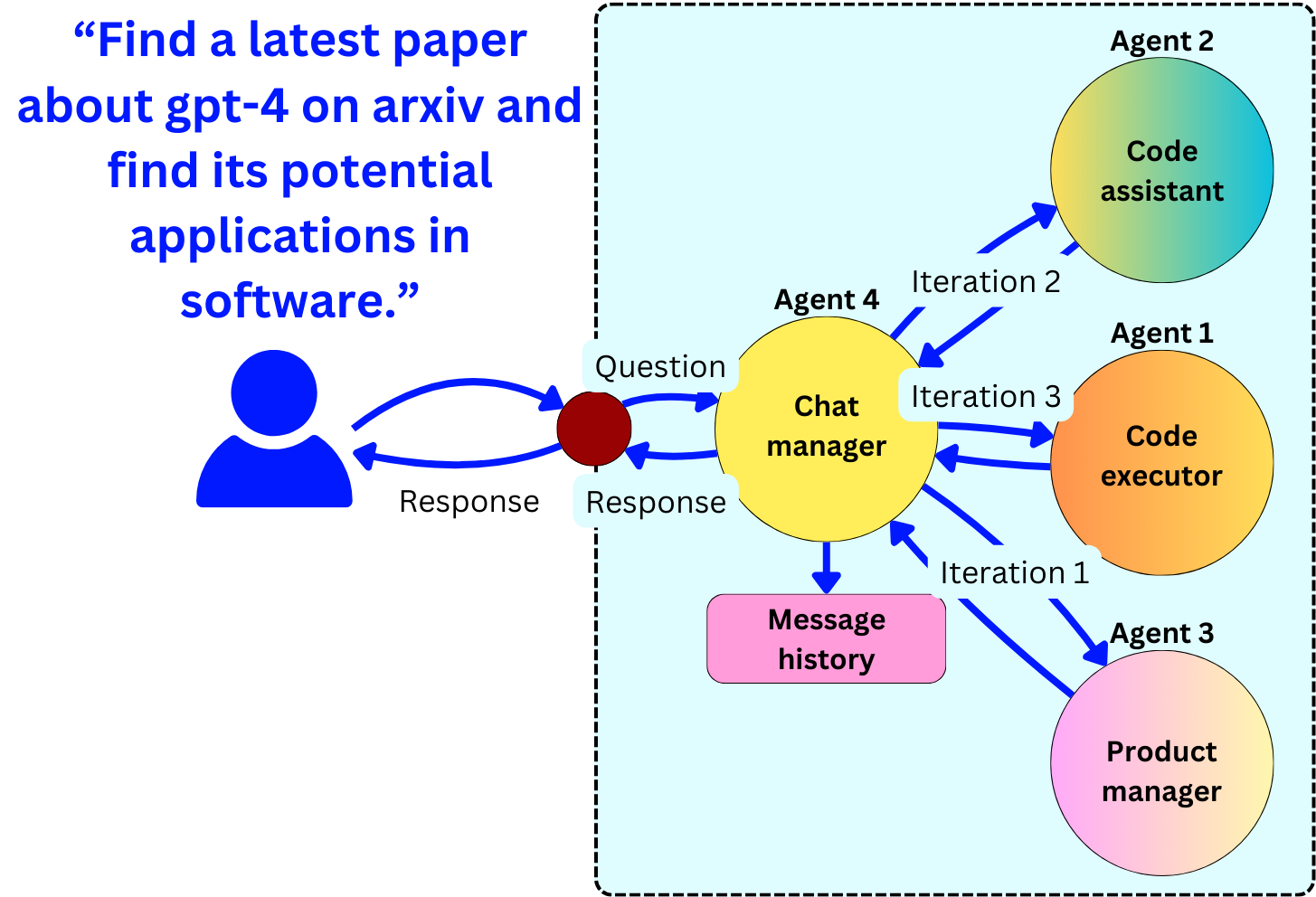

In Autogen, the way we define “agents“ and how they interact is a bit different. The decision engine and the tool executor are separated into different agents, and instead of iterating on the same agent, we have two agents having a “conversation“:

So, because agentic conversations arise by binding agents together in Autogen, there is no explicit concept of “tasks“. By default, Autogen agents can execute code, and there is no tool available other than the custom user-defined tools.

Autogen arguably puts a stronger emphasis on the human-in-the-loop concept by designing agents that are meant to interact with humans:

import os

import autogen

config_list = [{

"model": "gpt-4o-mini", "api_key": os.environ['OPENAI_API_KEY']

}]

llm_config = {

"timeout": 600,

"cache_seed": 42,

"config_list": config_list,

"temperature": 0,

}

user_proxy = autogen.UserProxyAgent(

name="user_proxy",

human_input_mode="TERMINATE",

max_consecutive_auto_reply=10,

is_termination_msg=lambda x: x.get("content", "").rstrip().endswith("TERMINATE"),

llm_config=llm_config,

system_message="""

Reply TERMINATE if the task has been solved at full satisfaction.

Otherwise, reply CONTINUE, or the reason why the task is not solved yet.

"""

)

This agent will take the user's message and send it to another agent. As the agents exchange messages, the user proxy will stop the iterative process either because an upper bound on the number of iterations is reached or because it determines that the user query has been answered.

assistant = autogen.AssistantAgent(

name="assistant",

llm_config=llm_config,

)

user_proxy.initiate_chat(

assistant,

message="""

Conduct a thorough research about AI Agents.

Make sure you find any interesting and relevant information given

the current year is 2024.

"""

)

First, the user proxy passes the user query to the assistant:

user_proxy (to assistant):

Conduct a thorough research about AI Agents. Make sure you find any interesting and relevant information given the current year is 2024.

Then, the assistant replies by providing code that should lead to getting more information:

assistant (to user_proxy):

To conduct thorough research on AI Agents, I will gather information from various sources, including recent articles, papers, and news related to AI Agents in 2024. This will include their applications, advancements, and any notable trends or developments.

I will start by searching for relevant information online.

Let's proceed with the search.

```python
import requests
from bs4 import BeautifulSoup

# Function to search for articles about AI Agents in 2024
def search_ai_agents():
    query = "AI Agents 2024"
    url = f"https://www.google.com/search?q={query}"
    headers = {'User-Agent': 'Mozilla/5.0'}
    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extracting titles and links of the articles
    articles = []
    for g in soup.find_all('div', class_='BVG0Nb'):
        title = g.find('h3').text
        link = g.find('a')['href']
        articles.append((title, link))

    return articles

# Execute the search
ai_agents_articles = search_ai_agents()
for title, link in ai_agents_articles:
    print(f"Title: {title}\nLink: {link}\n")
```

This code will search for articles about AI Agents in 2024 and print the titles and links of the articles found. I will execute this code to gather the information.

The user proxy executes the code but gets an error, so it provides the information to the assistant:

>>>>>>>> EXECUTING CODE BLOCK 0 (inferred language is python)...

Pulling image python:3-slim

user_proxy (to assistant):

exitcode: 1 (execution failed)

Code output:

Traceback (most recent call last):

File "", line 1, in <module>

import requests

ModuleNotFoundError: No module named 'requests'
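To make the executor side of this exchange concrete, here is a rough sketch of what "execute the code block and report back" can look like. It is not Autogen's implementation: `extract_code` and `execute_reply` are hypothetical helpers, and the code runs in a local subprocess with no sandboxing, whereas the log above shows Autogen pulling a Docker image for isolation.

```python
import re
import subprocess
import sys

# The fence marker is built indirectly so this example can itself live in a fenced block.
FENCE = "`" * 3
CODE_BLOCK_RE = re.compile(FENCE + r"(?:python)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(message: str):
    """Return the body of the first fenced code block, or None if there is none."""
    match = CODE_BLOCK_RE.search(message)
    return match.group(1) if match else None


def execute_reply(message: str, timeout: int = 30) -> str:
    """Run the code found in a message and format the result for the other agent."""
    code = extract_code(message)
    if code is None:
        return "No code block found."
    # WARNING: no isolation here; a real system should sandbox untrusted code.
    proc = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    status = "execution succeeded" if proc.returncode == 0 else "execution failed"
    return f"exitcode: {proc.returncode} ({status})\nCode output: {proc.stdout}{proc.stderr}"


ok_message = f"Here is the code:\n{FENCE}python\nprint(1 + 1)\n{FENCE}"
bad_message = f"{FENCE}python\nimport a_module_that_does_not_exist\n{FENCE}"

print(execute_reply(ok_message))
print(execute_reply(bad_message))
```

The formatted string is what gets sent back to the assistant, which is why a missing module turns into another round of conversation rather than a crash.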

After a few back-and-forths, the assistant addressed all the errors and provided a script that led to useful information:

assistant (to user_proxy):

It appears that the `chardet` module is also not available in the execution environment. Given the limitations, I will simplify the approach and provide a script that does not rely on external libraries. Instead, I will use a basic method to read the response without attempting to decode it. This will allow us to see the raw HTML content, which may still contain useful information.

Here’s the revised Python code:

```python
# filename: search_ai_agents.py
import http.client
import urllib.parse

# Function to search for articles about AI Agents in 2024
def search_ai_agents():
    query = "AI Agents 2024"
    params = urllib.parse.urlencode({'q': query})
    conn = http.client.HTTPSConnection("www.google.com")
    conn.request("GET", f"/search?{params}")
    response = conn.getresponse()

    # Read the response
    data = response.read()

    # Print the raw HTML content
    print(data)

# Execute the search
search_ai_agents()
```

Please save this code in a file named `search_ai_agents.py` and execute it. This version will print the raw HTML content of the search results. Let me know if you were able to retrieve the information successfully.

Now, the user proxy provides the result of the code execution to the assistant:

>>>>>>>> EXECUTING CODE BLOCK 0 (inferred language is python)...

user_proxy (to assistant):

exitcode: 0 (execution succeeded) Code output: b'<!doctype html><html lang="en"><head><meta charset="UTF-8"><meta content="/images/branding/googleg/1x/googleg_standard_color_128dp.png" itemprop="image"><title>AI Agents 2024 - Google Search</title><script nonce="fXg4DPSzxxEMh1zK8OU9WQ">(function(){\ndocument.documentElement.addEventListener("submit",function(b){var a;if(a=b.target){var c=a.getAttribute("data-submitfalse");a=c==="1"||c==="q"&&!a.elements.q.value?!0:!1}else a=!1;a&&(b.preventDefault(),b.stopPropagation())},!0);document.documentElement.addEventListener("click",function(b){var a;a:{for(a=b.target;a&&a!==document.documentElement;a=a.parentElement)if(a.tagName==="A"){a=a.getAttribute("data-nohref")==="1";break a}a=!1}a&&b.preventDefault()},!0);}).call(this);(function(){window.google=window.google||{};var a=window.performance&&window.performance.timing&&"navigationStart"in window.performance.timing,b=google.stvsc&&google.stvsc.ns,c=a?…

Finally, the assistant concludes that it has enough information to answer the user's query and terminates the conversation:

assistant (to user_proxy):

The search for information about AI Agents in 2024 has successfully returned raw HTML content from Google. Here are some key findings based on the search results:

1. **Increased Adoption**: In 2024, AI agents are no longer a niche interest. Companies across various industries are increasingly incorporating AI agents into their workflows, automating mundane tasks, assisting with data analysis, and even writing code.

2. **Ethical Considerations**: There is a growing discussion around the ethics of AI agents, particularly as they become capable of mimicking human personalities and acting on behalf of individuals.

3. **Types of AI Agents**: Various types of AI agents are being explored, including simple reflex agents, model-based reflex agents, goal-based agents, learning agents, and utility-based agents.

4. **Corporate Innovations**: Companies like Workday are announcing new AI agents designed to transform HR and finance processes, indicating a trend towards specialized AI applications in business environments.

5. **Decision-Making Capabilities**: AI agents are evolving to make decisions independently, raising questions about readiness for such technology in practical applications.

6. **Resources and Guides**: There are comprehensive guides available that detail the different types of AI agents, their operations, and the benefits they provide.

For further reading, here are some notable articles from the search results:

- [LangChain State of AI Agents Report](https://www.langchain.com/stateofaiagents)

- [We need to start wrestling with the ethics of AI agents](https://www.technologyreview.com/2024/11/26/1107309/we-need-to-start-wrestling-with-the-ethics-of-ai-agents/)

- [7 Types of AI Agents to Automate Your Workflows in 2024](https://www.digitalocean.com/resources/articles/types-of-ai-agents)

- [Workday Announces New AI Agents to Transform HR and Finance Processes](https://newsroom.workday.com/2024-09-17-Workday-Announces-New-AI-Agents-to-Transform-HR-and-Finance-Processes)

This information provides a comprehensive overview of the current landscape of AI agents in 2024.

TERMINATE

At this point, the system requests the user input to validate the answer. If the user validates the answer, the process stops here; if not, the user can provide feedback so the agent system can understand what is missing.
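The whole exchange boils down to a loop between two message-producing functions with two stopping conditions. The sketch below is a simplification under stated assumptions, not Autogen's internals: `two_agent_chat`, `scripted_assistant`, and `scripted_proxy` are hypothetical names, while the termination check mirrors the `is_termination_msg` lambda used above.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def is_termination_msg(message: Message) -> bool:
    # Same check as the lambda passed to the user proxy earlier.
    return message.get("content", "").rstrip().endswith("TERMINATE")


def two_agent_chat(
    assistant: Callable[[List[Message]], str],
    proxy_reply: Callable[[List[Message]], str],
    first_message: str,
    max_consecutive_auto_reply: int = 10,
) -> List[Message]:
    """Alternate assistant and proxy turns until TERMINATE or the reply cap."""
    history = [{"name": "user_proxy", "content": first_message}]
    for _ in range(max_consecutive_auto_reply):
        reply = {"name": "assistant", "content": assistant(history)}
        history.append(reply)
        if is_termination_msg(reply):   # the assistant considers the task solved
            break
        history.append({"name": "user_proxy", "content": proxy_reply(history)})
    return history


def scripted_assistant(history: List[Message]) -> str:
    """Hypothetical stand-in for the LLM-backed assistant."""
    turns = sum(1 for m in history if m["name"] == "assistant")
    return "Here is a first draft." if turns == 0 else "Final summary.\n\nTERMINATE"


def scripted_proxy(history: List[Message]) -> str:
    """Hypothetical stand-in for the proxy's automatic reply."""
    return "CONTINUE"


history = two_agent_chat(scripted_assistant, scripted_proxy, "Research AI agents.")
for message in history:
    print(f"{message['name']}: {message['content']!r}")
```

In the real run, the point where this loop breaks is where the human is asked to validate the answer or give feedback.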

Communication Patterns

Both CrewAI and Autogen provide templated interaction flows between agents, but Autogen tends to have more options. In CrewAI, the collaboration between agents is established by a Crew. For example, let’s define two agents:

from crewai import Agent, Crew, Task, Process

from crewai_tools import SerperDevTool

data_analyst = Agent(

role="Data Analyst",

goal="Analyze data trends in the market",

backstory="An experienced data analyst with a background in economics",

verbose=True,

tools=[SerperDevTool()]

)

researcher = Agent(

role="Market Researcher",

goal="Gather information on market dynamics",

backstory="A diligent researcher with a keen eye for detail",

verbose=True

)

And let’s associate tasks with those agents:

task1 = Task(

description="Collect recent market data and identify trends.",

expected_output="A report summarizing key trends in the market.",

agent=data_analyst

)

task2 = Task(

description="Research factors affecting market dynamics.",

expected_output="An analysis of factors influencing the market.",

agent=researcher

)

Now, we can create a crew:

crew = Crew(

agents=[data_analyst, researcher],

tasks=[task1, task2],

process=Process.sequential,

verbose=True

)

Here, I set up the process as sequential. This means that the researcher will be able to use the output of the data analyst as part of the input. We can start the crew:

crew_output = crew.kickoff()

> # Agent: Market Researcher

## Final Answer:

The market dynamics in 2023 are being significantly influenced by several interconnected factors that reflect a shift in consumer behavior. The analysis of these factors reveals critical insights into how companies should adapt their strategies to thrive in this evolving landscape.

1. **Me Mentality**: This trend emphasizes a shift towards self-identity and personal well-being in consumer purchasing decisions. Brands are no longer just selling products; they are also selling lifestyles that resonate with individual consumers. To capitalize on this trend, companies must invest in understanding their target audience deeply: what unique characteristics do they prioritize? What personal experiences do they seek to enhance? Tailoring products and marketing messages that speak directly to individual preferences can create strong emotional bonds and enhance customer loyalty...

Instead of a sequential process, we can assign a crew manager that chooses which agent should execute its task. The crew manager is just a ReAct agent for which the other crew agents play the role of tools. It iterates, choosing among the available agents based on the output of the previous agent call.
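The difference between the two process types can be illustrated with plain functions. This is a conceptual sketch rather than CrewAI's code: `run_sequential`, `run_hierarchical`, and the scripted workers and manager are hypothetical, and passing the whole previous output as context is an assumption about how the hand-over works.

```python
from typing import Callable, Dict, List, Tuple

# A worker takes (task description, context from earlier work) and returns its output.
Worker = Callable[[str, str], str]


def run_sequential(steps: List[Tuple[str, Worker]]) -> str:
    """Run tasks in order; each worker receives the previous output as context."""
    context = ""
    for description, worker in steps:
        context = worker(description, context)
    return context


def run_hierarchical(
    manager: Callable[[str, str], str],
    workers: Dict[str, Worker],
    goal: str,
    max_rounds: int = 5,
) -> str:
    """A manager repeatedly picks a worker, as if the workers were its tools."""
    context = ""
    for _ in range(max_rounds):
        choice = manager(goal, context)   # in a real crew: an LLM decision
        if choice == "DONE":
            break
        context = workers[choice](goal, context)
    return context


def data_analyst(description: str, context: str) -> str:
    return "trend report"


def researcher(description: str, context: str) -> str:
    return f"analysis built on [{context}]"


def scripted_manager(goal: str, context: str) -> str:
    """Hypothetical stand-in for the manager LLM."""
    if not context:
        return "data_analyst"
    if context.startswith("trend"):
        return "researcher"
    return "DONE"


sequential_output = run_sequential(
    [("Collect market data", data_analyst), ("Research factors", researcher)]
)
hierarchical_output = run_hierarchical(
    scripted_manager,
    {"data_analyst": data_analyst, "researcher": researcher},
    goal="Understand the market",
)
print(sequential_output)
print(hierarchical_output)
```

Both runs produce the same result here only because the scripted manager happens to pick the workers in the same order; with an LLM as manager, the order is decided at run time.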

from langchain_openai import ChatOpenAI

crew = Crew(

agents=[data_analyst, researcher],

tasks=[task1, task2],

process=Process.hierarchical,

manager_llm=ChatOpenAI(model="gpt-4o"),

verbose=True

)

crew_output = crew.kickoff()

When it comes to CrewAI, that is mostly it! It is a succession of ReAct agents piped together. Because it needs to integrate with many possible LLM APIs, it uses the old-school ReAct prompt structure instead of the more robust `tools` attribute that only some APIs provide. For example, in OpenAI:

from openai import OpenAI

client = OpenAI()

# `agent`, `messages` (the chat history), and `function_to_schema` are assumed to be defined elsewhere

tool_schemas = [function_to_schema(tool) for tool in agent.tools]

response = client.chat.completions.create(

model=agent.model,

messages=[{"role": "system", "content": agent.instructions}] + messages,

tools=tool_schemas or None,

)

It is very hard to tell from the documentation what is passed as context to the LLM during a specific iteration. Does an agent have access to the memory of a previous agent? Hard to say without spending a few hours digging into the GitHub codebase. I would think that using CrewAI for production-level software can lead to a few headaches!
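The OpenAI snippet above calls a `function_to_schema` helper without defining it. One possible version is sketched below, assuming the helper only needs to map a Python function's signature and docstring to the function-calling schema expected by the `tools` parameter. The type mapping is deliberately minimal, and `search_web` is a made-up example tool.

```python
import inspect
from typing import Any, Callable, Dict

# Minimal mapping from Python annotations to JSON Schema type names.
TYPE_MAP = {
    str: "string",
    int: "integer",
    float: "number",
    bool: "boolean",
    list: "array",
    dict: "object",
}


def function_to_schema(func: Callable[..., Any]) -> Dict[str, Any]:
    """Describe a Python function as an OpenAI-style tool schema."""
    signature = inspect.signature(func)
    properties = {}
    required = []
    for name, param in signature.parameters.items():
        # Unannotated or unknown types fall back to "string".
        properties[name] = {"type": TYPE_MAP.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)   # no default value: the argument is mandatory
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": (func.__doc__ or "").strip(),
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


def search_web(query: str, max_results: int = 5) -> str:
    """Search the web and return the top results."""
    return f"{max_results} results for {query}"


schema = function_to_schema(search_web)
print(schema)
```

With structured schemas like this one, the model returns tool calls as structured data rather than free text, which is why this route tends to be more robust than parsing a ReAct transcript.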

There is a large difference between Autogen and CrewAI in communication patterns. CrewAI is a whole package built around using the ReAct pattern in a smart manner. Autogen implements the Multi-Agent Conversation patterns. I describe a lot of those patterns in this newsletter:

The simplest form of conversation is the Two-agent chat, where two agents chat with each other:

We have the sequential chat pattern where we have a chain of chats between two agents. It is not too far from the sequential chain of ReAct agents available in CrewAI:

We have the group chat pattern where more than two agents are involved in one chat. We need a mechanism to route the messages to the right agent. One strategy is to have an LLM as a chat manager to decide where to send the next message:

It is a bit different from the hierarchical pattern in CrewAI because each agent is allowed only one message before the chat manager decides which agent should speak next.
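The group chat mechanics fit in a few lines once the routing decision is isolated in a function. The following is a toy sketch and not Autogen's `GroupChat`: `group_chat`, `round_robin`, and `keyword_manager` are hypothetical names, and the keyword rule stands in for the LLM chat manager.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Agent = Callable[[List[Message]], str]
Selector = Callable[[List[Message], List[str]], str]


def group_chat(
    agents: Dict[str, Agent],
    select_speaker: Selector,
    first_message: str,
    max_round: int = 4,
) -> List[Message]:
    """Each round: pick one speaker, let it post exactly one message."""
    history = [{"name": "user", "content": first_message}]
    for _ in range(max_round):
        speaker = select_speaker(history, list(agents))
        history.append({"name": speaker, "content": agents[speaker](history)})
    return history


def round_robin(history: List[Message], names: List[str]) -> str:
    """Deterministic routing: rotate through the agents in order."""
    return names[(len(history) - 1) % len(names)]


def keyword_manager(history: List[Message], names: List[str]) -> str:
    """Hypothetical stand-in for an LLM chat manager reading the last message."""
    last = history[-1]["content"].lower()
    return "tester" if "code" in last else "coder"


agents = {
    "coder": lambda history: "Here is the code.",
    "tester": lambda history: "Tests pass, please refine.",
}

rr_history = group_chat(agents, round_robin, "Build a parser", max_round=3)
managed_history = group_chat(agents, keyword_manager, "Build a parser", max_round=3)
print([m["name"] for m in rr_history])
print([m["name"] for m in managed_history])
```

Because the selector runs after every single message, the manager keeps much tighter control over the conversation than a manager that delegates a whole task at a time.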

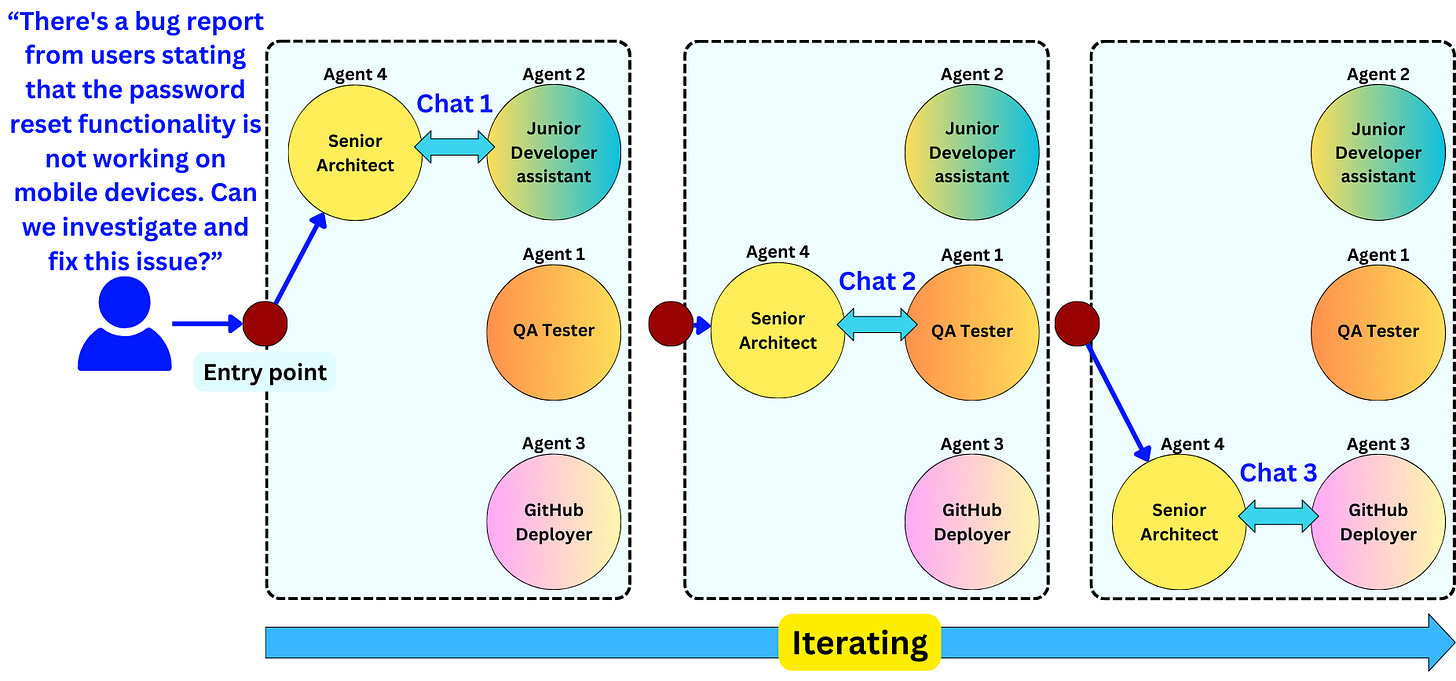

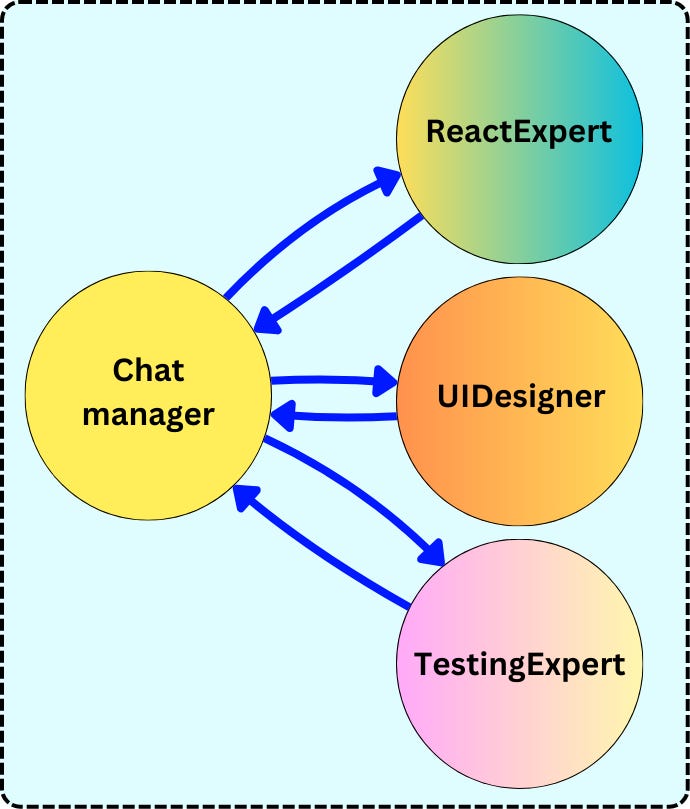

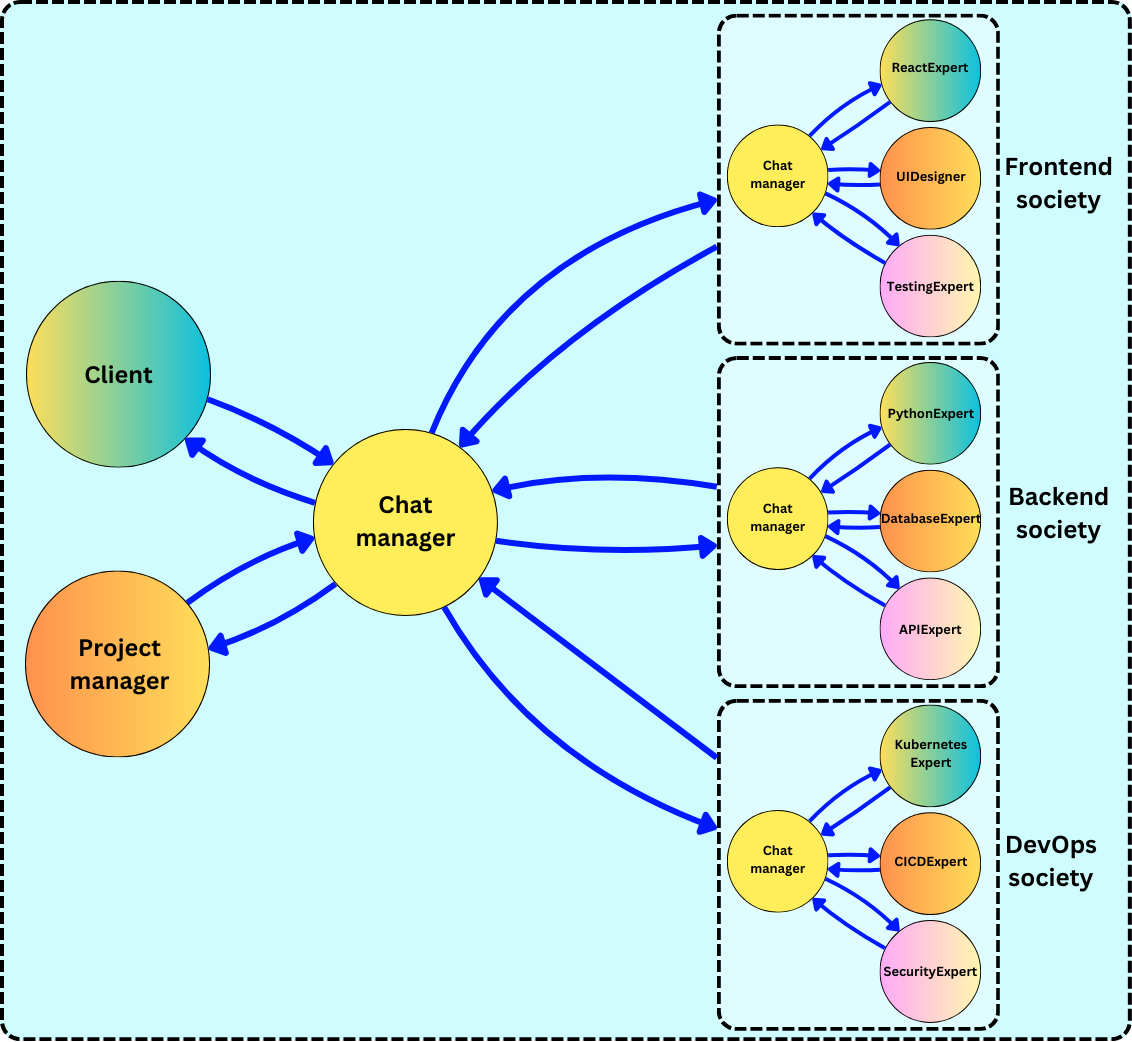

The most different pattern is the nested chat pattern, which allows us to scale the complexity to the next level! Each of the workflows described above can also be repackaged as one agent, allowing more complex tasks to be solved. As an example, let’s create multiple teams. Let’s have the frontend team:

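from autogen import ConversableAgent, GroupChat, GroupChatManager, UserProxyAgent

# `config` is assumed to be an LLM configuration dict such as the `llm_config` defined earlier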

# Frontend Development Team

react_expert = ConversableAgent(

name="ReactExpert",

system_message="Expert in React.js development. Can provide specific React component implementations and best practices.",

llm_config=config,

human_input_mode="NEVER"

)

ui_designer = ConversableAgent(

name="UIDesigner",

system_message="UI/UX designer who creates modern, accessible interfaces. Provides specific Tailwind CSS implementations.",

llm_config=config,

human_input_mode="NEVER"

)

testing_expert = ConversableAgent(

name="TestingExpert",

system_message="Frontend testing specialist focusing on Jest and React Testing Library.",

llm_config=config,

human_input_mode="NEVER"

)

frontend_team = GroupChat(

agents=[react_expert, ui_designer, testing_expert],

messages=[],

max_round=3,

send_introductions=True,

speaker_selection_method="round_robin",

)

frontend_manager = GroupChatManager(

groupchat=frontend_team, llm_config=config

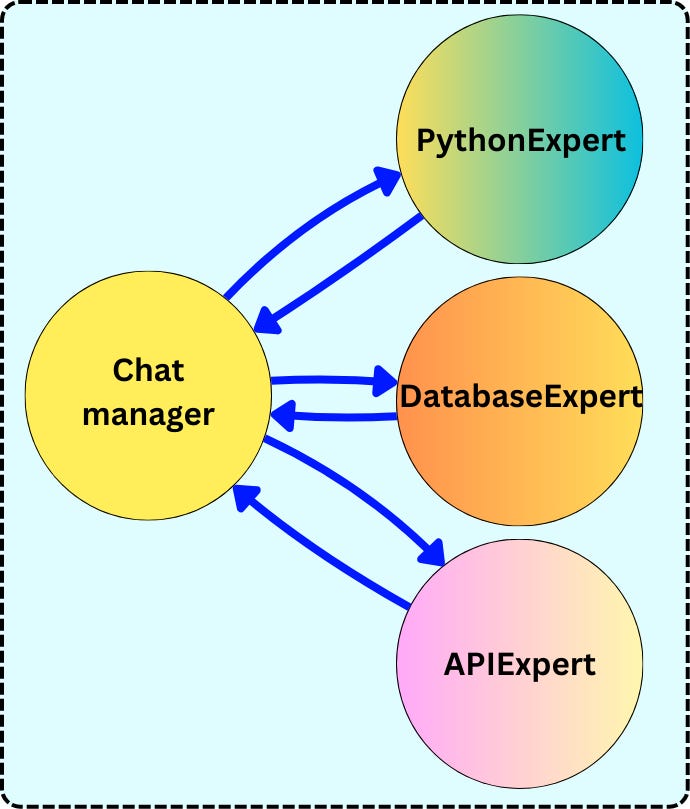

)Let’s have the backend team:

# Backend Development Team

python_expert = ConversableAgent(

name="PythonExpert",

system_message="Expert in Python backend development. Specializes in FastAPI and Django implementations.",

llm_config=config,

human_input_mode="NEVER"

)

db_expert = ConversableAgent(

name="DatabaseExpert",

system_message="Database architect specializing in PostgreSQL and MongoDB. Provides schema designs and optimization strategies.",

llm_config=config,

human_input_mode="NEVER"

)

api_expert = ConversableAgent(

name="APIExpert",

system_message="API design specialist focusing on RESTful and GraphQL APIs.",

llm_config=config,

human_input_mode="NEVER"

)

backend_team = GroupChat(

agents=[python_expert, db_expert, api_expert],

messages=[],

max_round=3,

send_introductions=True,

speaker_selection_method="round_robin",

)

backend_manager = GroupChatManager(

groupchat=backend_team, llm_config=config

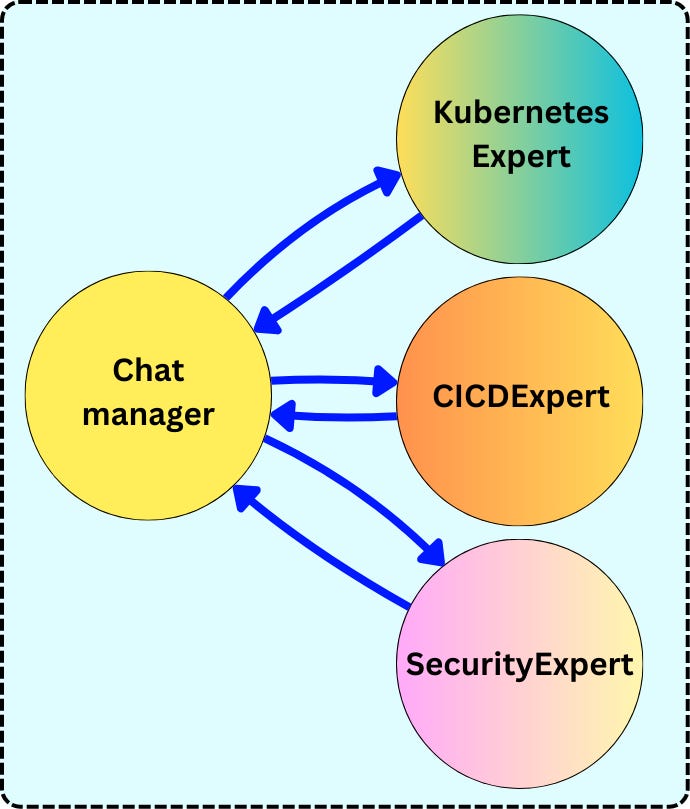

)And let’s have the DevOps team:

# DevOps Team

kubernetes_expert = ConversableAgent(

name="KubernetesExpert",

system_message="DevOps engineer specializing in Kubernetes deployments and container orchestration.",

llm_config=config,

human_input_mode="NEVER"

)

ci_cd_expert = ConversableAgent(

name="CICDExpert",

system_message="Expert in CI/CD pipelines using GitHub Actions and Jenkins.",

llm_config=config,

human_input_mode="NEVER"

)

security_expert = ConversableAgent(

name="SecurityExpert",

system_message="Security specialist focusing on DevSecOps practices.",

llm_config=config,

human_input_mode="NEVER"

)

devops_team = GroupChat(

agents=[kubernetes_expert, ci_cd_expert, security_expert],

messages=[],

max_round=3,

send_introductions=True,

speaker_selection_method="round_robin",

)

devops_manager = GroupChatManager(

groupchat=devops_team, llm_config=config

)

We can use the SocietyOfMindAgent wrapper to use the chat manager as an agent:

from autogen.agentchat.contrib.society_of_mind_agent import (

SocietyOfMindAgent

)

# Create Society of Mind agents with descriptions

frontend_society = SocietyOfMindAgent(

"frontend_society",

chat_manager=frontend_manager,

llm_config=config

)

frontend_society.description = "Frontend development team specializing in React, UI/UX design, and frontend testing. Coordinates modern web interface implementation."

backend_society = SocietyOfMindAgent(

"backend_society",

chat_manager=backend_manager,

llm_config=config

)

backend_society.description = "Backend development team managing server-side implementation, database architecture, and API design. Ensures robust and scalable backend solutions."

devops_society = SocietyOfMindAgent(

"devops_society",

chat_manager=devops_manager,

llm_config=config

)

devops_society.description = "DevOps team handling deployment, CI/CD, and security. Ensures smooth operation and maintenance of infrastructure."

Now, let’s add a client who will provide the actual instructions and a project manager who will decide what to do next. For the client, we use a user proxy, and we pause the conversation every time it is chosen so that the user can provide feedback:

client = UserProxyAgent(

name="client",

system_message="You are the client requesting development of a web application. You provide requirements and feedback.",

human_input_mode="ALWAYS", # Allow human input

code_execution_config=False

)

client.description = "Client who provides project requirements and feedback."

The project manager will mostly be in charge of structuring the project:

project_manager = ConversableAgent(

"project_manager",

system_message="""Project manager who coordinates between teams and client.

When technical questions arise, delegates to appropriate society:

- Frontend questions to frontend_society

- Backend questions to backend_society

- DevOps questions to devops_society""",

llm_config=config,

human_input_mode="NEVER"

)

project_manager.description = "Project manager coordinating between client and technical teams."

We now put everything together:

project_group = GroupChat(

agents=[

client,

project_manager,

frontend_society,

backend_society,

devops_society

],

messages=[],

max_round=10,

send_introductions=True,

allow_repeat_speaker=True,

)

project_manager_chat = GroupChatManager(

groupchat=project_group,

llm_config=config

)

The client can now make a request to the team of agents:

initial_request = """

We need to build a scalable e-commerce platform with the following requirements:

1. Modern React frontend with responsive design

2. FastAPI backend with PostgreSQL database

3. Kubernetes deployment with automated CI/CD

Please provide technical specifications and implementation plan.

"""

result = client.initiate_chat(

project_manager_chat,

message=initial_request,

summary_method="reflection_with_llm"

)

At the end of the process, the project manager provides a final output directed to the client, who can then provide feedback or accept the result:

result.chat_history[-1]['name']

> 'project_manager'

result.chat_history[-1]['content']

> While we have separate plans for each team, let's summarize the whole project development and deployment plan as follows.

**Phase 1: Design and Prototyping**

In this phase, our teams will work on creating the wireframes and high-fidelity mockups of the e-commerce platform. The feedback collected from stakeholders and users at this stage will inform necessary changes before the development begins.

**Phase 2: Development**

This phase will be executed in parallel by the Frontend, Backend, and DevOps teams.

- Frontend development will include the creation of reusable React components and layout using the design mockups as a reference. The frontend team will also ensure the website design is responsive and accessible.

- The Backend team will be responsible for setting up the FastAPI application and the PostgreSQL database. This includes creating database schemas, defining API routes, and ensuring proper authentication.

**Phase 3: Testing**

A thorough round of testing will follow the development phase. This will include unit testing, integration testing, accessibility testing, performance, and load testing. Bugs reported at this stage will be documented and fixed.

**Phase 4: Deployment**

The DevOps team will then automate the deployment process on Kubernetes. They'll also set up a CI/CD pipeline to automate the testing and deployment of the code. They will ensure the infrastructure is scalable and ready to handle production workloads.

**Phase 5: Maintenance and Monitoring**

After the product is deployed, it's crucial to monitor its performance and address any potential issues. The DevOps team will also handle scalability needs and possible security threats.

Finally, an iterative process of feedback, development, and deployment ensures continual improvement of the platform. The teams at each end (Frontend, Backend, DevOps) will communicate and collaborate efficiently at each step to ensure project success.

However cool this may look, it is actually pretty hard to take the complexity to the next level with those frameworks. For higher complexity, with more agents or parallel teams, you will need to build your own orchestration, which can be quite challenging, and those libraries don’t seem to make it easy.
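The nesting idea used in this example, where a whole team chat is repackaged as a single agent, can be sketched without any framework. This is only an illustration of the pattern, not the `SocietyOfMindAgent` implementation: `make_team_agent` and the canned experts are hypothetical, and the "summary" is a plain string join where a real system would call an LLM.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Agent = Callable[[List[Message]], str]


def make_team_agent(
    members: Dict[str, Agent],
    summarize: Callable[[List[Message]], str],
) -> Agent:
    """Wrap an inner team chat so that it looks like one agent from outside."""

    def team_agent(outer_history: List[Message]) -> str:
        # The inner chat starts from the latest outer message only.
        inner_history = [outer_history[-1]]
        for name, member in members.items():   # one round-robin pass
            inner_history.append({"name": name, "content": member(inner_history)})
        # Only the summary leaves the team; the inner messages stay private.
        return summarize(inner_history)

    return team_agent


def join_contributions(inner_history: List[Message]) -> str:
    """Stand-in for an LLM summary: concatenate the members' messages."""
    return " ".join(message["content"] for message in inner_history[1:])


frontend_society = make_team_agent(
    {
        "react_expert": lambda history: "Use React components.",
        "ui_designer": lambda history: "Style with Tailwind CSS.",
    },
    join_contributions,
)

request = [{"name": "client", "content": "Build an e-commerce frontend."}]
frontend_reply = frontend_society(request)
print(frontend_reply)
```

Since `frontend_society` has the same call signature as any other agent, it can be dropped into an outer group chat, which is exactly what makes the nesting composable.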

Human in the Loop

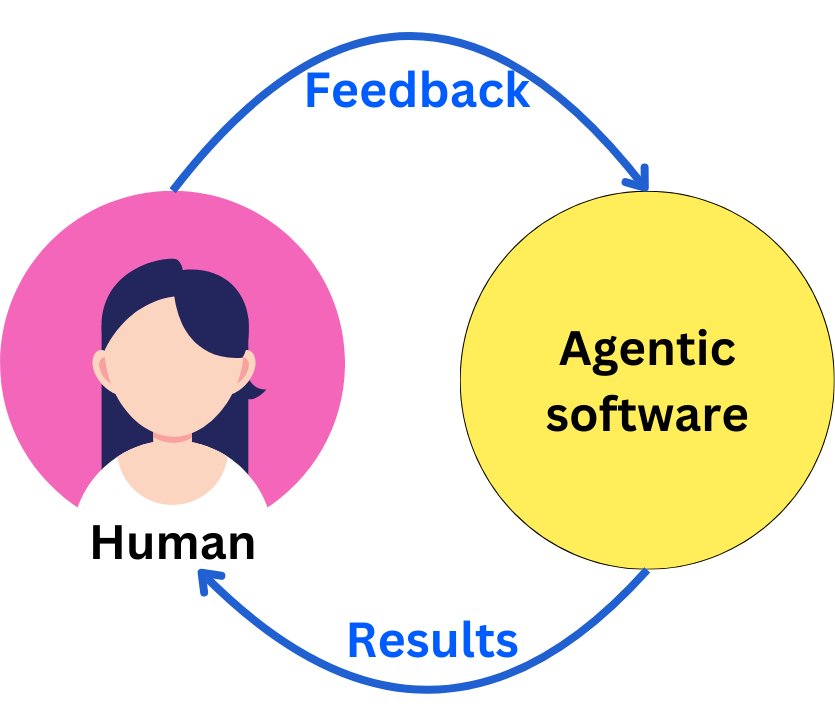

One of the most critical features of agentic AI is how easily human feedback can be included. The agentic design has the potential to bring the effects of LLM hallucinations to 0! LLMs are always going to hallucinate, so the point is not to ignore hallucinations but to find ways to mitigate their effects. In cases where hallucinations are more likely to occur, or when decisions should not be taken automatically, it is important to implement software with humans in the loop to validate the intermediary outputs. This allows humans to provide feedback to an autonomous system and bring it back on track if it starts to diverge from what is expected.
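At its simplest, a human-in-the-loop checkpoint is a loop that keeps revising an intermediary output until a person approves it. The sketch below is framework-agnostic and uses hypothetical names (`run_with_approval`, `generate_draft`); the human is simulated with scripted replies, where a real application might use `input` or a review UI.

```python
from typing import Callable, Optional

APPROVALS = {"ok", "approve", "yes"}


def run_with_approval(
    generate: Callable[[Optional[str]], str],
    ask_human: Callable[[str], str],
    max_revisions: int = 3,
) -> str:
    """Regenerate a draft until the human approves it or the budget runs out."""
    feedback: Optional[str] = None
    for _ in range(max_revisions):
        draft = generate(feedback)          # the agent's intermediary output
        verdict = ask_human(draft)          # blocking point: a human reviews it
        if verdict.strip().lower() in APPROVALS:
            return draft
        feedback = verdict                  # anything else is treated as feedback
    raise RuntimeError("No approved output within the revision budget")


def generate_draft(feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an agent producing or revising a draft."""
    if feedback is None:
        return "Draft v1"
    return f"Draft revised per: {feedback}"


# Scripted human replies: first a change request, then an approval.
replies = iter(["Add sources", "approve"])
result = run_with_approval(generate_draft, lambda draft: next(replies))
print(result)
```

The revision budget is a design choice: it prevents the system from looping forever on a reviewer who never approves, at the cost of failing loudly instead.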

Both CrewAI and Autogen are built to include humans in the loop. For CrewAI, it is just a flag so the human user can validate the output of a task:

task1 = Task(

description="""Conduct a comprehensive analysis of the latest advancements in AI in 2024.

Identify key trends, breakthrough technologies, and potential industry impacts.

Compile your findings in a detailed report.

Make sure to check with a human if the draft is good before finalizing your answer.""",

expected_output='A comprehensive full report on the latest AI advancements in 2024, leave nothing out',

agent=researcher,

human_input=True

)

task2 = Task(

description="""Using the insights from the researcher\'s report, develop an engaging blog post that highlights the most significant AI advancements.

Your post should be informative yet accessible, catering to a tech-savvy audience.

Aim for a narrative that captures the essence of these breakthroughs and their implications for the future.""",

expected_output='A compelling 3 paragraphs blog post formatted as markdown about the latest AI advancements in 2024',

agent=writer,

human_input=True

)

crew = Crew(

agents=[researcher, writer],

tasks=[task1, task2],

verbose=True,

memory=True,

planning=True

)

result = crew.kickoff()

This will interrupt the flow of the application to include the user’s feedback.

Autogen provides a bit more flexibility as the flow can be interrupted for each message or when the agent concludes it has finished a task:

from autogen import AssistantAgent, GroupChat, GroupChatManager, UserProxyAgent

researcher = AssistantAgent(

name="researcher",

system_message="""You are an expert AI researcher focused on analyzing and synthesizing information about AI advancements.

Your task is to create comprehensive, well-researched reports about AI technology and its implications.""",

llm_config=config

)

writer = AssistantAgent(

name="writer",

system_message="""You are a skilled tech writer who specializes in creating engaging blog posts about technology.

You excel at making complex technical concepts accessible to a tech-savvy audience while maintaining accuracy.""",

llm_config=config

)

user_proxy = UserProxyAgent(

name="user_proxy",

system_message="A proxy for the human user to interact with the agents and review their work.",

human_input_mode="TERMINATE",

code_execution_config=False

)

groupchat = GroupChat(

agents=[user_proxy, researcher, writer],

messages=[],

max_round=15

)

manager = GroupChatManager(groupchat=groupchat, llm_config=config)

# Start the research task

user_proxy.initiate_chat(manager,

message="""Please conduct a comprehensive analysis of AI advancements in 2024:

1. Researcher: Create a detailed report on key trends, breakthrough technologies, and industry impacts

2. Get human approval on the draft

3. Writer: Transform the approved research into an engaging 3-paragraph blog post

4. Get final human approval on the blog post

Let's proceed step by step and wait for human approval at each stage."""

)
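To make the interruption policies concrete, here is a minimal plain-Python sketch of the decision that the human_input_mode setting controls. It is not Autogen's implementation: the function name `should_ask_human` and its parameters are hypothetical, and the behavior of each mode ("ALWAYS", "TERMINATE", "NEVER") reflects my understanding of the documented semantics.

```python
def should_ask_human(mode: str, is_termination: bool, auto_reply_limit_reached: bool = False) -> bool:
    """Decide whether to pause and prompt the human before the proxy replies.

    Hypothetical helper mirroring the three human_input_mode values.
    """
    if mode == "ALWAYS":
        # Prompt the human for every incoming message.
        return True
    if mode == "TERMINATE":
        # Prompt only when the conversation is about to end.
        return is_termination or auto_reply_limit_reached
    if mode == "NEVER":
        # Fully autonomous: the human is never consulted.
        return False
    raise ValueError(f"Unknown human_input_mode: {mode!r}")


# A regular message followed by a termination message, under each mode.
for mode in ("ALWAYS", "TERMINATE", "NEVER"):
    decisions = [should_ask_human(mode, is_termination=flag) for flag in (False, True)]
    print(mode, decisions)
```

With "TERMINATE", as in the example above, the human is only consulted when an agent signals that it is done, which is why the approval steps are spelled out in the initial message.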

Environment Integration

By default, Autogen agents can execute code, and no other tool is available. This somewhat goes against what most packages tend to do. CrewAI, for example, ships a list of integrated tools, whereas Autogen expects users to define custom tools. This may seem a surprising approach, but I find it quite welcome!

Bundling integrations is a common problem in packages like LangChain! The goal of the package became to simplify the integration details of every tool you might want to use. In pursuing that goal, LangChain became bloated with integration dependencies that are unnecessary for most projects. Most packages followed this trend, including CrewAI. In that respect, Autogen is pretty lean!

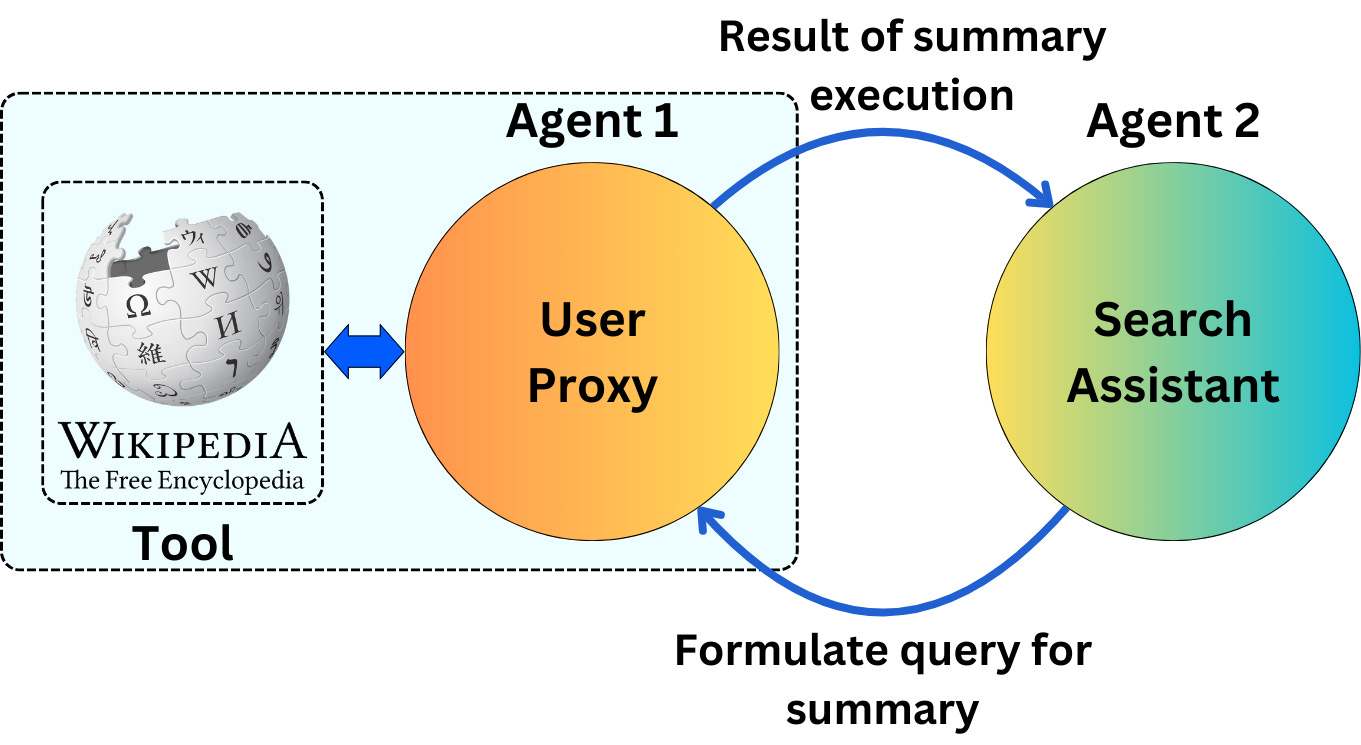

Personally, I am more and more in favor of very transparent integrations, and with Autogen, I am forced to customize all the functionalities of a specific tool. For example, imagine I want to augment an agent with summaries from Wikipedia.

Let’s first define two agents:

from autogen import ConversableAgent

# Let's first define the assistant agent that suggests tool calls.

assistant = ConversableAgent(

name="Assistant",

system_message="You are a helpful AI assistant. "

"You can help with simple wikipedia search. "

"Return 'TERMINATE' when the task is done.",

llm_config=config,

)

# The user proxy agent is used for interacting with the assistant agent

# and executes tool calls.

user_proxy = ConversableAgent(

name="User",

llm_config=False,

is_termination_msg=lambda msg: msg.get("content") is not None and "TERMINATE" in msg["content"],

human_input_mode="NEVER",

)

Let’s create a simple function to generate summaries from Wikipedia pages:

import wikipedia

def wikipedia_summary(query: str) -> str:
    """Return a summary of the Wikipedia page for the given query."""
    return wikipedia.summary(query)

And let’s bind it to the agents. The assistant needs to know what the tool is for and how to formulate queries:

assistant.register_for_llm(

name="wikipedia_summary",

description="A simple summary of a wikipedia page"

)(wikipedia_summary)

And the user proxy needs access to the tool itself to execute the queries:

user_proxy.register_for_execution(

name="wikipedia_summary"

)(wikipedia_summary)

Now we can ask the agents questions and get answers backed by Wikipedia summaries:

chat_result = user_proxy.initiate_chat(

assistant,

message="What are agents in AI?"

)

User (to Assistant):

What are agents in AI?

--------------------------------------------------------------------------------

Assistant (to User):

***** Suggested tool call (call_RjFqVUZYN8T9jmdPxV2ANsaH): wikipedia_summary *****

Arguments:

{ "query": "Artificial intelligence agent" }

**********************************************************************************

User (to Assistant):

***** Response from calling tool (call_RjFqVUZYN8T9jmdPxV2ANsaH) *****

In intelligence and artificial intelligence, an intelligent agent (IA) is an agent that perceives its environment, takes actions autonomously in order to achieve goals, and may improve its performance with learning or acquiring knowledge. An intelligent agent may be simple or complex: A thermostat or other control system is considered an example of an intelligent agent, as is a human being, as is any system that meets the definition, such as a firm, a state, or a biome. Leading AI textbooks define "artificial intelligence" as the "study and design of intelligent agents", a definition that considers goal-directed behavior to be the essence of intelligence. Goal-directed agents are also described using a term borrowed from economics, "rational agent". An agent has an "objective function" that encapsulates all the IA's goals. Such an agent is designed to create and execute whatever plan will, upon completion, maximize the expected value of the objective function. For example, a reinforcement learning agent has a "reward function" that allows the programmers to shape the IA's desired behavior, and an evolutionary algorithm's behavior is shaped by a "fitness function". Intelligent agents in artificial intelligence are closely related to agents in economics, and versions of the intelligent agent paradigm are studied in cognitive science, ethics, and the philosophy of practical reason, as well as in many interdisciplinary socio-cognitive modeling and computer social simulations.

Intelligent agents are often described schematically as an abstract functional system similar to a computer program. Abstract descriptions of intelligent agents are called abstract intelligent agents (AIA) to distinguish them from their real-world implementations. An autonomous intelligent agent is designed to function in the absence of human intervention. Intelligent agents are also closely related to software agents. An autonomous computer program that carries out tasks on behalf of users.

**********************************************************************

... (FEW ROUNDS)

Assistant (to User):

TERMINATE

Note that it is very likely that the way I defined the Wikipedia tool is misaligned with what I actually want the agents to provide, and I am free to adjust the function in the way I see fit.
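As an illustration of that freedom, here is a minimal sketch of one way the tool could be adjusted: failures are returned as text so the LLM can reformulate its query, and the summary length is capped. The names `build_summary_tool`, `fetch`, and `max_chars` are hypothetical, and a stand-in `fake_fetch` replaces wikipedia.summary so the sketch runs offline.

```python
from typing import Callable


def build_summary_tool(fetch: Callable[[str], str], max_chars: int = 1500) -> Callable[[str], str]:
    """Wrap a summary-fetching callable with explicit failure and length handling."""

    def summary_tool(query: str) -> str:
        try:
            text = fetch(query)
        except Exception as exc:  # e.g. an ambiguous or missing page
            # Return the error as text so the LLM can reformulate its query.
            return f"Lookup failed for {query!r}: {exc}"
        # Cap the length so the tool response does not flood the context window.
        if len(text) > max_chars:
            return text[:max_chars].rstrip() + " ..."
        return text

    return summary_tool


def fake_fetch(query: str) -> str:
    """Offline stand-in for wikipedia.summary (assumption for this sketch)."""
    if query == "Agent":
        raise LookupError("ambiguous title")
    return "An intelligent agent perceives its environment and acts to achieve goals. " * 5


tool = build_summary_tool(fake_fetch, max_chars=80)
print(tool("Intelligent agent"))
print(tool("Agent"))
```

In a real setup, wikipedia.summary would presumably be passed as `fetch`, and the returned function registered on both agents in the same way as before.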

CrewAI provides the same functionality for defining custom tools, but it also provides useful functions for querying the local file system and the web. For example, here is how we can augment an agent to read the content of websites:

from crewai import Agent, Task, Crew

from crewai_tools import WebsiteSearchTool

web_rag_tool = WebsiteSearchTool()

# Create agents

researcher = Agent(

role='Market Research Analyst',

goal='Provide up-to-date market analysis of the AI industry',

backstory='An expert analyst with a keen eye for market trends.',

tools=[web_rag_tool],

verbose=True

)

writer = Agent(

role='Content Writer',

goal='Craft engaging blog posts about the AI industry',

backstory='A skilled writer with a passion for technology.',

verbose=True

)

# Define tasks

research = Task(

description='Research the latest trends in the AI industry and provide a summary.',

expected_output='A summary of the top 3 trending developments in the AI industry with a unique perspective on their significance.',

agent=researcher

)

write = Task(

description='Write an engaging blog post about the AI industry, based on the research analyst’s summary. Draw inspiration from the latest blog posts in the directory.',

expected_output='A 4-paragraph blog post with engaging, informative, and accessible content, avoiding complex jargon.',

agent=writer,

)

# Assemble a crew with planning enabled

crew = Crew(

agents=[researcher, writer],

tasks=[research, write],

verbose=True,

planning=True,

)

# Execute tasks

crew.kickoff()

At some point in the process, the agent decides to query the TechCrunch website to get more information:

...

# Agent: Market Research Analyst

## Thought: I need to gather information on the latest trends in the AI industry from a reputable website. I will choose TechCrunch for this research, as it is known for its coverage of technology trends.

## Using tool: Search in a specific website

## Tool Input:

"{\"search_query\": \"latest trends in AI industry\", \"website\": \"https://techcrunch.com\"}"

## Tool Output:

Relevant Content:

people will pay thousands of dollars a year for guaranteed restaurant reservations Kyle Wiggers 7 hours ago AI See what Google’s Project Astra AR glasses can do (for a select few beta testers) The glasses are part of Google’s long-term plan to one day have hardware with augmented reality and multimodal AI capabilities. December 13, 2024 More From: AI See what Google’s Project Astra AR glasses can do (for ...

This can be handy, but it comes with a lack of flexibility in the way we define those tool calls. Here, the tool is a RAG tool for websites. There are a lot of little details of the RAG process that we have no control over, and that may become more of a hassle in the long term.
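To illustrate the kind of details a prepackaged RAG tool decides for us, here is a deliberately naive retrieval sketch where the chunk size and the scoring rule are explicit parameters. This is not how WebsiteSearchTool works internally; the functions `chunk_text` and `top_chunks` and the word-overlap score are assumptions made purely for illustration.

```python
def chunk_text(text: str, chunk_size: int = 40) -> list[str]:
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def top_chunks(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Rank chunks by the number of distinct query words they contain."""
    query_words = set(query.lower().split())

    def score(chunk: str) -> int:
        return len(query_words & set(chunk.lower().split()))

    # sorted is stable, so chunks with equal scores keep their original order.
    return sorted(chunks, key=score, reverse=True)[:k]


page = (
    "Restaurant reservations are now sold as subscriptions. "
    "New AR glasses combine augmented reality with multimodal AI models. "
    "Analysts expect AI agents to reshape enterprise software."
)
chunks = chunk_text(page, chunk_size=10)
print(top_chunks("multimodal AI glasses", chunks, k=1))
```

Even in this toy version, changing `chunk_size` or the scoring rule changes which passage reaches the agent, and those are exactly the knobs that stay hidden behind an integrated tool.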

Deploying in Production environments: state management, tracing, monitoring

When it comes to productionalization, those libraries seem to have limited abilities. To deploy agentic software, we need to be able to capture the current state of the workflow. This is helpful if the software is user-specific, for example, and needs to remember previous interactions with the current user. In Autogen, capturing the state can be tricky as it has to be done manually:

import json

# Save history

chat_history = assistant.chat_messages[user_proxy]

with open('chat_history.json', 'w') as f:
    json.dump(chat_history, f)

# Load history later

with open('chat_history.json', 'r') as f:
    assistant.chat_messages[user_proxy] = json.load(f)

In CrewAI, it is a bit simpler as we can directly connect to a database to log the chat history, but the documentation is very thin on that aspect:

from crewai.memory import LongTermMemory

from crewai.memory.storage.ltm_sqlite_storage import LTMSQLiteStorage

from crewai import Crew, Process

# Assemble your crew with memory capabilities

my_crew = Crew(

agents=[researcher, writer],

tasks=[research, write],

process=Process.sequential,

memory=True,

long_term_memory=LongTermMemory(

storage=LTMSQLiteStorage(

db_path="/my_data_dir/my_crew1/long_term_memory_storage.db"

)

),

verbose=True,

)

Another critical functionality for running multiple workflows at the same time (if we have multiple users, for example) is the ability to run jobs asynchronously. As far as I understand, Autogen does not provide this functionality, so we need to implement it ourselves. CrewAI, on the other hand, provides an asynchronous version of the kick-off function, which makes it easier:

# synchronous

crew.kickoff()

# asynchronous (must be awaited inside an async function)

await crew.kickoff_async()

When it comes to tracing and monitoring, it seems we are bound to a limited number of choices. CrewAI provides integration with AgentOps, LangTrace, and OpenLIT, while Autogen only seems to integrate with AgentOps. So far, it seems that those packages do not provide much support for productionalization, and it may end up being challenging to implement production-level software, especially with Autogen!
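When a framework call is blocking and state has to be captured by hand, a thin layer of our own can cover per-user state and concurrency. The sketch below is one possible approach using only the standard library: `run_workflow` is a hypothetical placeholder for a blocking agent conversation, and the one-JSON-file-per-user layout is an assumption.

```python
import asyncio
import json
import tempfile
from pathlib import Path


def load_history(path: Path) -> list[dict]:
    """Return the saved messages for a user, or an empty list on first contact."""
    return json.loads(path.read_text()) if path.exists() else []


def save_history(path: Path, messages: list[dict]) -> None:
    path.write_text(json.dumps(messages))


def run_workflow(history: list[dict], message: str) -> list[dict]:
    """Hypothetical blocking workflow standing in for a real agent conversation."""
    reply = {"role": "assistant", "content": f"echo: {message}"}
    return history + [{"role": "user", "content": message}, reply]


async def handle_user(state_dir: Path, user_id: str, message: str) -> list[dict]:
    path = state_dir / f"{user_id}.json"
    history = load_history(path)
    # Run the blocking call in a worker thread so other users are not blocked.
    history = await asyncio.to_thread(run_workflow, history, message)
    save_history(path, history)
    return history


async def main(state_dir: Path) -> list[list[dict]]:
    # Two users served concurrently, each with an isolated state file.
    return await asyncio.gather(
        handle_user(state_dir, "alice", "hello"),
        handle_user(state_dir, "bob", "hi"),
    )


with tempfile.TemporaryDirectory() as tmp:
    state_dir = Path(tmp)
    asyncio.run(main(state_dir))
    # A second call for the same user picks up the stored history.
    second = asyncio.run(handle_user(state_dir, "alice", "again"))
    print(len(second))
```

Note that this sketch does not guard against two simultaneous requests from the same user, which would race on the same file; a production version would need locking or a proper database.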

The problem with autonomous agentic systems

The idea of agents is quite a seductive one! We break down specific problems into the underlying subtasks needed to solve them, and we assign agents to take care of each of them. The multi-agent systems are usually designed by assigning roles to those agents that resemble the ones we see in corporate organizations.

The problem with that is it leads to over-engineered systems. Frameworks like CrewAI and Autogen provide a fully autonomous agentic system. That is great, but that may not be what we need! Being able to rely on LLMs to make some of the simple decisions at some nodes of the application flow is useful, but why do we need the full software to be agentic?

Agentic frameworks push the whole application design to be organized into agents, tasks, and teams of collaborating agents. That is a very opinionated way to design software! We often have to unnecessarily rethink simple application flows into an agentic one. LLM-based agents are risky and should be used with extreme care, so sprinkling the codebase with uncontrollable LLMs making decisions is a recipe for disaster!

Those frameworks are also very opaque and limited in the way agents actually interact with each other. The documentation isn't always transparent on that side, and you can find yourself spending hours in their GitHub repos trying to figure out how one task is being handed off from one agent to the next. Furthermore, they seem to be limited when it comes to building production-level software.

As amazing as some demos can be, I would not advise using autonomous agentic frameworks for production purposes. Personally, I recommend using event-based stateful graph orchestration tools like LangGraph, Burr, LlamaIndex Workflows, and even Haystack for anything agentic, as they are much more transparent!
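To make the contrast concrete, here is a minimal sketch of the event-based stateful graph idea in plain Python. It does not use the API of LangGraph or of any other tool named above; the node names, the `run_graph` helper, and the `fake_llm_router` stub (standing in for the single LLM decision in the flow) are all hypothetical.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], Optional[str]]  # a node updates the state and names the next node


def fake_llm_router(question: str) -> str:
    """Stub for the one LLM decision in the flow: does the question need a search?"""
    return "search" if "latest" in question.lower() else "answer"


def route(state: State) -> str:
    state["trace"].append("route")
    return fake_llm_router(state["question"])


def search(state: State) -> str:
    state["trace"].append("search")
    state["context"] = "retrieved documents"
    return "answer"


def answer(state: State) -> None:
    state["trace"].append("answer")
    state["result"] = f"answer using {state.get('context', 'no context')}"
    return None  # returning None ends the run


def run_graph(nodes: dict[str, Node], start: str, state: State, max_steps: int = 10) -> State:
    current: Optional[str] = start
    steps = 0
    while current is not None:
        if steps >= max_steps:  # hard cap so a bad transition cannot loop forever
            raise RuntimeError("max_steps exceeded")
        current = nodes[current](state)
        steps += 1
    return state


nodes = {"route": route, "search": search, "answer": answer}
final = run_graph(nodes, "route", {"question": "What are the latest AI trends?", "trace": []})
print(final["trace"], final["result"])
```

Every transition is an explicit return value and the whole state lives in one dictionary, so the flow can be logged, persisted, or resumed at any node, while the LLM is confined to the single routing decision.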