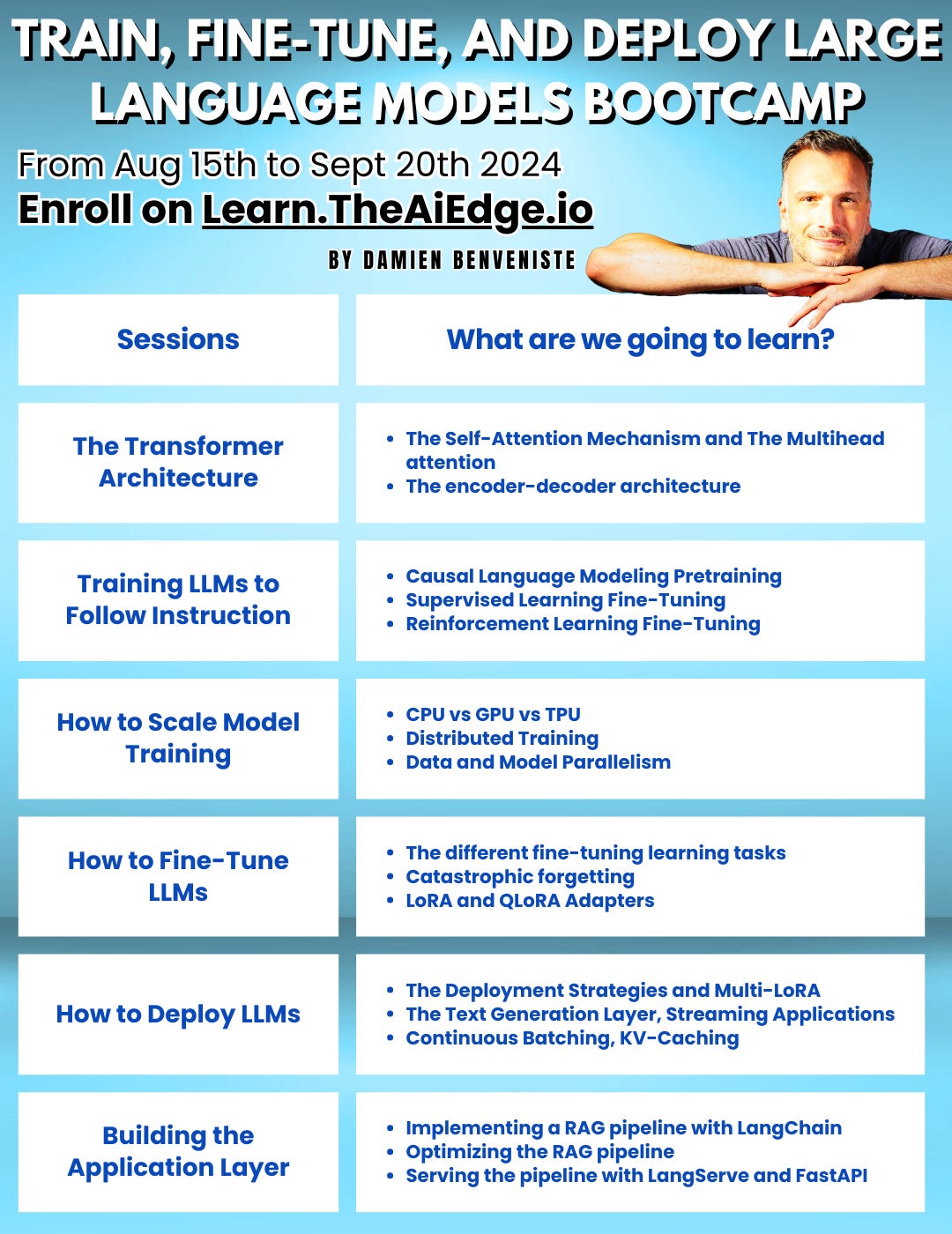

I am glad to introduce the Train, Fine-Tune, and Deploy Large Language Models Bootcamp. The BootCamp will start on August 15th, 2024. This is going to be 6 weeks of intense hands-on learning to become an expert in building LLM applications.

The early-bird pricing (25% off) will last until next Monday. Additionally, members of the AiEdge Newsletter get an additional 20% discount by applying the following coupon: NEWSLETTER. Make sure to register before it is too late:

You can get an additional 20% off if you enroll in the ML fundamentals BootCamp as well:

Who is this Bootcamp for?

This Bootcamp is meant for Engineers with experience in Data Science or Machine Learning Engineering who want to upgrade their skills in Large Language Modeling.

Be ready to learn!

This Bootcamp is not meant to be easy! Be ready to spend time and effort in learning the subject so that the certificate means something.

I won't promise you that you will get a job after graduating (because it depends on you), but I can promise you that your understanding of LLMs will be at a completely different level!

Prerequisites

Prior experience or knowledge of Machine Learning - at least 6 months. I expect people to feel comfortable with the concepts developed in the Machine Learning Fundamental Bootcamp.

Proficiency in Python - at least 1 year experience.

What is included!

40+ hours of recorded lectures

6 hands-on projects

Homework support

Certification upon graduation

Access to our online community

Lifetime access to course content

The Schedule

We are going to meet every Thursday and Friday between 9 am and 12 pm PST starting August 15th.

6 Weeks of Intense Learning!

The Transformer Architecture (1 week)

The Transformer is the fundamental Neural Network architecture that enabled the evolution of Large Language Models as we know them now.

The Self-Attention Mechanism

The Multihead attention

The encoder-decoder architecture

The position embedding

The layer-normalization

The position-wise feed-forward network

The cross-attention layer

The language modeling head

Training LLMs to Follow Instruction (1 week)

GhatGPT, Claude, or Gemini are LLMs trained to follow human instructions. We are going to learn how those are trained from scratch:

The Causal Language Modeling Pretraining Step

The Supervised Learning Fine-Tuning Step

The Reinforcement Learning Fine-Tuning Step

Implementing those Steps with HuggingFace

How to Scale Model Training (1 week)

More than ever, we need efficient hardware to accelerate the training process. We are going to explore the strategy of distributing training computations across multiple GPUs for different parallelism strategies:

CPU vs GPU vs TPU

The GPU Architecture

Distributed Training

Data Parallelism

Model Parallelism

Zero Redundancy Optimizer Strategy

How to Fine-Tune LLMs (1 week)

Fine-tuning a model means we continue the training on a specialized dataset for a specialized learning task. We are going to look at the different strategies to fine-tune LLMs:

The different fine-tuning learning tasks

Catastrophic forgetting

LoRA Adapters

QLoRA

How to Deploy LLMs (1 week)

The most important part of a machine learning model development is the deployment! A model that is not in production is a model that is costing money instead of generating money for the company. We are going to explore the different strategies to deploy LLMs:

The Deployment Strategies

Multi-LoRA

The Text Generation Layer

Streaming Applications

Continuous Batching

KV-Caching

The Paged-Attention and vLLM

Building the Application Layer (1 week)

A deployed LLM on its own is not really useful. We are going to look at how we can build an agentic application on top of the model with LangChain:

Implementing a Retriever Augmented Generation (RAG) pipeline with LangChain

Optimizing the RAG pipeline

Serving the pipeline with LangServe and FastAPI

Don’t hesitate to send me an email is you have more questions: damienb@theaiedge.io.

Can I still get the early bird discount, I was unfortunately in holidays and missed it.