I find the Vision Transformer to be quite an interesting model! The self-attention mechanism and the Transformer architecture were designed to address some of the flaws of earlier natural language processing models. With the Vision Transformer, a few scientists at Google realized they could feed the same architecture images instead of text and use it as a computer vision model. The model they built achieved state-of-the-art results on image classification and other computer vision tasks! Let me show you how it works!

The Vision Transformer was developed at Google, and it is a good example of how readily the Transformer architecture can be adapted to other data types.

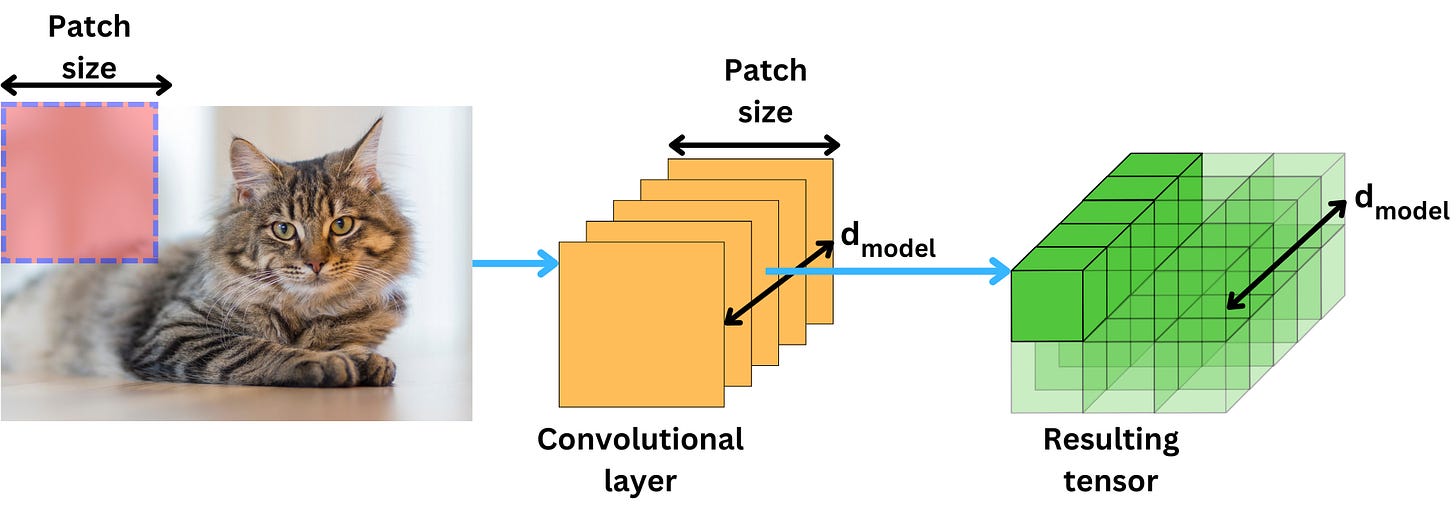

The idea is to break the input image down into small, non-overlapping patches and transform each patch into an input vector for the Transformer model. A simple linear transformation is enough: it maps each flattened patch to a vector of the model's hidden size.
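To make the patching step concrete, here is a minimal NumPy sketch. The image size, patch size, hidden size, and the names `patchify`, `W`, and `b` are illustrative assumptions of mine, and the weights are random rather than trained.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    # (gh, P, gw, P, C) -> (gh, gw, P, P, C) -> (gh * gw, P * P * C)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32, 3))    # toy image (assumed size)
patch_size, hidden_dim = 8, 64              # assumed hyperparameters

patches = patchify(image, patch_size)       # (16, 192): 16 patches of 8*8*3 values

# Linear projection shared by all patches (random here, learned in practice).
W = rng.standard_normal((patch_size * patch_size * 3, hidden_dim)) * 0.02
b = np.zeros(hidden_dim)
tokens = patches @ W + b                    # (16, 64): one vector per patch
```

Each row of `tokens` is the input vector for one patch, ordered row by row across the image.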

If we use a convolutional layer as the linear transformation, we can process the whole image in one shot. The layer just needs the right dimensions: a kernel size and stride equal to the patch size, and as many output channels as the model's hidden size.
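Here is a small NumPy sketch of why that works. I wrote a deliberately naive convolution (the helper `strided_conv2d` and all sizes are my own assumptions, not code from the original model) and checked that, with kernel size and stride both equal to the patch size, it gives the same vectors as flattening a patch and multiplying it by a weight matrix.

```python
import numpy as np

def strided_conv2d(image, kernels, bias, stride):
    """Naive 2D convolution without padding.

    image:   (H, W, C)
    kernels: (K, K, C, D), i.e. D filters of size K x K x C
    returns: (out_h, out_w, D)
    """
    h, w, _ = image.shape
    k = kernels.shape[0]
    out_h = (h - k) // stride + 1
    out_w = (w - k) // stride + 1
    out = np.empty((out_h, out_w, kernels.shape[-1]))
    for i in range(out_h):
        for j in range(out_w):
            window = image[i * stride:i * stride + k, j * stride:j * stride + k, :]
            # Contract the (K, K, C) window against every filter at once.
            out[i, j] = np.tensordot(window, kernels, axes=3) + bias
    return out

rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32, 3))    # toy image (assumed size)
patch_size, hidden_dim = 8, 64              # assumed hyperparameters
kernels = rng.standard_normal((patch_size, patch_size, 3, hidden_dim)) * 0.02
bias = rng.standard_normal(hidden_dim) * 0.02

# Kernel size == stride == patch size: one output position per patch.
feature_map = strided_conv2d(image, kernels, bias, stride=patch_size)  # (4, 4, 64)
tokens = feature_map.reshape(-1, hidden_dim)                           # (16, 64)

# Same result as flattening a patch and applying a linear layer.
weight = kernels.reshape(-1, hidden_dim)                               # (192, 64)
first_patch = image[:patch_size, :patch_size, :].reshape(-1)
assert np.allclose(first_patch @ weight + bias, tokens[0])
```

In a deep learning framework you would use its built-in convolution layer instead of the Python loops; the loops here are only meant to make the equivalence visible.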

Once we have transformed the image into vectors, the process is very similar to the one we saw with the typical Transformer encoder. We use a position embedding to capture the position of each patch, and we add it to the vectors coming from the convolutional layer. If we want to perform a classification task, it is typical to also add an extra learnable vector, the classification token, at the start of the sequence.
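As a rough sketch of this step, assuming the ordering used in the original ViT paper (prepend the classification token first, then add one position embedding per position, including the token's), it could look like this. The sizes and variable names are my own, and `patch_tokens` is a random stand-in for the projected patches.

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, hidden_dim = 16, 64            # assumed sizes

# Stand-in for the vectors produced by the patch projection.
patch_tokens = rng.standard_normal((num_patches, hidden_dim))

# Learnable parameters (initialized here, trained in practice).
cls_token = np.zeros((1, hidden_dim))
pos_embedding = rng.standard_normal((num_patches + 1, hidden_dim)) * 0.02

# Prepend the classification token, then add the position embeddings.
x = np.concatenate([cls_token, patch_tokens], axis=0)   # (17, 64)
x = x + pos_embedding                                    # (17, 64)
```

The sequence is now one element longer than the number of patches, and position 0 belongs to the classification token.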

After that, we can feed those resulting vectors to the first encoder block.

We stack as many encoder blocks as we need, each one taking the output of the previous block as its input. In the end, we obtain the encoder output.
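To show what stacking encoder blocks means in practice, here is a simplified NumPy sketch. It assumes the pre-norm layout used by ViT (layer norm, then multi-head self-attention or an MLP, each wrapped in a residual connection). To keep it short I left out biases, dropout, and the learnable scale and shift of layer norm; the helper names, sizes, and depth are all illustrative assumptions.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def init_block(rng, dim, mlp_dim, scale=0.02):
    return {
        "w_qkv": rng.standard_normal((dim, 3 * dim)) * scale,
        "w_out": rng.standard_normal((dim, dim)) * scale,
        "w_mlp1": rng.standard_normal((dim, mlp_dim)) * scale,
        "w_mlp2": rng.standard_normal((mlp_dim, dim)) * scale,
    }

def self_attention(x, p, num_heads):
    n, dim = x.shape
    head_dim = dim // num_heads
    q, k, v = np.split(x @ p["w_qkv"], 3, axis=-1)          # each (n, dim)
    # (n, dim) -> (heads, n, head_dim)
    q, k, v = (t.reshape(n, num_heads, head_dim).transpose(1, 0, 2)
               for t in (q, k, v))
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)   # (heads, n, n)
    out = softmax(scores) @ v                               # (heads, n, head_dim)
    out = out.transpose(1, 0, 2).reshape(n, dim)            # merge the heads
    return out @ p["w_out"]

def encoder_block(x, p, num_heads):
    x = x + self_attention(layer_norm(x), p, num_heads)     # attention + residual
    x = x + gelu(layer_norm(x) @ p["w_mlp1"]) @ p["w_mlp2"] # MLP + residual
    return x

rng = np.random.default_rng(0)
seq_len, dim, mlp_dim, num_heads, depth = 17, 64, 128, 4, 3  # assumed sizes
blocks = [init_block(rng, dim, mlp_dim) for _ in range(depth)]

x = rng.standard_normal((seq_len, dim))     # stand-in for the embedded sequence
for p in blocks:                            # output of one block feeds the next
    x = encoder_block(x, p, num_heads)
encoder_output = x                          # (17, 64), same shape as the input
```

Because every block maps a `(seq_len, dim)` array to another `(seq_len, dim)` array, the depth is just the length of the `blocks` list.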

A linear layer is used as the prediction head: it projects from the hidden-state dimension to the prediction dimension, which for classification is the number of classes. In that case, we only use the first output vector, the one that corresponds to the classification token we added at the beginning of the sequence.
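Here is a minimal sketch of such a head in NumPy. The number of classes, the weights `W_head` and `b_head`, and the random `encoder_output` are placeholders I made up for illustration; the softmax at the end is just one common way to turn the logits into class probabilities.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, hidden_dim, num_classes = 17, 64, 10   # assumed sizes

# Stand-in for the encoder output: one hidden state per token.
encoder_output = rng.standard_normal((seq_len, hidden_dim))

# Linear prediction head (random here, learned in practice).
W_head = rng.standard_normal((hidden_dim, num_classes)) * 0.02
b_head = np.zeros(num_classes)

# Only the first hidden state (the classification token) is used.
cls_state = encoder_output[0]                    # (64,)
logits = cls_state @ W_head + b_head             # (10,)

# Softmax over the logits to get class probabilities.
probs = np.exp(logits - logits.max())
probs = probs / probs.sum()
predicted_class = int(np.argmax(probs))
```

The other hidden states are simply ignored by the classification head.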

Watch the video for more information!
