Why Deployment is the most important part of Machine Learning

The patterns, the architectures, and testing in production

Today we dig into the most critical piece of Machine Learning: exposing it to users! Without it, Machine Learning is just some math and algorithms in a textbook. We are going to look at:

The different deployment patterns

How to test your model in production

The different inference pipeline architectures

I think the 2010s marked the advent of Data Science / Machine Learning and its inflated hype. Tons of Data Science teams were built out of PhDs in statistics and other mathematical fields who couldn't deploy a model even if their lives depended on it! Teams got laid off for not being able to deliver anything. Projects dragged on for years because they had no deployment strategy! Projects got canceled after years of development because, once deployed, the models turned out to perform poorly in production or relied on a feature that was not available in production. To this day, those are typical stories of immature data science organizations!

The different Deployment patterns

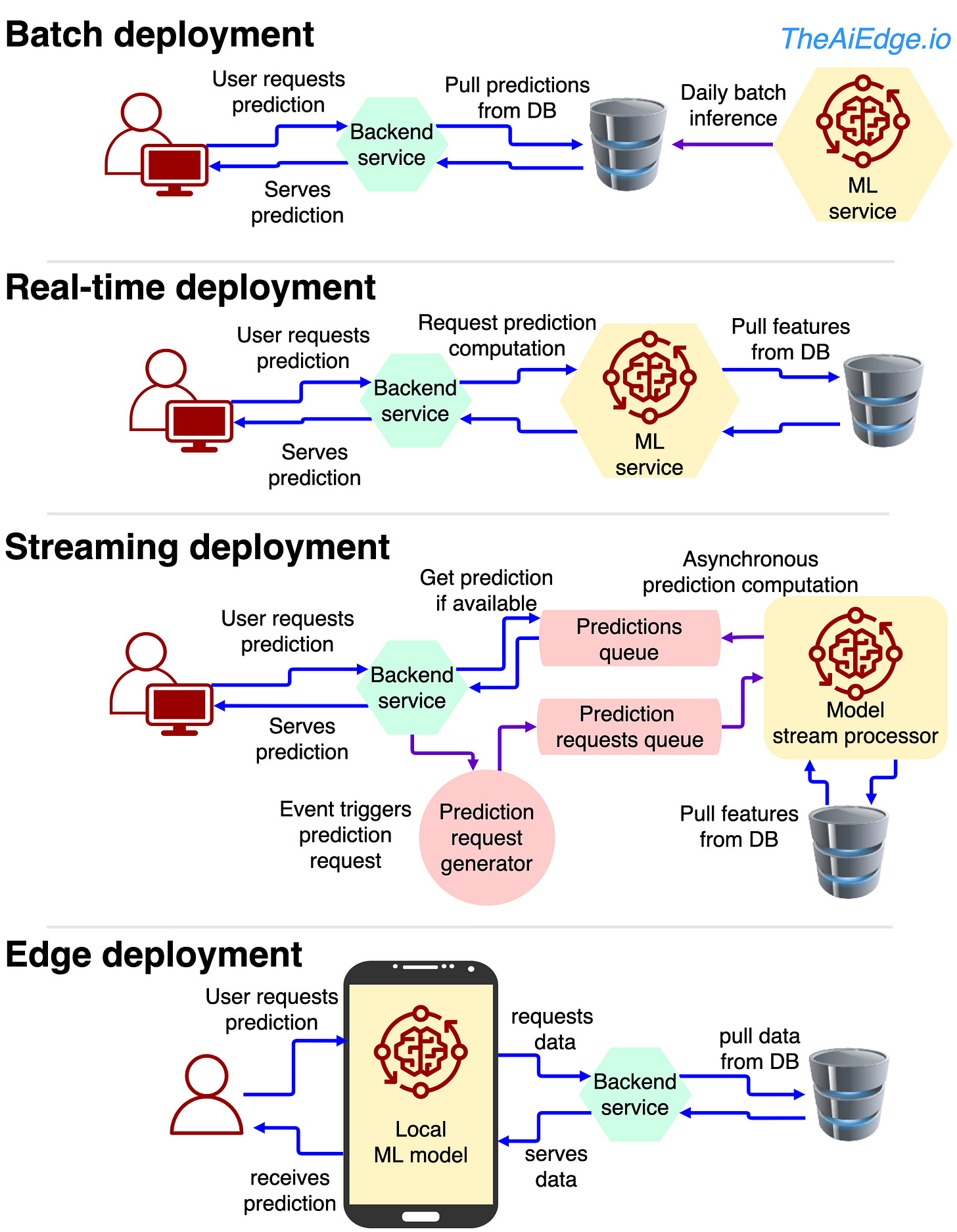

You should learn to deploy your Machine Learning models! The way to deploy is dictated by the business requirements. You should not start any ML development before you know how you are going to deploy the resulting model. There are 4 main ways to deploy ML models:

Batch deployment - The predictions are computed at defined frequencies (for example, daily), and the resulting predictions are stored in a database from which they can easily be retrieved when needed. However, each prediction only reflects the data available at the last batch run, so predictions can quickly become outdated. Look at this article on how Airbnb progressively moved from batch to real-time deployments: “Machine Learning-Powered Search Ranking of Airbnb Experiences”.
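To make the batch pattern concrete, here is a minimal sketch, assuming an in-memory SQLite table as the prediction store and a hypothetical linear `predict` function standing in for a trained model. The point is the split: all scoring happens in `run_batch_job` (which a scheduler would trigger daily), while serving is a plain lookup that can only return what the last run computed.

```python
import sqlite3


# Hypothetical stand-in for a trained model: scores a user from two features.
def predict(features: dict) -> float:
    return 0.7 * features["recent_purchases"] + 0.3 * features["days_active"] / 30


def run_batch_job(conn: sqlite3.Connection, users: dict, run_date: str) -> None:
    """Score every user once and persist the results (e.g. triggered daily by a scheduler)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS predictions "
        "(user_id TEXT PRIMARY KEY, score REAL, run_date TEXT)"
    )
    rows = [(user_id, predict(feats), run_date) for user_id, feats in users.items()]
    # INSERT OR REPLACE overwrites the previous run's prediction for each user.
    conn.executemany("INSERT OR REPLACE INTO predictions VALUES (?, ?, ?)", rows)
    conn.commit()


def get_prediction(conn: sqlite3.Connection, user_id: str):
    """Serving is a cheap lookup: no model runs at request time.
    Returns (score, run_date), or None if the user was not scored in the last run."""
    return conn.execute(
        "SELECT score, run_date FROM predictions WHERE user_id = ?", (user_id,)
    ).fetchone()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    users = {
        "u1": {"recent_purchases": 2, "days_active": 15},
        "u2": {"recent_purchases": 0, "days_active": 30},
    }
    run_batch_job(conn, users, "2024-01-01")
    print(get_prediction(conn, "u1"))  # score computed at batch time
    print(get_prediction(conn, "u3"))  # None: new user, no prediction until the next run
```

The `None` for a user who appeared after the last run is exactly the staleness problem described above.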

Real-time deployment - The "real-time" label describes a synchronous process: a user requests a prediction, the request is pushed to a backend service through HTTP API calls, and the backend in turn pushes it to an ML service. It is great if you need personalized predictions that use recent contextual information such as the time of day or the user's recent searches. The problem is that, until the user receives their prediction, the backend and the ML services are stuck waiting for it to come back. To handle additional parallel requests from other users, you need to rely on multi-threaded processes and horizontal scaling by adding servers. Here are simple tutorials on real-time deployments in Flask and Django: “How to Easily Deploy Machine Learning Models Using Flask”, “Machine Learning with Django”.
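The synchronous request path can be sketched with Python's standard-library `http.server` instead of Flask or Django (a deliberate swap to keep the example dependency-free). The model and its features (`hour_of_day`, `recent_searches`) are hypothetical. Note how the handler only writes its response once inference is done, so the caller waits the whole time.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


# Hypothetical model using recent context sent along with the request.
def predict(features: dict) -> float:
    evening_boost = 0.2 if 18 <= features.get("hour_of_day", 0) <= 23 else 0.0
    return min(1.0, 0.1 * len(features.get("recent_searches", [])) + evening_boost)


def handle_prediction_request(body: bytes) -> dict:
    """Parse the JSON payload forwarded by the backend and compute the prediction."""
    return {"score": predict(json.loads(body))}


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        # The backend's HTTP call stays open until this response is written:
        # the caller is blocked for the whole inference (synchronous).
        payload = json.dumps(handle_prediction_request(self.rfile.read(length))).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    # ThreadingHTTPServer handles each request in its own thread; beyond that,
    # you scale horizontally by running more copies behind a load balancer.
    ThreadingHTTPServer(("0.0.0.0", port), PredictionHandler).serve_forever()
```

Calling `serve()` and POSTing a JSON body such as `{"hour_of_day": 20, "recent_searches": ["shoes"]}` returns `{"score": ...}`. The thread-per-request server covers the multi-threading part; the horizontal part happens outside the code.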

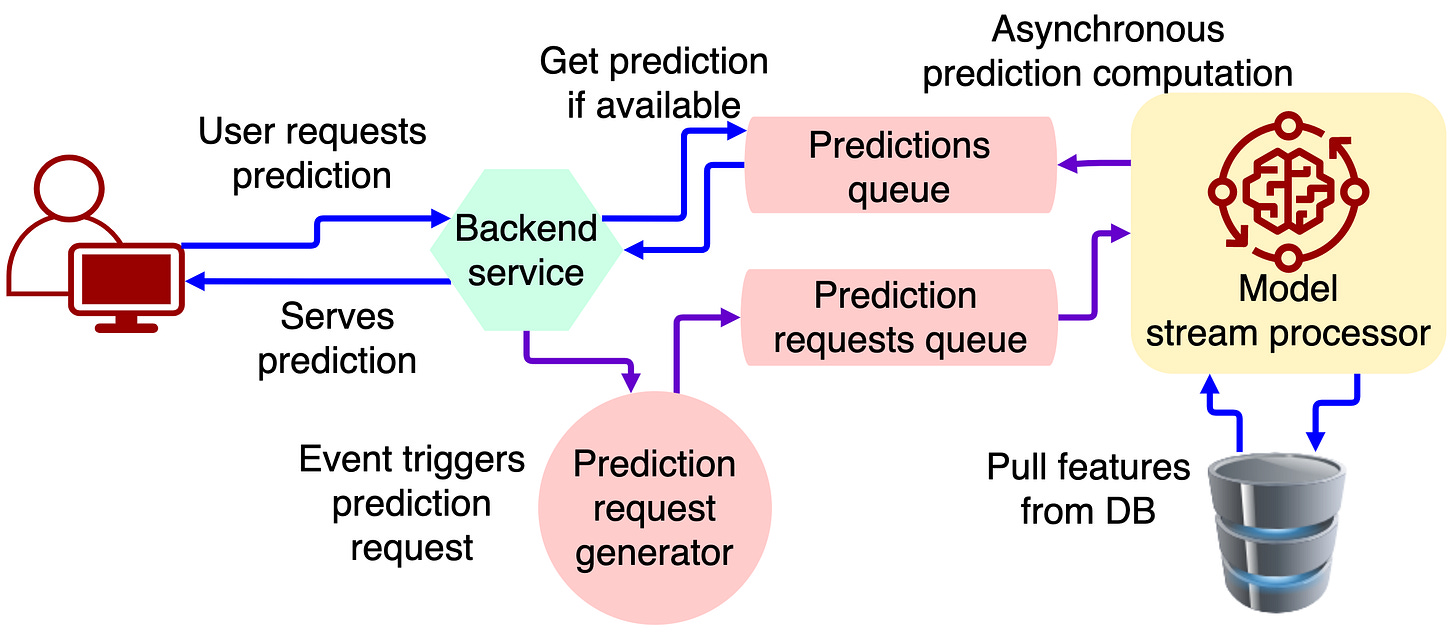

Streaming deployment - This allows for a more asynchronous process: an event can trigger the start of the inference process. For example, as soon as you land on the Facebook page, the ads ranking process can be triggered, and by the time you scroll, the ad is ready to be presented. The request is queued in a message broker such as Kafka, and the ML model handles it when it is ready to do so. This frees up the backend service and saves a lot of computation power through efficient queueing. The resulting predictions can be queued as well and consumed by backend services when needed. Here is a tutorial using Kafka: “A Streaming ML Model Deployment”.
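The asynchronous flow can be sketched with two in-process queues playing the role of Kafka topics: one for inference requests, one for predictions. This is an assumption for illustration only; a real setup would use a Kafka client and separate processes. The ad-ranking model is hypothetical.

```python
import queue
import threading

# In-process queues standing in for two Kafka topics (an assumption for
# illustration; in production these would be broker topics and separate processes).
inference_requests = queue.Queue()
predictions = queue.Queue()
STOP = object()  # sentinel used to shut the worker down


def rank_ads(ads: list) -> list:
    # Hypothetical model: order candidate ads by their estimated click-through rate.
    return sorted(ads, key=lambda ad: ad["ctr_estimate"], reverse=True)


def ml_worker() -> None:
    """ML service: consumes events whenever it is ready and publishes the results."""
    while True:
        event = inference_requests.get()
        if event is STOP:
            break
        predictions.put({"user_id": event["user_id"], "ranked_ads": rank_ads(event["ads"])})


def on_page_load(user_id: str, ads: list) -> None:
    """Backend: publishes an event and returns immediately, without waiting for the model."""
    inference_requests.put({"user_id": user_id, "ads": ads})


if __name__ == "__main__":
    worker = threading.Thread(target=ml_worker)
    worker.start()
    on_page_load("u1", [{"id": "a", "ctr_estimate": 0.02}, {"id": "b", "ctr_estimate": 0.05}])
    # ... meanwhile the user scrolls; later the backend picks up the ready ranking.
    result = predictions.get(timeout=5)
    print([ad["id"] for ad in result["ranked_ads"]])  # ['b', 'a']
    inference_requests.put(STOP)
    worker.join()
```

Unlike the real-time sketch, `on_page_load` never blocks on the model: producer and consumer only share the queues.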

Edge deployment - The model is deployed directly on the client, such as a web browser, a mobile phone, or an IoT product. Because there is no network round trip, this gives the fastest inferences, and the model can even predict offline (disconnected from the internet), but it usually needs to be quite small to fit on the limited hardware. For example, here is a tutorial on deploying YOLO on iOS: “How To Build a YOLOv5 Object Detection App on iOS”.

How to test your model in Production

I would really advise you to push your Machine Learning models to production as fast as possible! A model not in production is a model that does not bring revenue, and production is the only true way to validate your model: Trial by Fire! When it comes to testing your model in production, it is not one or the other: the more, the merrier!

Here are a couple of ways to test your models and the underlying pipelines:

Shadow deployment - The idea is to deploy the model to check whether its production inferences make sense. When users request predictions, the requests are sent to both the production model and the new challenger model, but only the production model responds. This lets us stage model requests and validate that the challenger's prediction distribution is similar to the one observed at the training stage, which validates part of the serving pipeline before releasing the model to users. We cannot really assess the model performance, though, because the ground truth we observe is influenced by the production model's predictions, not the challenger's.
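A minimal sketch of shadow routing, assuming two hypothetical models and a Python list standing in for the logging table. Both models score every request, only the champion's output is returned, and a crashing challenger is logged instead of breaking the response. In a real system the challenger call would usually run asynchronously so it adds no latency; it is kept synchronous here for brevity.

```python
import logging

log = logging.getLogger("shadow")


# Hypothetical models: the champion serves users, the challenger only runs in shadow.
def champion_model(features: dict) -> float:
    return 0.5 * features["x"]


def challenger_model(features: dict) -> float:
    return 0.6 * features["x"]


# Stand-in for a logging table used offline to compare prediction distributions.
shadow_log = []


def serve_with_shadow(features: dict) -> float:
    champion_pred = champion_model(features)
    try:
        challenger_pred = challenger_model(features)
        shadow_log.append({"champion": champion_pred, "challenger": challenger_pred})
    except Exception:
        # A failing challenger must never affect the user-facing response.
        log.exception("challenger model failed")
    return champion_pred  # only the champion's prediction reaches the user


def mean_prediction(records: list, key: str) -> float:
    """Summary statistic to compare against the distribution seen at training time."""
    return sum(r[key] for r in records) / len(records)
```

Comparing `mean_prediction(shadow_log, "challenger")` (or a fuller histogram) with the training-time predictions is the distribution check described above; it says nothing yet about business performance.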

Canary deployment - One step further than shadow deployment: a full end-to-end test of the serving pipeline. We release the model's inferences to a small subset of users, or even only to internal company users. That is like an integration test in production! But it doesn't tell us anything about model performance.
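One common way to pick the "small subset of users" is stable hash bucketing, sketched below. The 5% share, the employee allow-list, and both model functions are hypothetical. Hashing the user ID (rather than picking randomly per request) keeps each user on the same model across requests.

```python
import hashlib

CANARY_PERCENT = 5  # hypothetical: share of external users who get the new model
INTERNAL_USERS = {"alice@example.com"}  # hypothetical allow-list of employees


def current_model(features: dict) -> str:
    return "current"


def new_model(features: dict) -> str:
    return "new"


def in_canary(user_id: str, percent: int = CANARY_PERCENT) -> bool:
    if user_id in INTERNAL_USERS:
        return True
    # A stable hash (unlike Python's salted hash()) keeps each user in the same
    # bucket across requests and server restarts.
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def route(user_id: str, features: dict) -> str:
    model = new_model if in_canary(user_id) else current_model
    return model(features)
```

Raising `CANARY_PERCENT` step by step is the usual way to widen the release once the pipeline looks healthy.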

A/B testing - One step further: we now test the model performance! Typically, we use business metrics that are hard to measure during offline experiments, like revenue or user experience. You route a small percentage of the user base to the challenger model and assess its performance against the champion model. Test statistics and p-values provide a rigorous statistical framework for this validation, but I have seen cruder approaches.
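For a conversion-style metric, one standard test statistic is the two-proportion z-test, sketched below. The choice of test and the traffic numbers are assumptions for illustration; revenue-type metrics would call for a different test.

```python
import math


def two_proportion_z_test(conversions_a: int, n_a: int, conversions_b: int, n_b: int):
    """Two-sided z-test comparing conversion rates of champion (A) and challenger (B)."""
    p_a, p_b = conversions_a / n_a, conversions_b / n_b
    # Pooled rate under the null hypothesis that both models convert equally well.
    p_pool = (conversions_a + conversions_b) / (n_a + n_b)
    std_err = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: 5.0% vs 5.6% conversion on 10,000 users each.
    z, p = two_proportion_z_test(500, 10_000, 560, 10_000)
    print(f"z = {z:.2f}, p = {p:.3f}")  # z = 1.89, p = 0.058
```

Even a 12% relative lift is not significant at the 5% level here, which is why small traffic splits can need long-running experiments.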

Multi-armed bandit - This is a typical approach in Reinforcement Learning: you explore the different options and exploit the one that yields the best performance. If a stock in your portfolio brings more returns, wouldn't you buy more shares of it? The assumption is that relative performance can change over time, so you may need to put more or less weight on one model or another. You don't have to use only 2 models; you could have hundreds of them at once if you wanted to. It can be more data efficient than traditional A/B testing: “ML Platform Meetup: Infra for Contextual Bandits and Reinforcement Learning”.
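Here is one possible bandit router, using Thompson sampling with a Beta prior on each model's conversion rate. This is one common bandit strategy chosen for illustration, not necessarily what the linked talk uses. The simulated "true" conversion rates are hypothetical and unknown to the router.

```python
import random


class ThompsonSamplingRouter:
    """Routes each request to one of several models; reward is 1 if the user converted."""

    def __init__(self, model_names, seed=None):
        self.models = list(model_names)
        self.successes = {m: 0 for m in self.models}
        self.failures = {m: 0 for m in self.models}
        self.rng = random.Random(seed)

    def choose(self) -> str:
        # Draw a plausible conversion rate for each model from its Beta posterior
        # (uniform Beta(1, 1) prior) and route to the highest draw: models with few
        # observations have wide posteriors, so they still get explored.
        draws = {
            m: self.rng.betavariate(self.successes[m] + 1, self.failures[m] + 1)
            for m in self.models
        }
        return max(draws, key=draws.get)

    def update(self, model: str, converted: bool) -> None:
        if converted:
            self.successes[model] += 1
        else:
            self.failures[model] += 1


def simulate(true_rates: dict, rounds: int, seed: int = 0) -> dict:
    """Hypothetical traffic simulation; true_rates are unknown to the router."""
    router = ThompsonSamplingRouter(true_rates, seed=seed)
    users = random.Random(seed + 1)
    traffic = {m: 0 for m in true_rates}
    for _ in range(rounds):
        model = router.choose()
        traffic[model] += 1
        router.update(model, users.random() < true_rates[model])
    return traffic


if __name__ == "__main__":
    print(simulate({"model_a": 0.02, "model_b": 0.10, "model_c": 0.04}, rounds=10_000))
```

Traffic drifts toward the best model while weaker ones keep a small exploration share. To handle the "performance changes over time" case, old counts would need to be decayed; that is not shown here.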

Interleaving experiments - Show both models' predictions together and see which ones the user chooses. For example, merge the rankings produced by both models for a search engine or a product recommender into the same list and let the user decide. The problem is that you only assess user preference, not another, potentially more critical, KPI: “Innovating Faster on Personalization Algorithms at Netflix Using Interleaving”.
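A sketch of team-draft interleaving, one well-known way to merge two rankings fairly. The Netflix article discusses interleaving in depth; whether it uses exactly this variant is not assumed here. The item IDs are hypothetical. Each model ("team") takes turns contributing its best not-yet-shown item, and clicks are credited to the team that contributed the clicked item.

```python
import random


def team_draft_interleave(ranking_a: list, ranking_b: list, k: int, rng: random.Random):
    """Merge two rankings into one list of up to k items, remembering which model
    ("team") contributed each item. Returns (interleaved_list, team_of_item)."""
    rankings = {"A": ranking_a, "B": ranking_b}
    picks = {"A": 0, "B": 0}
    interleaved, team_of = [], {}
    while len(interleaved) < k:
        # The team with fewer picks goes next; ties are broken by a coin flip.
        if picks["A"] < picks["B"] or (picks["A"] == picks["B"] and rng.random() < 0.5):
            order = ["A", "B"]
        else:
            order = ["B", "A"]
        for team in order:  # fall back to the other team if this one is exhausted
            item = next((x for x in rankings[team] if x not in team_of), None)
            if item is not None:
                break
        if item is None:
            break  # both rankings exhausted
        interleaved.append(item)
        team_of[item] = team
        picks[team] += 1
    return interleaved, team_of


def credit_clicks(clicked: list, team_of: dict) -> dict:
    """Each click counts for the model that contributed the clicked item."""
    wins = {"A": 0, "B": 0}
    for item in clicked:
        if item in team_of:
            wins[team_of[item]] += 1
    return wins
```

Aggregating which team wins across many sessions gives the preference signal; as noted above, it does not measure other KPIs such as revenue.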

"Designing Machine Learning Systems" is a good read if you want to learn more about those.

The different inference pipeline architectures

Establishing a deployment strategy should be done before even starting any model development. Understanding the data requirements, data infrastructure, and the different teams that need to be involved should be planned in advance so that we optimize for the success of the project. One aspect to think about is the deployment pattern: where and how is the model going to be deployed?

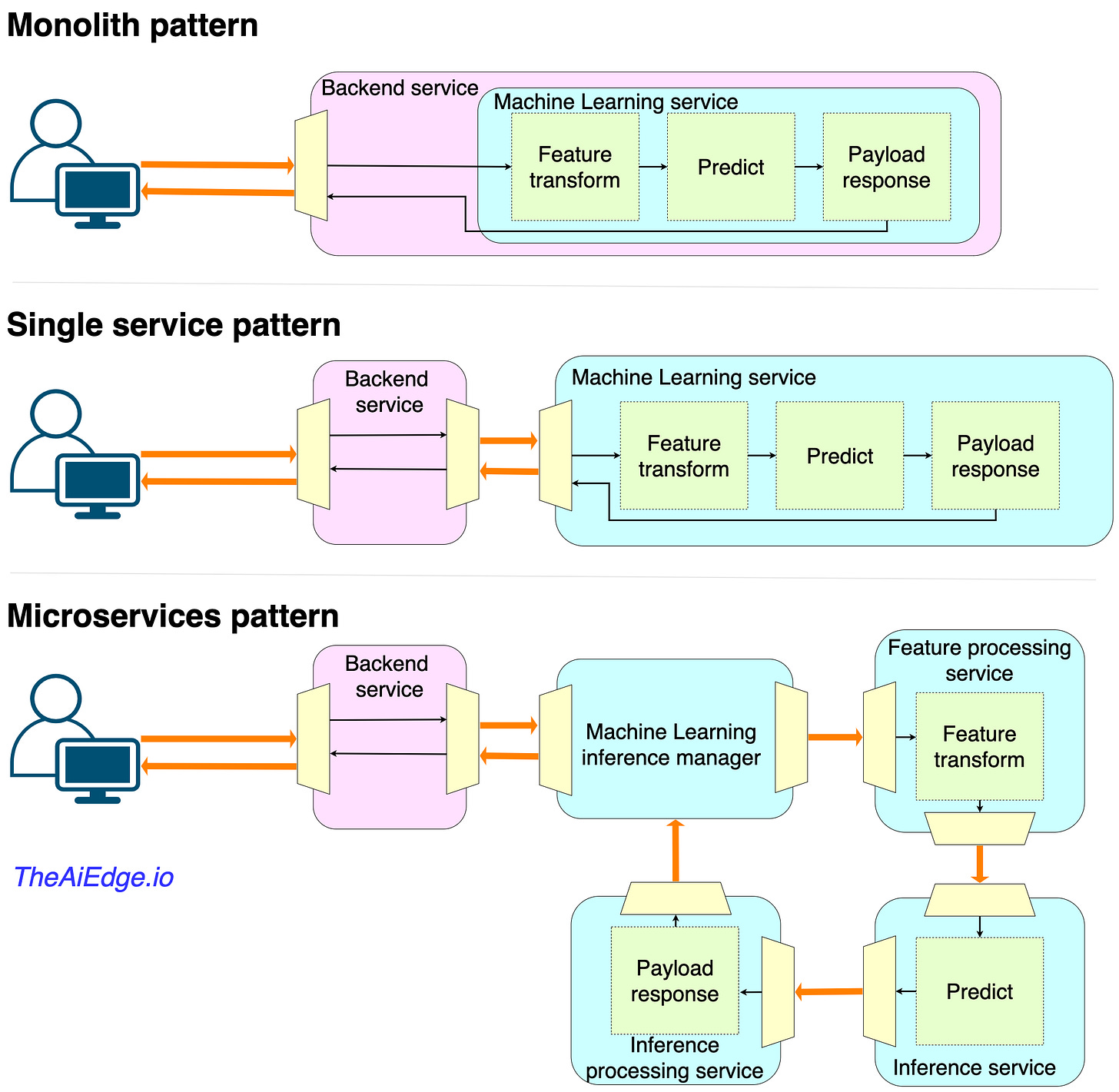

If you consider real-time applications, there might be 3 ways to go about it:

Deploying as a monolith - The machine learning service code is integrated into the rest of the backend code base. This requires tight collaboration between the data scientists and the owners of the backend code base or related business service. The CI/CD process is further slowed down by the ML service's unit tests, and the model size and computation requirements put additional load on the backend servers. This type of deployment should only be considered if the inference process is very light to run.

Deploying as a single service - The ML service is deployed as its own standalone service, potentially behind an elastic load balancer if scale is needed. This allows engineers to build the pipeline independently of the team owning the business service. Building monitoring and logging systems is much easier, and ownership of the code base is less fuzzy. The model can be complex without putting load pressure on the rest of the infrastructure. This is typically the easiest way to deploy a model while ensuring scalability, maintainability, and reliability.

Deploying as a microservice - Each component of the ML service gets its own service. This requires a high level of maturity in ML and MLOps. It can be useful if, for example, the data processing component of the inference process needs to be reused by multiple models (e.g. a specific set of feature transformations). In ads ranking, for example, we could have multiple models, each on its own service, ranking ads in different sub-domains (ads on FB, ads on Instagram, …) that all need to follow the same auction process, which could be handled by a specialized inference processing service in charge of the auction. Make sure to use an orchestration system such as Kubernetes to handle the resulting complexity of microservice hell (“Dependency Hell in Microservices and How to Avoid It”).
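The ads example can be sketched as a handful of components, each function standing in for a separately deployed service (in reality they would talk over HTTP or gRPC). The features, the two ranking models, and the auction rule (ranking by expected value, bid × predicted CTR, rather than a full second-price auction) are all hypothetical simplifications. What matters is the shape: one shared feature service, one model per surface, one shared auction.

```python
from dataclasses import dataclass

# Each function below stands in for a separately deployed service; in a real
# system these calls would be network requests between containers.


@dataclass
class Ad:
    ad_id: str
    bid: float    # hypothetical advertiser bid per click
    surface: str  # "facebook" or "instagram"


def feature_service(user: dict, ad: Ad) -> dict:
    """Shared feature transformations, reused by every ranking model."""
    return {"age_bucket": user["age"] // 10, "is_video": ad.ad_id.startswith("vid")}


def facebook_ranker(features: dict) -> float:  # hypothetical pCTR model
    return 0.03 + 0.02 * features["is_video"]


def instagram_ranker(features: dict) -> float:  # hypothetical pCTR model
    return 0.05 if features["age_bucket"] < 3 else 0.02


RANKERS = {"facebook": facebook_ranker, "instagram": instagram_ranker}


def auction_service(scored: list) -> list:
    """One auction for all surfaces; assumed rule: rank by expected value = bid * pCTR."""
    return sorted(scored, key=lambda pair: pair[0].bid * pair[1], reverse=True)


def serve_ads(user: dict, candidates: list) -> list:
    scored = []
    for ad in candidates:
        features = feature_service(user, ad)      # shared component
        pctr = RANKERS[ad.surface](features)      # surface-specific model service
        scored.append((ad, pctr))
    return [ad.ad_id for ad, _ in auction_service(scored)]  # shared auction


if __name__ == "__main__":
    candidates = [Ad("vid1", 1.0, "facebook"), Ad("img1", 2.0, "facebook"),
                  Ad("img2", 0.8, "instagram")]
    print(serve_ads({"age": 25}, candidates))  # ['img1', 'vid1', 'img2']
```

Swapping one ranker or the auction logic only touches its own service, which is the main payoff of this architecture, at the cost of having to orchestrate all of them.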