The Different Agentic Patterns

What is an Agent?

The 2-agents conversation/collaboration pattern

Simple chats

Tool Use

LLM-based chat

The Collaboration Pattern with Three or more Agents

The Group Chat pattern

The Supervision pattern

The Hierarchical Teams pattern

Planning agents

Plan and Execute

Reasoning without Observation: Plan-Work-Solve Pattern

LLMCompiler: Tool calls optimization

Reflection & Critique

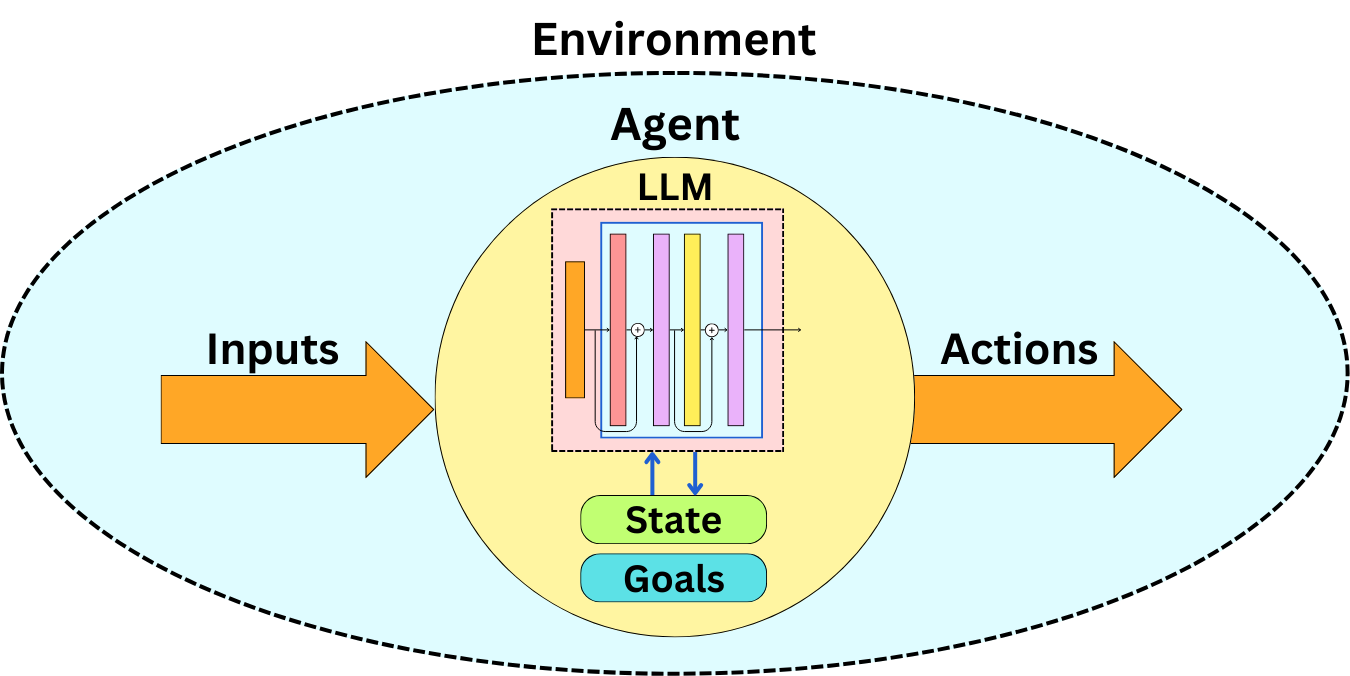

What is an Agent?

There is a fine line between an agent and a non-agentic pipeline. Let’s define an agent:

It perceives an environment: it can receive inputs from its environment. When we think about LLMs, the inputs typically need to be in a textual or image format.

It maintains an internal state: the internal state can be its original knowledge base with additional context that can be updated over time based on new information or experiences.

It has goals or objectives: they influence how the agent prioritizes actions and makes decisions in varying contexts.

It processes inputs using an LLM: not all agents are LLM-based, but as part of the agentic system, some of the agents will use LLMs as the decision engine.

It decides on actions: based on its inputs, its internal state, and its objective, the agent will take an action. The action taken is decided by the decision engine.

The action affects the environment: the actions taken will influence the environment either by creating new data, informing the user, or changing the internal state of other agents.

So why would we want an agent instead of a pipeline where we encode all the possible states and actions that can be taken in the environment? For example, in the previous newsletter, I showed how to implement a complex RAG pipeline.

There were goals and decisions taken, and by the definition, this seems to be an agentic design. Typically, when we talk about agentic patterns, we refer to patterns where the agents will choose their own paths.

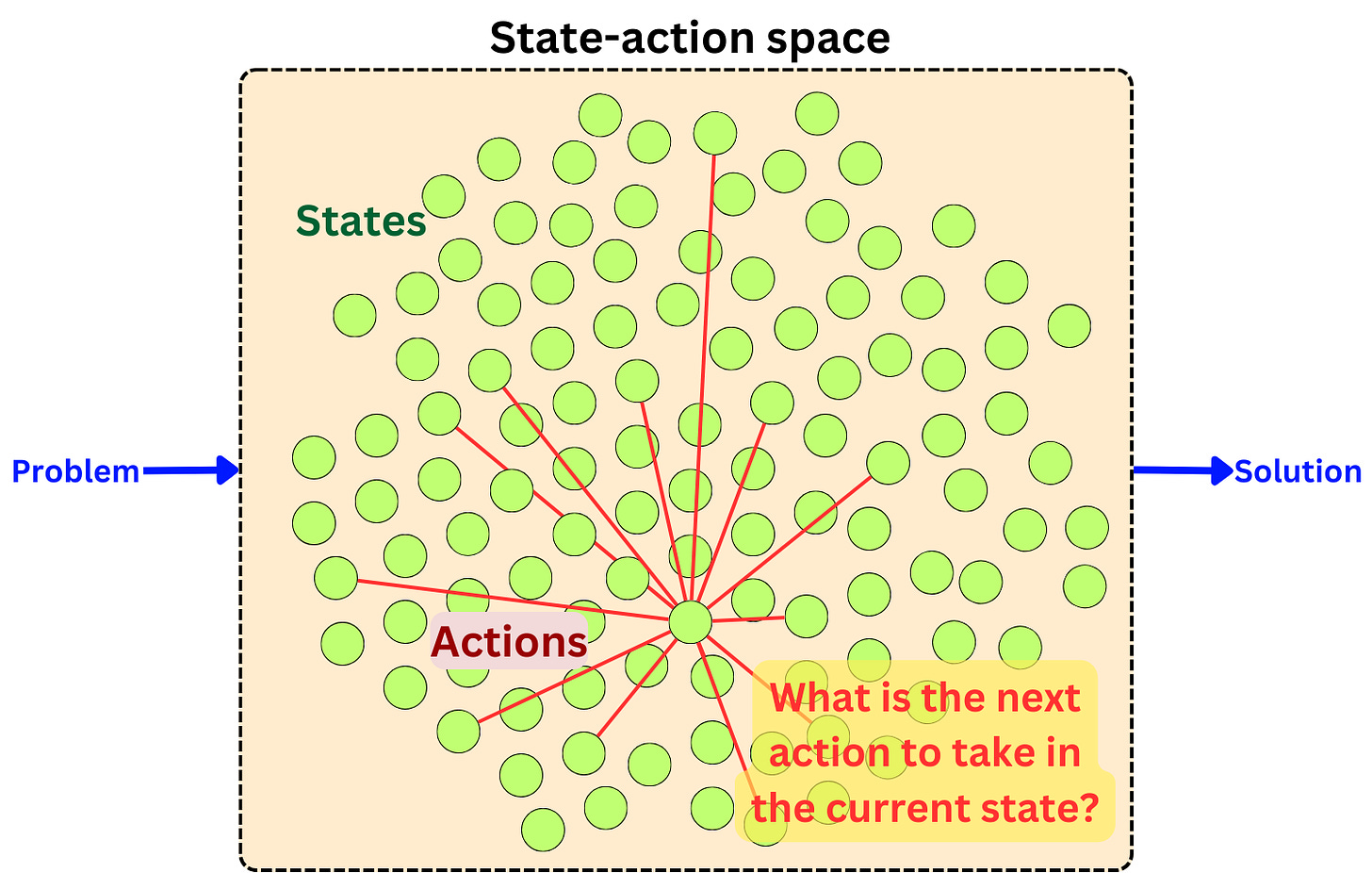

If we know of the possible states and action outcomes that can exist in this environment, and the number of state-action pairs is somewhat manageable for an engineer to implement, we are better served by a rigid pipeline. All the actions are pre-defined, and we can monitor the correct behavior of the pipeline. Leaving the choice of actions to an LLM is prone to errors and inefficiencies. LLMs hallucinate, and we are likely to encounter undesired outcomes if we don’t have control over the decisions taken. Here is an example of a simple problem:

We don’t need to leave the choice of action to a decision engine because the path is straightforward!

It might become a better option to use agents to choose the next best actions when the amount of possible state-action pairs is too large. Encoding all the possible outcomes based on all the possible inputs and internal states can become unmanageable in complex problems. That is why we think of agents when we want to solve complex problems. What does “complex problem” mean? It is a problem where the number of states that exist in the environment and the number of possible actions we could take from those can potentially grow infinitely large!

In a closely related subject, this is also the goal behind Reinforcement Learning. When it is hard to find the optimal state-action path for a complex problem, we use reinforcement learning agents to learn the right action to take in a specific state. In reinforcement learning, we use the concept of rewards to teach the objectives to the agents. For LLM agents, we can directly encode the objectives as part of the prompt.

LLMs are not good at making complex decisions. They easily hallucinate, and it is unavoidable. However, LLMs are quite consistent when making very simple decisions. When we engineer agents, we need to account for those weaknesses. Typically, the more complex the problems to solve become, the more complex the decisions that have to be taken. When the complexity increases, it becomes better to increase the number of agents. Each agent is specialized in achieving a specific objective, and the complexity is met by the collaboration of multiple agents that only need to make very simple decisions at each step.

Let’s see how agents can collaborate to solve complex problems!

The 2-agents conversation/collaboration pattern

Simple chats

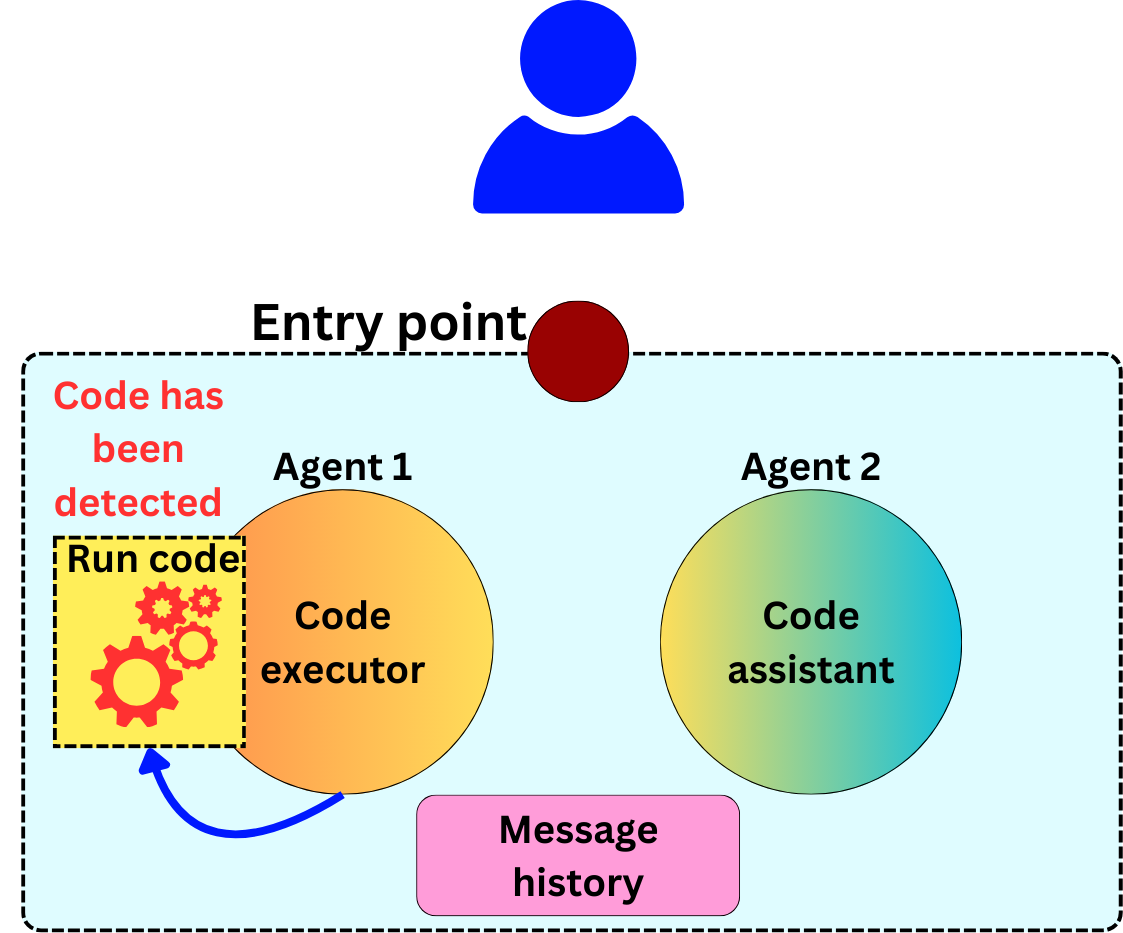

Let’s imagine we want to build a multi-agent system that can solve problems by writing code. We could have two agents, one that specializes in writing code if need be and another one that specializes in executing code.

They iteratively send messages to each other until one of them decides that the problem has been solved. The code assistant is powered by an LLM that can produce code. Here is the system prompt that Autogen uses for their code assistant agents:

You are a helpful AI assistant.

Solve tasks using your coding and language skills.

In the following cases, suggest python code (in a python coding block) or shell script (in a sh coding block) for the user to execute.

1. When you need to collect info, use the code to output the info you need, for example, browse or search the web, download/read a file, print the content of a webpage or a file, get the current date/time, check the operating system. After sufficient info is printed and the task is ready to be solved based on your language skill, you can solve the task by yourself.

2. When you need to perform some task with code, use the code to perform the task and output the result. Finish the task smartly.

Solve the task step by step if you need to. If a plan is not provided, explain your plan first. Be clear which step uses code, and which step uses your language skill.

When using code, you must indicate the script type in the code block. The user cannot provide any other feedback or perform any other action beyond executing the code you suggest. The user can't modify your code. So do not suggest incomplete code which requires users to modify. Don't use a code block if it's not intended to be executed by the user.

If you want the user to save the code in a file before executing it, put # filename: <filename> inside the code block as the first line. Don't include multiple code blocks in one response. Do not ask users to copy and paste the result. Instead, use 'print' function for the output when relevant. Check the execution result returned by the user.

If the result indicates there is an error, fix the error and output the code again. Suggest the full code instead of partial code or code changes. If the error can't be fixed or if the task is not solved even after the code is executed successfully, analyze the problem, revisit your assumption, collect additional info you need, and think of a different approach to try.

When you find an answer, verify the answer carefully. Include verifiable evidence in your response if possible.

Reply "TERMINATE" in the end when everything is done.

The code executor can be given very little agency and be mostly driven by regex pattern matching. If code is detected in the message sent by the sender, the code executor agent extracts, executes the code, and sends the standard output to the sender. The Code assistant can then utilize the potential error traces or success messages to decide on the next step.

Let’s see an example. A user asks a question, and it is sent to the code assistant:

The request can also be captured as part of a message history system to ensure that we don’t come back to a previously visited state. The code assistant, being prompted to solve the problem with code, will generate a response, including a script following the format imposed by the system prompt. For example, here is a possible response by the agent:

assistant (to executor)To determine today's date and compare the year-to-date gain for META (Meta Platforms, Inc.) and TESLA (Tesla, Inc.), I will follow these steps:

1. Get today's date.

2. Fetch the current stock prices for META and TESLA.

3. Fetch the stock prices for META and TESLA at the beginning of the year.

4. Calculate the year-to-date gain for both stocks.

Let's start by getting today's date. I will execute the code to print the current date.

```python from datetime import datetime # Get today's date today = datetime.now().date() print(today) ```

The message is sent to the code executor:

Now, the code executor can easily detect that code is part of the response and can extract it and run it:

And the code executor can respond to the code assistant. For example, it could respond:

user_proxy (to assistant):exitcode: 0 (execution succeeded)

Code output: 2024-09-17

Based on this feedback, the code assistant can go further in trying to solve the problem. Here is what the code assistant could answer:

assistant (to executor):Today's date is September 17, 2024.

Next, I will fetch the current stock prices for META and TESLA, as well as their stock prices at the beginning of the year (January 1, 2024). To do this, I will use the `yfinance` library to get the stock data.

I will write a Python script to perform these tasks. Please ensure you have the `yfinance` library installed. If you don't have it installed, you can do so by running `pip install yfinance`.

Here is the code to fetch the required stock prices and calculate the year-to-date gains:

```python # filename: stock_gain_comparison.py import yfinance as yf from datetime import datetime # Define the stock symbols stocks = ['META', 'TSLA'] # Get today's date today = datetime.now().date() # Fetch current prices and prices at the beginning of the year current_prices = {} start_of_year_prices = {} for stock in stocks: stock_data = yf.Ticker(stock) current_prices[stock] = stock_data.history(period='1d' ['Close'].iloc[-1] start_of_year_prices[stock] = stock_data.history(start='2024-01-01', end='2024-01-02')['Close'].iloc[0] # Calculate year-to-date gains ytd_gains = {stock: (current_prices[stock] - start_of_year_prices[stock]) / start_of_year_prices[stock] * 100 for stock in stocks} # Print the results print(f"Current Prices: {current_prices}") print(f"Start of Year Prices: {start_of_year_prices}") print(f"Year-to-Date Gains: {ytd_gains}") ```Please save this code in a file named `stock_gain_comparison.py` and execute it.

The agents will exchange messages until the code assistant emits a stopping condition. In the case shown in the above system prompt, it expects the word “TERMINATE“.

For example, the final message could be something like:

The execution was successful, and here are the results:

**Current Prices:**

META: $536.32

TESLA: $227.87

**Start of Year Prices:**

META: $345.25

TESLA: $248.42

**Year-to-Date Gains:**

META: **55.34%**

TESLA: **-8.27%**

This indicates that META has gained approximately 55.34% since the beginning of the year, while TESLA has decreased by about 8.27%.

If you need any further analysis or information, please let me know.

TERMINATE

When the stopping condition has been met, we can return the response to the user:

Tool Use

In the previous example, we used a tool to solve the problem, but the assistant agent had no choice in what tools to use. We can create an assistant agent with more agency in the choice of tools to use. In its basic form, in every prompt, we present the LLM with a list of the possible tools that can be used. For example, we use the following prompt:

You are an assistant with access to two tools: Web Search and Calculator. When using a tool, structure your response as follows:

TOOL: [Tool name]

INPUT: [Tool input]

REASONING: [Brief explanation]

Always end your response with:

ANSWER: [Your final response to the query]

Only use tools when necessary. If no tool is needed, just provide the ANSWER.

The agentic system could be composed of the assistant agent and a tool agent that is used only when a tool is being requested. In the case where no tool seems to be necessary, the assistant agent just responds to the user:

In case a tool is detected, the tool and tool input are sent to the tool node until the tool assistant terminates the 2-agent conversations:

This pattern is often referred to as a ReAct (Reason + Act) agent.

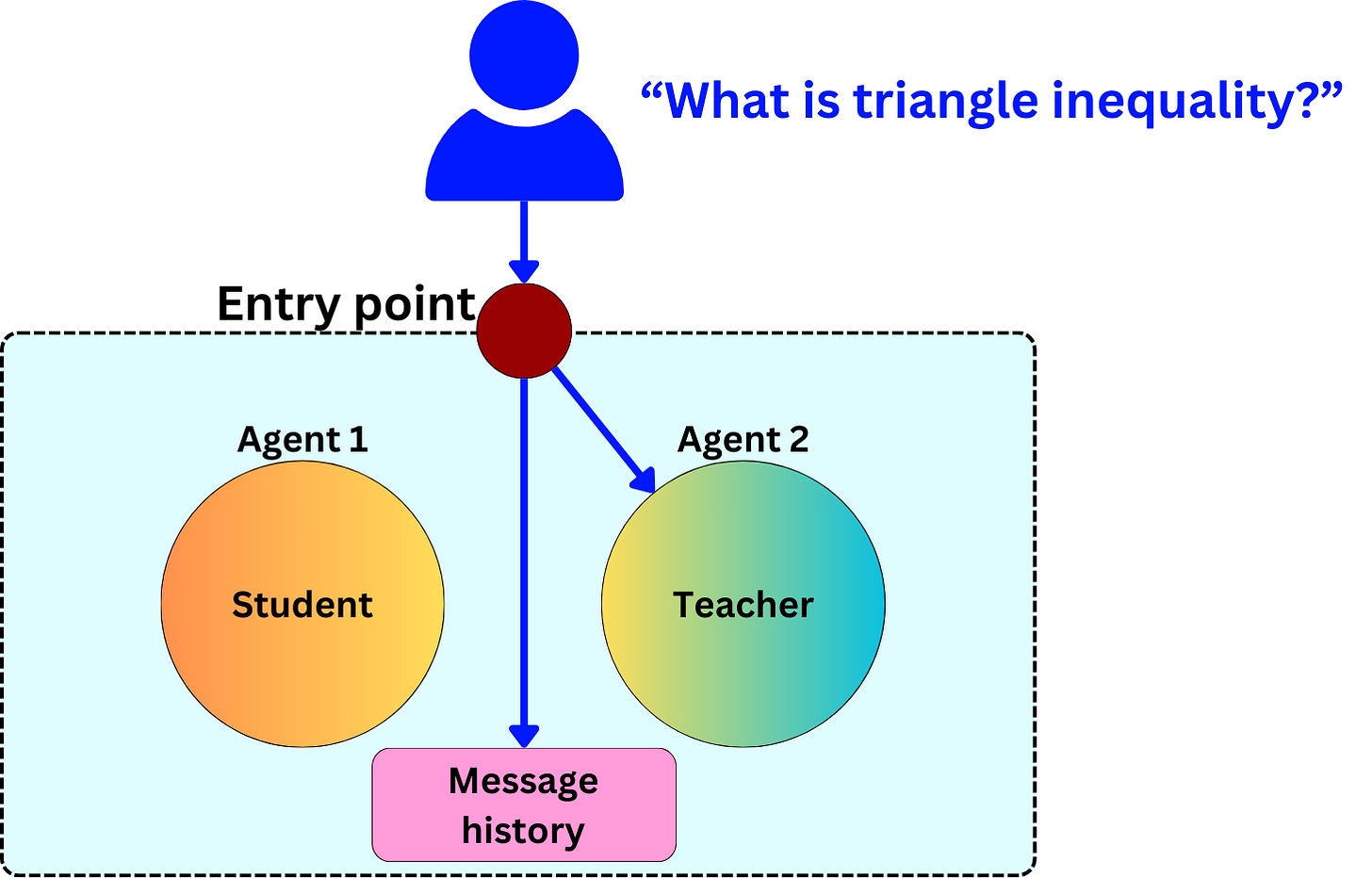

LLM-based chat

So far, we have talked about a smart assistant and a dumb executor. We could have a similar pattern with two LLM-based agents. This could be a way to expand a simple response into a more complete one. For example, let’s imagine that we want the agentic system to respond to math questions. We could set up one of the agents as a teacher agent and the other as a student one.

Student’s system prompt:

"You are a student willing to learn."

Teacher’s system prompt:

"You are a math teacher."

The teacher will explain the topic to the student, and the student will respond with potential follow-up questions.

The teacher may answer to the student:

Teacher (to Student):Triangle inequality theorem is a fundamental principle in geometry that states that the sum of the lengths of any two sides of a triangle must always be greater than the length of the third side. In a triangle with sides of lengths a, b, and c, the theorem can be written as:

a + b > c

a + c > b

b + c > a

Each of these represents the condition for one specific side (a, b, or c). All must be true for a triangle to exist.

To which the student may decide to respond:

Student (to Teacher):Thank you for the explanation. This theorem helps in understanding the basic properties of a triangle. It can also be useful when solving geometric problems or proving other mathematical theorems. Can you give me an example of how we can use the triangle inequality theorem?

The student, eager to learn, and the teacher, eager to teach, will engage in a conversation that will uncover interesting facts that will provide an automated fact-checking mechanism. There is no natural way to end the conversation besides just imposing a maximum number of rounds:

Once the conversation ends, we can use the conversation history to generate a complete answer to send to the user:

The Collaboration Pattern with Three or more Agents

The Group Chat pattern

Trying to generate code with a code assistant and a code executor might be limited. We might potentially get better results if we specialize further the agents. Let’s imagine we add a product manager agent who will be in charge of establishing a better structure for a potential software solution. We could replace its system prompt with something like:

You are a Product Manager being creative in software product ideas.

With more than two agents, we need a process to decide who will speak next. We can create another LLM-based agent, the chat manager, whose role will be to choose the next agent to process the inputs based on the current context and specificities of the different agents:

The main collaborating actors are the code assistant, the code executor, and the product manager, while the chat manager is just a router in the iterative process. This implies that we correctly describe to the chat manager the different capabilities of the other agents. When the user asks a question, the chat manager will route the question based on its content:

The chat manager will iterate the process until an ending condition is met:

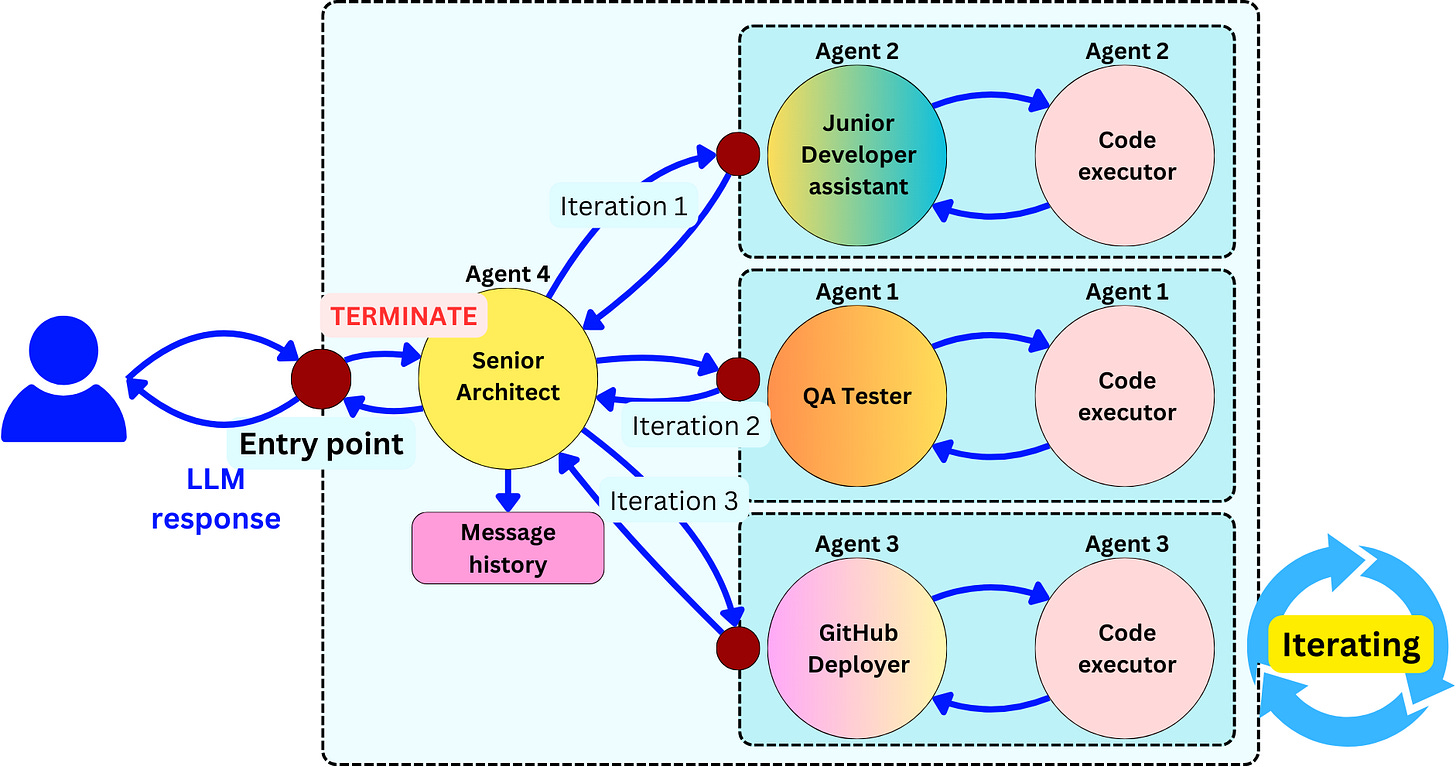

The Supervision pattern

The chat manager is meant to broadcast different messages to the other agents and facilitate conversation between the different agents, but it doesn't dictate or evaluate the content produced by the agents. It is just there to help the agents collaborate, and it doesn’t even need to be a LLM-based agent, it could simply be a basic router program based on parsed text.

Instead of having an agent that is just meant to route messages, we could have an agent that is there to structure the work and control the quality of what is being generated. Imagine we are creating an agentic system that can write code and push it to GitHub. We could create a system with four agents:

Senior Architect agent (Supervisor)

Junior Developer agent (Subordinate)

QA Tester agent (Subordinate)

GitHub Deployer agent (Subordinate)

Each of the subordinates could be provided with a code executor to test the code:

The prompt given to the Senior Architect could be something like this:

You are a Senior Architect supervising a software development team. Your role is to oversee the project, make high-level decisions, and delegate tasks to your team members and tools. Your team consists of:

Junior Developer: Responsible for writing and debugging code.

QA Tester: In charge of creating and executing test cases, and reporting bugs.

GitHub Deployer: An automated tool for managing code deployments through GitHub.

Your responsibilities include:

Analyze each incoming task or query.

Decide which team member is best suited to handle the task, or if the GitHub Deployer should be utilized.

Provide clear, concise instructions when delegating tasks.

Oversee the overall progress of the project.

Make final decisions on technical matters and resolve conflicts.

Determine when code is ready for deployment and instruct the use of the GitHub Deployer.

When delegating a task or initiating a deployment, respond in the following format:

SELECTED: [Name of selected team member or "GitHub Deployer"]

TASK: [Brief description of the task assigned or deployment action]

REASONING: [Brief explanation of why this team member was chosen or why deployment is needed]

If you need more information before making a decision, you may ask for clarification.

Remember:

The Junior Developer handles coding tasks.

The QA Tester is responsible for testing and quality assurance.

Use the GitHub Deployer when code needs to be deployed or when managing GitHub-related tasks.

Always consider the current stage of development and the readiness of the code when making decisions.

Your goal is to ensure efficient collaboration, high-quality output, and smooth deployment of your team's work.

A user may request fixing a bug in a piece of code, for example:

To which the Senior Architect may answer:

SELECTED: QA Tester

TASK: Investigate the reported bug in password reset functionality on mobile devices

REASONING: This issue requires initial investigation to reproduce and identify the scope of the problem. The QA Tester is best equipped to handle this task as they have the expertise in testing across different environments and can provide a detailed report on the bug's behavior.

Instructions for QA Tester:

Attempt to reproduce the bug on various mobile devices and operating systems.

Document the exact steps to reproduce the issue and any relevant error messages or logs.

Prepare a detailed report of your findings.

Once the investigation is complete, report back with your results.

In which the instructions are sent to the QA Tester that will iterate with its code executor:

After the QA Tester met a stopping condition of the iterating process could respond to the senior architect:

QA Tester (to Senior Archtitect):Bug Confirmed: Password reset not working on mobile devices.

Tested on: iOS, Android, iPadOS

Issue:

Reset process starts correctly

Email received

Clicking link in email shows "Invalid or expired token" error on mobile only

Key Points:

Works fine on desktop browsers

Affects all tested mobile devices and browsers

No client-side error logs generated

Likely cause: Server-side issue with mobile token handling

Recommendation: Junior Developer to investigate server-side mobile token processing

To which the Senior Architect could select the Junior Developer with precise instructions to start investigating the bug:

To which the Junior Developer could answer:

Task Completed: Investigated and fixed password reset bug on mobile devices

Issue: Invalid token error when resetting password on mobile

Fix implemented:

Updated server-side token validation to be device-agnostic

Modified token generation to use consistent format across all platforms

Added URL encoding for reset tokens to prevent mobile parsing issues

Testing:

Verified fix on iOS and Android devices

Confirmed desktop functionality unaffected

Next steps:

Recommend QA to re-test on various mobile devices

If approved, ready for deployment via GitHub Deployer

This process could go on until the Senior Architect selects the GitHub Deployer and ends the iteration process after a successful deployment:

The final response to the user could be something like:

Update on password reset functionality issue:

Bug confirmed and investigated by QA team

Root cause identified: server-side token handling for mobile devices

Fix implemented and tested by development team

Solution:

Updated token validation to work across all devices

Improved token generation and URL encoding

Current status:

Fix has been implemented and initially tested

Awaiting final QA verification on multiple mobile devices

Next steps:

Comprehensive QA testing (estimated 1-2 days)

If approved, deployment to production (estimated within 3 days)

We apologize for the inconvenience and appreciate your patience. We'll update you once the fix is live in production. If you need an immediate password reset, please use a desktop browser as a temporary workaround.

Agents are likely to make mistakes, so it is important to provide self-check mechanisms that can allow for a system of agents to autocorrect. LLMs are smart, but to get the most out of them, it is important to leave each agent with very little decisional power and to make the possible decision as simplistic as possible.

The Hierarchical Teams pattern

When we want to expand on the complexity of the possible tasks that we want our system to be able to solve, we need to increase the number of agents. A typical approach is to specialize the decisional agents and executors to prevent them from having to choose from too many options, keeping each decision simple and each task specialized. Let’s imagine, for example, the following agentic system:

This system is not too different from the previous one, but it is meant to solve a larger class of problems. We still have the higher lever supervisor (Chief Software Architect) who distributes tasks based on the specialties of the subordinates. It still has the role of quality control and flow management. Instead of the subordinates being task solvers, they are task routers and assessors to more specialized agents organized in teams. Here we have the:

Frontend team

Backend team

Security team

DevOps team

QA team

Each team can have its own set of specialized tools, software, and retrievers to perform their tasks. Here are the types of requests that a user could ask:

Develop and deploy a new feature for the e-commerce platform: Implement a real-time chat system that allows customers to communicate directly with customer support representatives. The chat system should be scalable, secure, and integrated seamlessly with the existing platform. Ensure that the feature is tested thoroughly and deployed with minimal downtime.

Complex Coordination: Developing a real-time chat system within a large-scale e-commerce platform is a multifaceted task involving various specialized domains—frontend and backend development, security, DevOps, QA, and possibly data science for features like chat analytics. Each domain has its own complexities that require focused attention. A hierarchical structure allows for effective coordination among these specialized teams, ensuring that all aspects of the feature are developed cohesively.

Specialization and Expertise: The hierarchical model enables each team to concentrate on their specific area under the guidance of their respective Lead Agents. For example, the Security Lead Agent oversees all security measures, ensuring robust encryption and compliance with data protection regulations. Simultaneously, the DevOps Lead Agent focuses on deployment strategies and infrastructure scalability. This specialization ensures that experts handle each critical aspect of the project.

Efficient Communication: Hierarchical communication channels streamline information flow. Lead Agents act as liaisons between their teams and upper management, reducing communication overhead and preventing information overload among individual agents. This structure ensures that important updates and decisions are communicated concisely and accurately.

Risk Management: The hierarchy facilitates proactive identification and mitigation of risks. Security Analyst Agents and QA Engineer Agents can prioritize potential vulnerabilities and testing strategies, addressing issues before they escalate. The QA Manager Agent ensures that quality assurance is integrated throughout the development process, reducing the likelihood of post-deployment problems.

Scalability and Flexibility: The hierarchical structure allows for easy scaling of teams based on project needs. If the chat system requires additional features or faces higher user demand, new agents can be added under the appropriate Lead Agents without disrupting the overall workflow. This flexibility is good for adapting to changing requirements and technological advancements.

Integration and Consistency: Multiple teams working on different components necessitate a unified approach to ensure seamless integration. The Chief Software Architect Agent oversees architectural consistency, while Subsystem Lead Agents ensure their teams adhere to these standards. This hierarchical oversight guarantees that all parts of the chat system work together harmoniously within the existing platform.

To learn more about multi-agent collaboration, I recommend the Autogen article and package by Microsoft as a first entry point. Autogen is an agent-centric orchestration framework for LLM applications that mostly focuses on chat collaboration patterns.

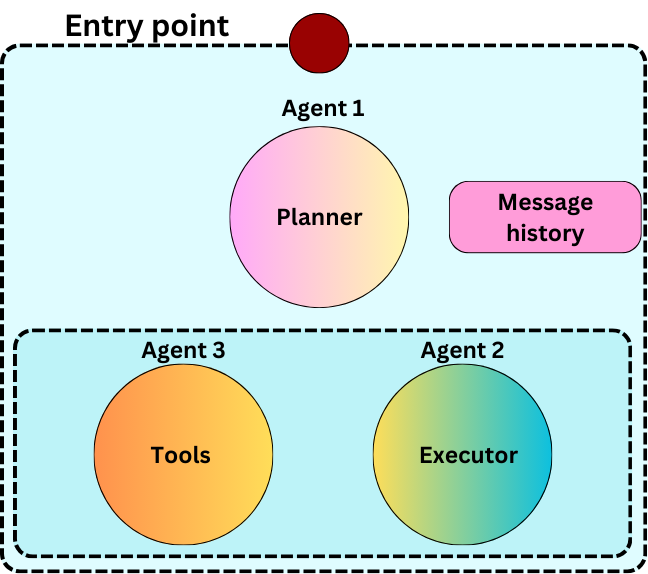

Planning agents

Plan and Execute

So far, we have assumed that the agents will figure out what to do as they get new information, but it is easy for them to get lost in endless loops, forgetting the original task that needs to be solved. That is why it can be helpful for agents to establish an overall plan that needs to be executed. This is especially true with the code writing examples we have seen above when the overall process of following software engineering best practices can be quite predictable. The plan-and-execute paradigm involves a planner and executors.

This paradigm is well adapted to successive tool calls, but it can also be adapted to subordinate calls as part of the hierarchical example above. The idea is that the planner establishes a series of the necessary steps to reach a solution. For example, we use the following prompt to get such a plan:

For the given objective, come up with a simple step by step plan.

This plan should involve individual tasks, that if executed correctly will yield the correct answer. Do not add any superfluous steps.

The result of the final step should be the final answer. Make sure that each step has all the information needed - do not skip steps.

For example, a user could be asking a question resulting in a plan:

Each step simply needs to be executed sequentially according to the plan:

This process might be a bit blindsided, and we are not allowing for potential adjustments to the plan as we accumulate feedback while solving the problem. Imagine that we receive feedback from the “Process Purchase Data“ step:

The CSV file contains multiple records per customer, each representing individual purchases. Our current code assumes that each customer has a single record with all their purchase history in the 'purchase_history' field. Consequently, total purchase amounts per customer are not calculated correctly because purchases are spread across multiple rows.

We can have a replanner agent specializing in assessing the plan and updating it if necessary:

For example, the system prompt for the replanner could be something like this:

For the given objective, come up with a simple step by step plan.

This plan should involve individual tasks, that if executed correctly will yield the correct answer. Do not add any superfluous steps.

The result of the final step should be the final answer. Make sure that each step has all the information needed - do not skip steps.

Your objective was this:

{input}

Your original plan was this:

{plan}

You have currently done the follow steps:

{past_steps}

You received the following feedback from the last step:

{feedback}

Update your plan accordingly. If no more steps are needed and you can return to the user, then respond with that. Otherwise, fill out the plan. Only add steps to the plan that still NEED to be done. Do not return previously done steps as part of the plan.

You can read more about the “Plan and Execution” pattern here.

Reasoning without Observation: Plan-Work-Solve Pattern

The Plan and Execution pattern often involves using a ReAct agent. This process is sequential, therefore slow, and requires injecting the same data over and over within the different prompts during the iterative process of the ReAct agent. This leads to high latency and high costs due to prompt redundancy. The Plan-Work-Solve pattern consists of establishing the tool calls as part of the plan. The full plan is listed in the initial step, then the tool calls are processed in the following step, without reassessment of the plan, and in the final step, the results from the tool calls are aggregated to provide a final solution to the problem. The tall calls can be made concurrently since they are not dependent on each other. In this paradigm, we need a solver agent that can process the aggregated set of information coming from the tool calls:

In general, we should use:

Plan-and-Execute When: The task environment is dynamic and may change during execution. Flexibility and adaptability are required. Immediate feedback can influence the next steps.

Plan-Work-Solve When: The task is well-defined, and all necessary information can be gathered in advance. Efficiency is critical, and interaction costs are high. Decoupling reasoning from observation leads to better performance.

LLMCompiler: Tool calls optimization

The plan and Execution paradigm assumes that all the different steps are to be executed sequentially, whereas the Plan-Work-Solve paradigm makes the drastic assumption that all the calls be executed in parallel. With the LLMCompiler paradigm, the planner constructs a graph of the steps by establishing the dependencies for each of the steps. This way, we can plan for the steps that can be processed concurrently, and we can save on latency while keeping accuracy high.

Because the execution remains somewhat sequential due to the graphical nature of the nature, it provides more opportunities, as in the Plan and Execute paradigm, for replanning after each tool execution and their feedback.

Reflection & Critique

In all the multi-agent strategies we explored so far, the systems had opportunities to autocorrect by having one agent check the output of other agents. This prevents LLM hallucinations from propagating back up to the user. This being said, we accepted this as a positive side-effect without explicitly trying to implement this capability. The pattern requires having an executor agent and a reflection agent.

For example, the system prompt for the executor could be something like:

“Your task is to write Python code that solves the problem presented to you. Ensure that the code is correct, efficient, well-structured, and handles edge cases. Comment the code where necessary and ensure it’s readable and maintainable.

If the user provides critique, respond with a revised version of your previous attempts.”

And for the critique

“Your task is to review and critique the code generated by the Executor Agent. Check for correctness, efficiency, code clarity, and completeness. Identify any potential issues, such as missed edge cases, inefficiencies, or areas where the code could be made more readable. Provide constructive feedback and suggest improvements.”

This is very similar to the teacher-student example above. The active and iterative feedback allows for refinement and autocorrecting.

To an extent, this is also the principle behind LLM-based optimizers for refining system prompts in LLM pipelines. This is the idea behind TextGrad and OPRO.

An improvement to this approach is to evaluate the quality of the Executor’s response before generating critiques. In Reflexion, they compare this approach to reinforcement learning, and they comment on the idea of using LLM-based evaluators or simpler heuristic approaches.

Again, a few of the systems we have talked about already could behave as evaluators and critiques, but here, the emphasis is put on deliberately inducing the refinement. For example, an evaluator could be a simple set of unit tests assessing the quality of a code being generated. Based on the tests that failed and succeeded, the Critique could use this quantitative information to focus its feedback on refining the answer for the failed edge cases.

Here, the message history plays a critical role in making sure the Critique always builds from the information that has already been aggregated.

Let’s stop here!

There are many more agentic patterns, such as Language Agent Tree Search or Self-Discover Agent, and we are going to explore more of those in the following weeks. We have seen a lot in this newsletter, and we better spread our journey in the agentic world in a few more newsletters. See you then!