Understanding XGBoost from A to Z!

I often say that at some point in my career, I became more of an XGBoost modeler than a Machine Learning modeler. On large tabular datasets, it would provide close to optimum results without much effort. LightGBM and Catboost are obviously as good and sometimes better, but I will always keep a special place in my heart for XGBoost.

Let me show you how this algorithm was put together. In a previous video, we looked at how the gradient-boosted trees algorithm works. Now, I am going to show you how XGboost improved on it. Let's get into it!

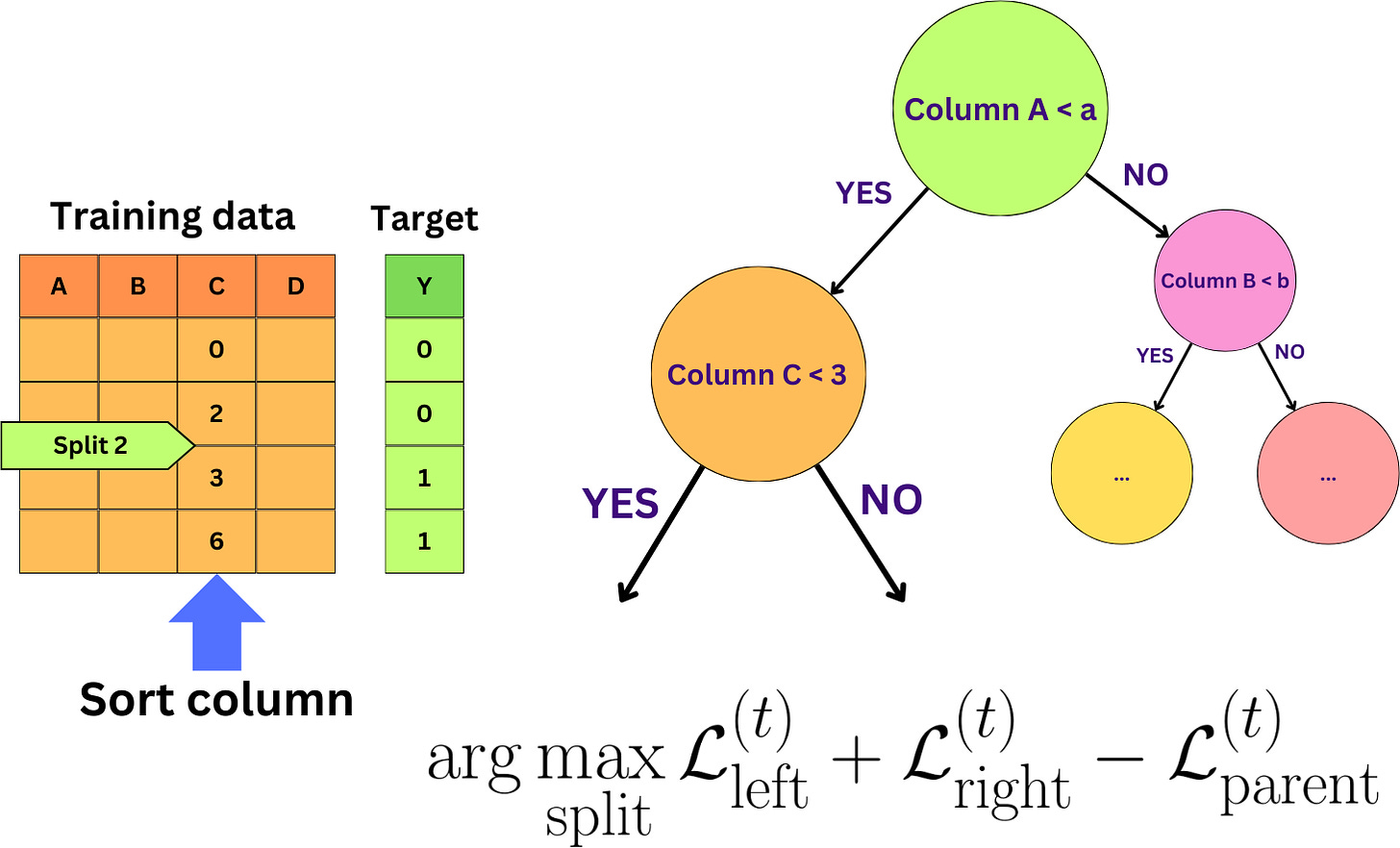

To build a tree, we recursively split the training data until we meet an ending condition, in which case, we reach a leaf node.

So, we need to find the right column and the right value to split at each node.

The problem is, knowing that we already have an ensemble of trained trees, how do we add a new tree?

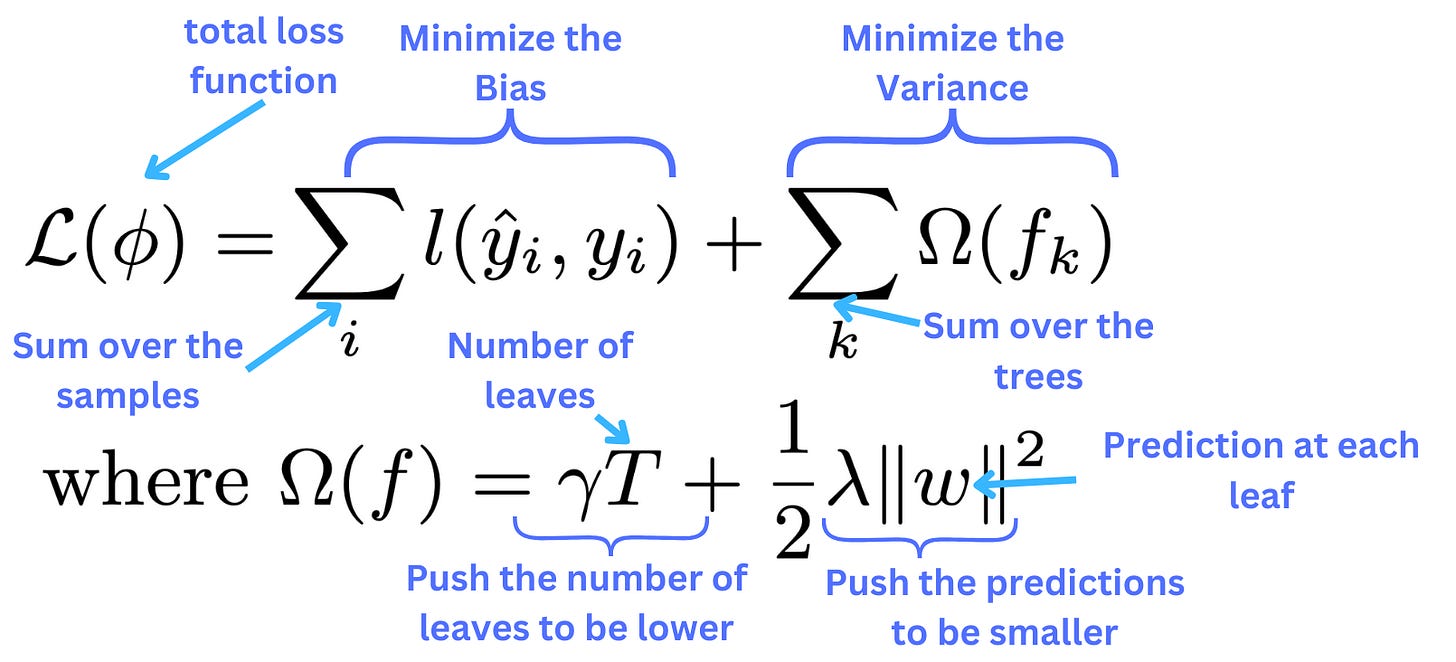

In XGBoost, the loss function is engineered to minimize bias and variance at the same time.

In the sample level loss function, the predictions take into account the previous trees as well as the new tree we want to build.

But, because this loss function is difficult to deal with, we do a second-order Taylor expansion.

Because the loss function related to the previous trees does not depend on the new tree, it is equivalent to minimizing the terms only involving the new tree.

To highlight the prediction weights at each leaf, we decompose the sum over the samples into a sum of the leaves and a sum over the samples within each leaf.

We can now find the optimal weights at each leaf by minimizing the loss function.

So, to find the right split, the problem becomes what is the split that maximizes the difference between the parent’s loss and the children’s losses.

The form of the optimal loss function is interesting because minimizing the loss means maximizing the gradient as well as minimizing its curvature.

XGBoost does not take the split that decreases the most the loss by following the highest gradient; it also considers its curvature

This is obviously very reminiscent of the concept of the Bias-Variance tradeoff, and the gradient sure reminds us of the bias and the curvature of the variance. Even their analytical expressions look similar. While they tend to capture similar information, they are most likely not exactly equal though. Bias and Variance are statistical metrics, while gradient and curvature are functional ones. Despite those differences, this is one of the first times that those concepts are mathematically formalized in a tree-based algorithm as part of the objective function such that the trees learned by XGBoost are optimal ones with respect to the Bias-Variance tradeoff problem.

To choose the right split, we iterate over all the columns and possible splits and choose the split that maximizes the difference in losses.

We can parallelize split search by scanning each feature independently and reducing the resulting splits to the optimal one.

To handle missing values, we just assess each split, putting them left or right, and choose the direction that maximizes the loss difference.

We considered a few of the improvements related to XGBoost, but here are the ones we ignored:

First, all the columns are pre-sorted while keeping a pointer to the original index of the entry. This removes the need to sort the feature at every search.

They used a Compressed Column Format for a more efficient distribution of the data.

They used a cache-aware prefetching algorithm to minimize non-contiguous memory access that results from the pre-sorting step.

They developed an approximated split search algorithm that speeds the tree building further.

Watch the video for more information!

SPONSOR US

Get your product in front of more than 63,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - tens of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing damienb@theaiedge.io.

Thanks Damien; are you sure of your interpretation of curvature wrt overfitting? AFAICT neither the XGBoost documentation nor its original paper mention it as a means to combat overfitting.

The way I see it, the use of curvature here is simply similar to a Newton-Rhaphson method of gradient descent. Said differently, we're basically just using a quadratic local approximation to the loss. Said again differently, the curvature information is mostly about determining the step size in gradient descent.

In particular, if doing least-squares regression, then the quadratic approximation becomes exact, which means that the curvature-based method yields no more and no less overfitting than fitting a regression tree with least squares (which is potentially a lot of overfitting).