What is the Application Layer?

The RAG Application

Optimizing the Indexing Pipeline

Optimizing the Query

Optimizing the Retrieval

Optimizing the Document Selection

Optimizing the Context Creation

Building a Simple RAG Application

Implementing the Indexing Pipeline

Implementing the Retrieval Pipeline

What is the Application Layer?

Deploying a large language model is not enough if the goal is to build an application with it. On its own, a deployed LLM can generate a number, a token, an embedding, or some text, but you need to post-process that output to make it useful for your users.

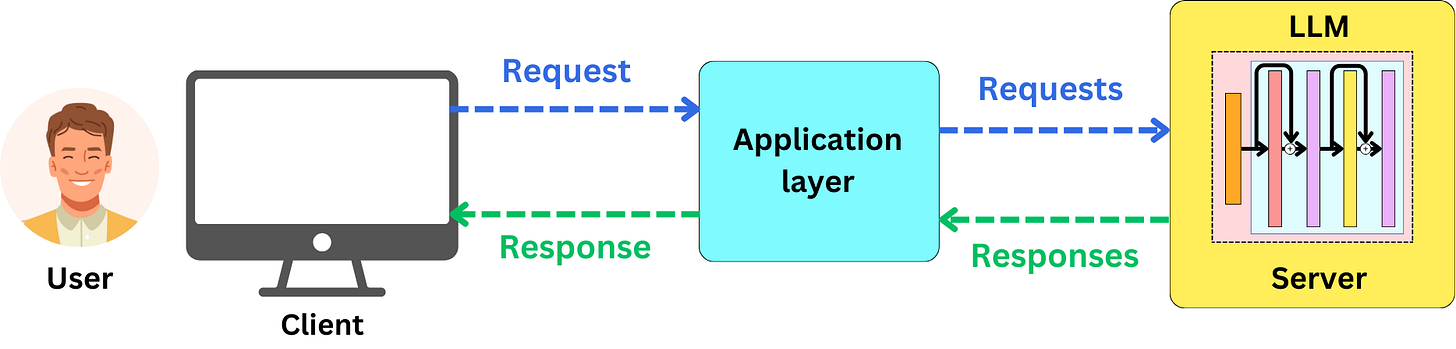

Let's look at a very naive way to implement an application. Imagine we have a large language model deployed on a server, and the user is interacting with a client. The client could be a browser on a computer or a mobile phone, or it could be a native application on a phone, a tablet, or a computer. Through the client, the user sends a request to the LLM, and the server sends its response back to the client.

This is a very limited implementation because it assumes that the LLM server can provide everything the client needs. However, the client may need a lot more. You may need user data and additional logic in how the request or the response gets routed. Handling a request and its response can therefore involve much more than a single call to a server.

That's where the application layer comes into play. The application layer plays an intermediate role between the client and the LLM server. Instead of routing the request from the client directly to the LLM server, the application layer processes the requests and responses.

There's a lot more that can happen in the application layer. We implement business logic there, and much of the application may not be related to the large language model at all. We could have multiple interactions between the LLM server and the application layer before the client's response is ready. We may need to store intermediate data or augment the LLM with proprietary data and tools.

The application layer is a critical piece when we deploy any application and, specifically, when we deploy a large language model application. We cannot just plug the user directly into a large language model server. We need an intermediary layer that handles the logic governing how the user interacts with the LLM server and the other components of the overall application.
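To make the role of the application layer more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `ApplicationLayer`, `handle`, and `fake_llm` are hypothetical names, and `fake_llm` simply stands in for a network call to the LLM server. The sketch shows the responsibilities described above: business logic unrelated to the LLM, augmenting the request with user data, several LLM calls for one client request, and storing intermediate data.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApplicationLayer:
    """Hypothetical intermediary between the client and the LLM server."""

    llm: Callable[[str], str]  # stand-in for a call to the LLM server
    user_data: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def handle(self, user_id: str, message: str) -> str:
        # Business logic that has nothing to do with the LLM.
        if not message.strip():
            return "Please enter a question."

        # Augment the request with data the LLM server does not have.
        profile = self.user_data.get(user_id, {})
        prompt = f"User profile: {profile}\nQuestion: {message}"

        # Several LLM interactions may happen before the client gets a reply.
        draft = self.llm(prompt)
        answer = self.llm(f"Improve this draft answer:\n{draft}")

        # Store intermediate data for later use (e.g. conversation history).
        self.history.append((message, answer))
        return answer


def fake_llm(prompt: str) -> str:
    """Placeholder for the real LLM server; returns a dummy string."""
    return f"[LLM output for a prompt of {len(prompt)} characters]"


app = ApplicationLayer(llm=fake_llm, user_data={"u1": {"plan": "pro"}})
print(app.handle("u1", "What is the application layer?"))
```

The client only ever talks to `handle`; how many times the LLM is called, and with which extra data, stays hidden inside the application layer.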

The RAG Application

One typical large language model application that has seen a lot of success recently is the retrieval augmented generation application, RAG for short. I would like to show you how to implement a quick RAG, but before that, I would like to explain the different concepts that relate to retrieval augmented generation and how we can optimize the different axes of this application.

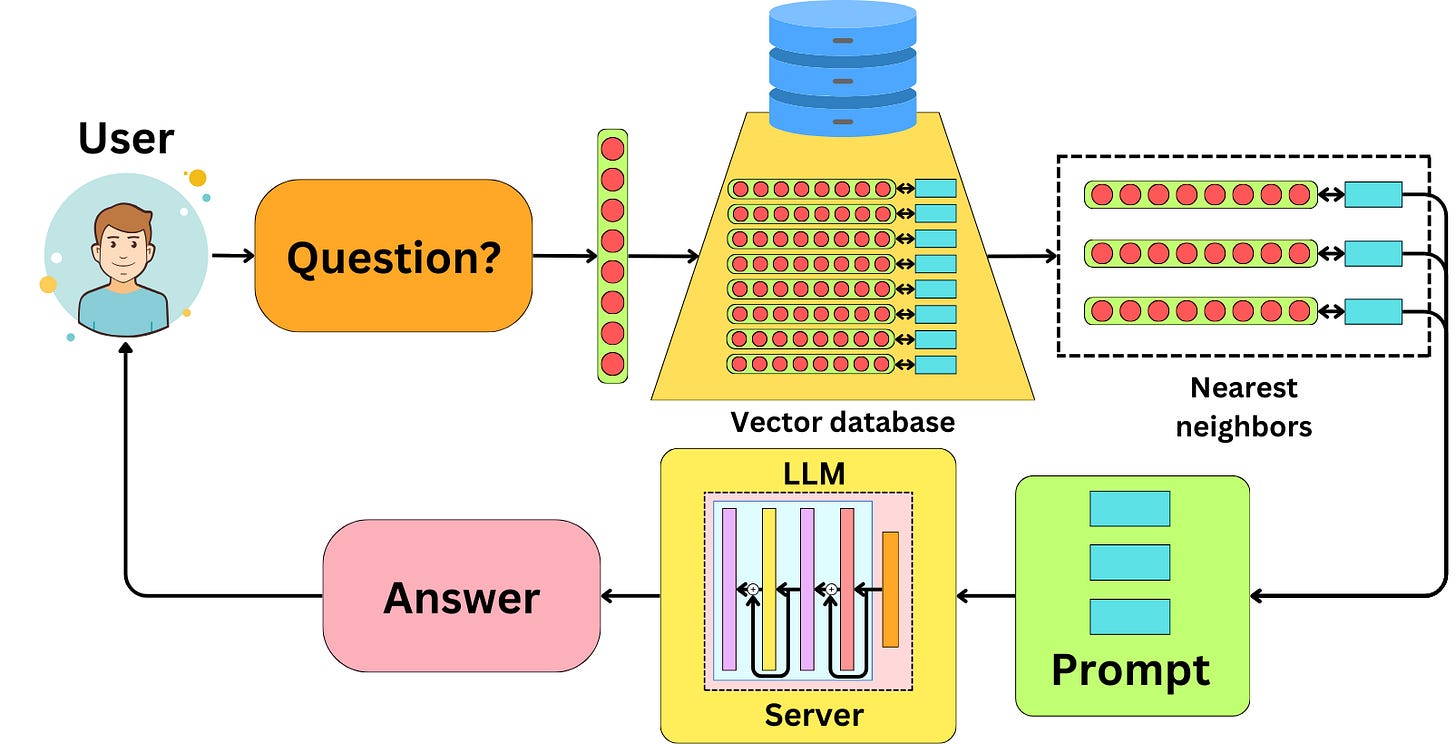

The idea with Retrieval Augmented Generation (RAG) is to encode the data you want to expose to your LLM into embeddings and index that data into a vector database. When a user asks a question, the question is converted to an embedding, and we use it to search for similar embeddings in the database. Once we have found similar embeddings, we construct a prompt with the related data to provide context for an LLM to answer the question. Similarity here is usually measured with the cosine similarity metric.
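The flow above can be sketched in a few lines of Python. This is only an illustration under strong assumptions: `embed` is a toy word-count embedding standing in for a real embedding model, a plain Python list stands in for the vector database, and the names `retrieve` and `build_prompt` are hypothetical. The cosine similarity itself is the standard definition: the dot product of two vectors divided by the product of their norms.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Dot product of the two vectors divided by the product of their norms."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


documents = [
    "The application layer sits between the client and the LLM server.",
    "A vector database stores embeddings for similarity search.",
    "Cosine similarity compares the angle between two vectors.",
]

# Indexing: store each document next to its embedding (the "vector database").
index = [(embed(doc), doc) for doc in documents]


def retrieve(question: str, k: int = 2) -> list:
    """Return the k documents whose embeddings are most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(query_vector, item[0]),
        reverse=True,
    )
    return [doc for _, doc in ranked[:k]]


def build_prompt(question: str, k: int = 2) -> str:
    """Construct a prompt that gives the retrieved documents as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("What does a vector database store?"))
```

The string returned by `build_prompt` is what would be sent to the LLM. Swapping the toy `embed` for a real embedding model and the list for a vector database changes the quality of the results, not the shape of the flow.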

The RAG pipeline can be broken down into five components:

The indexing pipeline: we need to build the pipeline to index the data into a vector database.

The query: a query needs to be created from the question the user asks to search the database.

The retrieval: once we have a query, we need to retrieve the data from the database.

The document selection: not all the data retrieved from the database needs to be passed as part of the context within the prompt, and we can filter out the less relevant documents.

Giving context to the LLM: once the right data has been selected to be passed as context to the LLM, there are different strategies to do so.

Those are the five axes we could optimize to improve the RAG pipeline.

Optimizing the Indexing Pipeline

Indexing by small data chunks

In RAG, the data you retrieve doesn't have to be the data you used to index it! Typically, when we talk about RAG, we assume that the data is stored in its vector representation in a vector database. When we query the database, we then retrieve the data most similar to the query vector. But that doesn't have to be the case!

For example, a document could be quite large and contain multiple, possibly conflicting, pieces of information about different concepts. The query vector usually comes from a question about a single concept, so it is unlikely that the vector representation of the question will be similar to that of the large document. Instead, we could break down the large document into smaller chunks, convert those into their vector representations, and index the large document multiple times using the child documents' vectors. Each small child document is more likely to contain a single concept, so the chunks are great for indexing the data for similarity search. However, they don't contain much context to answer the question, so it is better to retrieve the larger document.
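As a rough sketch of this idea, the Python below indexes each parent document several times, once per child chunk, and returns the parent when a chunk matches. The assumptions are the same kind as in any toy example: `embed` is a word-count stand-in for a real embedding model, `split_into_chunks` naively uses one sentence per chunk, and the document ids and contents are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_into_chunks(text: str) -> list:
    """Naive chunking: one chunk per sentence."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


# Hypothetical parent documents, each covering more than one concept.
parents = {
    "doc-llm": "An LLM server returns tokens or text. "
               "The application layer routes requests to it.",
    "doc-rag": "A vector database stores embeddings. "
               "Cosine similarity ranks the stored embeddings.",
}

# Each index entry pairs a child chunk's vector with the id of its parent,
# so one parent is indexed once per chunk.
index = [
    (embed(chunk), parent_id)
    for parent_id, text in parents.items()
    for chunk in split_into_chunks(text)
]


def retrieve_parents(question: str, k: int = 1) -> list:
    """Search over child vectors, but return the (deduplicated) parent documents."""
    query_vector = embed(question)
    ranked = sorted(
        index,
        key=lambda entry: cosine_similarity(query_vector, entry[0]),
        reverse=True,
    )
    parent_ids = []
    for _, parent_id in ranked:
        if parent_id not in parent_ids:
            parent_ids.append(parent_id)
        if len(parent_ids) == k:
            break
    return [parents[parent_id] for parent_id in parent_ids]


print(retrieve_parents("How does cosine similarity rank embeddings?"))
```

The similarity search only ever sees the small chunks, while the prompt receives the full parent text. Deduplicating parent ids matters because several chunks of the same parent can rank near the top.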

Indexing by the questions the document answers

We can also index the document by the questions that the document answers. As part of the indexing pipeline, we can prompt an LLM to generate the questions that the document could answer. We then get the embeddings of those questions and index the document by them. When a user asks a question, the resulting query vector will be much more similar to the questions about the document than to the document itself. However, the data retrieved should be the document, so the LLM has all the context necessary to answer the question.
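A minimal sketch of question-based indexing, under clearly labeled assumptions: `generate_questions` is a hypothetical stand-in that returns canned questions where a real pipeline would prompt an LLM, `embed` is a toy word-count embedding, and the documents are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


documents = {
    "doc-app": "The application layer sits between the client and the LLM "
               "server and applies business logic.",
    "doc-index": "Large documents are split into small chunks and each chunk "
                 "is embedded before indexing.",
}


def generate_questions(doc_id: str) -> list:
    """Stand-in for an LLM prompted to list the questions a document answers."""
    canned = {
        "doc-app": [
            "What is the application layer?",
            "Why not connect the client directly to the LLM?",
        ],
        "doc-index": [
            "How should large documents be indexed?",
            "Why split documents into chunks?",
        ],
    }
    return canned[doc_id]


# Index each document by the embeddings of the questions it answers.
index = [
    (embed(question), doc_id)
    for doc_id in documents
    for question in generate_questions(doc_id)
]


def retrieve_document(question: str) -> str:
    """Match the user question against indexed questions; return the document."""
    query_vector = embed(question)
    _, best_id = max(
        index, key=lambda entry: cosine_similarity(query_vector, entry[0])
    )
    return documents[best_id]


print(retrieve_document("What is an application layer?"))
```

The user question is compared with other questions, which tend to share its phrasing, yet what gets returned is the full document text.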