The optimization space

The optimization strategies

The experimental design

Building a pipeline

Model selection is the part of the pipeline that involves the ML algorithms themselves. By “model selection”, we mean searching for the optimal model for a specific training dataset. Given features X and a target Y, we would like to learn from the data the optimal transformation F that maps X to Y.

The term “optimal” implies that we have a model performance metric, and the “optimal” model is the one that maximizes that metric.
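One way to write this objective down, using notation that is not in the original text: assume $\mathcal{F}$ denotes the set of candidate models and $M$ a performance metric for which higher is better. Both symbols are introduced here purely for illustration.

$$
F^{*} = \underset{F \in \mathcal{F}}{\arg\max}\; M\big(F(X),\, Y\big)
$$

Each of the axes listed next changes what the candidate set $\mathcal{F}$ contains.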

There are different axes we can consider to optimize our model:

The model parameter space: this is the “space” we optimize over when we “train” a model through statistical learning. The parameters are learned using an optimization principle such as maximum likelihood estimation.

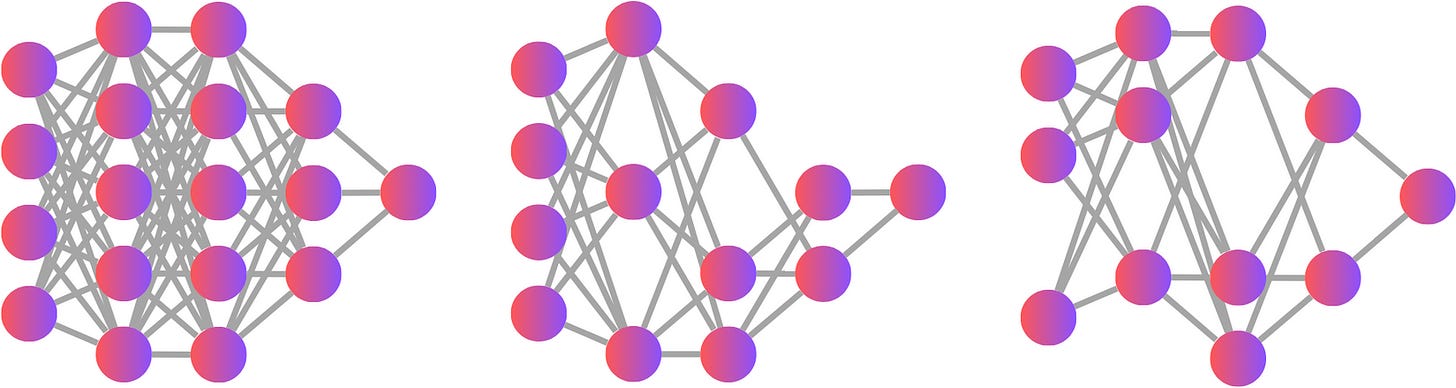
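As a minimal sketch of what learning parameters by maximum likelihood can look like, the snippet below fits the mean and standard deviation of a normal distribution to synthetic samples. The Gaussian model, the function names, and the data are assumptions chosen for illustration; they do not come from the text above.

```python
import math
import random


def gaussian_log_likelihood(data, mu, sigma):
    """Log-likelihood of i.i.d. samples under a normal distribution N(mu, sigma^2)."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma ** 2) - squared_error / (2 * sigma ** 2)


def fit_gaussian_mle(data):
    """Closed-form maximum likelihood estimates of the mean and standard deviation."""
    n = len(data)
    mu_hat = sum(data) / n
    sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in data) / n)
    return mu_hat, sigma_hat


random.seed(0)
# Synthetic samples drawn from N(5, 2^2); the "true" parameters are hypothetical.
samples = [random.gauss(5.0, 2.0) for _ in range(1000)]

mu_hat, sigma_hat = fit_gaussian_mle(samples)
best = gaussian_log_likelihood(samples, mu_hat, sigma_hat)

# Moving away from the estimates can only lower (or keep) the log-likelihood.
shifted = gaussian_log_likelihood(samples, mu_hat + 0.5, sigma_hat)
widened = gaussian_log_likelihood(samples, mu_hat, sigma_hat * 1.5)

print(f"mu_hat={mu_hat:.3f}, sigma_hat={sigma_hat:.3f}")
print(best >= shifted, best >= widened)
```

For most models there is no closed form like this one, and the same objective is maximized numerically, for example with gradient-based methods.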

The model paradigm space: Many supervised learning algorithms could be used to solve the same problem. Algorithms such as Naive Bayes, XGBoost, or a neural network could perform very differently depending on the specific dataset.

The hyperparameter space: these are the settings we cannot optimize using statistical learning; instead, they are choices we need to make to set up our training run.
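To make the distinction concrete, here is a small sketch of a hyperparameter search. It assumes a toy k-nearest-neighbors classifier written from scratch, where `k` is the hyperparameter, and a simple train/validation split; the dataset, the grid of values, and all names are hypothetical.

```python
import random
from collections import Counter


def squared_distance(row, point):
    return (row[0] - point[0]) ** 2 + (row[1] - point[1]) ** 2


def knn_predict(train, point, k):
    """Predict by majority vote among the k training rows closest to `point`."""
    neighbors = sorted(train, key=lambda row: squared_distance(row, point))[:k]
    votes = Counter(label for _, _, label in neighbors)
    return votes.most_common(1)[0][0]


def accuracy(train, validation, k):
    """Fraction of validation rows whose label is predicted correctly."""
    correct = sum(knn_predict(train, (x, y), k) == label for x, y, label in validation)
    return correct / len(validation)


random.seed(1)
# Toy data: two overlapping 2-D Gaussian blobs, one per class.
data = []
for label, center in ((0, 0.0), (1, 2.0)):
    for _ in range(100):
        data.append((random.gauss(center, 1.0), random.gauss(center, 1.0), label))
random.shuffle(data)
train, validation = data[:140], data[140:]

# k is not learned from the data: it is fixed per run and chosen by validation score.
grid = [1, 3, 5, 9, 15]
scores = {k: accuracy(train, validation, k) for k in grid}
best_k = max(scores, key=scores.get)

print(scores)
print("best k:", best_k)
```

Each value in the grid costs a full evaluation run, which is why the size of this space matters as soon as there are several hyperparameters to combine.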

The model architecture space: this is more relevant for neural networks. The model architecture can be characterized by a set of hyperparameters, but searching over it tends to be more complex than a typical hyperparameter search. The size of the search space can be as large as 10^40.

The feature space: We also need to select the right features to feed to our model. Different models will react differently depending on the features we use. With too many features, we may overfit; with too few, we may underfit.
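The sketch below illustrates one naive way to search the feature space: enumerate every non-empty subset of features and score each one on a validation split. It assumes a toy nearest-centroid classifier and synthetic data in which only the first feature is informative; all names and values are hypothetical.

```python
import random
from itertools import combinations


def centroid(rows, cols):
    """Mean of the selected columns over a list of feature vectors."""
    return [sum(r[c] for r in rows) / len(rows) for c in cols]


def nearest_centroid_accuracy(train, validation, cols):
    """Fit one centroid per class on the selected columns, then score on validation rows."""
    centroids = {}
    for label in {lab for _, lab in train}:
        rows = [feat for feat, lab in train if lab == label]
        centroids[label] = centroid(rows, cols)

    def predict(feat):
        return min(
            centroids,
            key=lambda lab: sum((feat[c] - m) ** 2 for c, m in zip(cols, centroids[lab])),
        )

    return sum(predict(feat) == lab for feat, lab in validation) / len(validation)


random.seed(2)
# Feature 0 carries the class signal; features 1 and 2 are pure noise.
data = []
for label in (0, 1):
    for _ in range(100):
        features = [random.gauss(2.0 * label, 1.0), random.gauss(0, 3.0), random.gauss(0, 3.0)]
        data.append((features, label))
random.shuffle(data)
train, validation = data[:140], data[140:]

n_features = 3
subsets = [
    cols
    for size in range(1, n_features + 1)
    for cols in combinations(range(n_features), size)
]
scores = {cols: nearest_centroid_accuracy(train, validation, cols) for cols in subsets}
best_subset = max(scores, key=scores.get)

print(len(subsets), "subsets evaluated")
print("best subset:", best_subset, "accuracy:", scores[best_subset])
```

With n features there are 2^n - 1 non-empty subsets, so exhaustive enumeration like this is only feasible for a handful of features.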

The feature transformation space: We could consider many transformations to improve our model's performance, such as feature encoding or a Box-Cox transformation.
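As an illustration of one such transformation, here is a minimal from-scratch sketch of the Box-Cox transform for a fixed value of its parameter lambda. The function name and the sample values are hypothetical, and in practice lambda is itself a choice to tune, commonly by maximum likelihood.

```python
import math


def box_cox(values, lam):
    """Box-Cox transform of strictly positive values for a given lambda."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive inputs")
    if lam == 0:
        # The limit of (v**lam - 1) / lam as lam -> 0 is the natural logarithm.
        return [math.log(v) for v in values]
    return [(v ** lam - 1) / lam for v in values]


skewed = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]

log_like = box_cox(skewed, 0)     # log transform: compresses large values the most
sqrt_like = box_cox(skewed, 0.5)  # milder, square-root-like compression
shifted = box_cox(skewed, 1)      # lambda = 1 only shifts the values by -1

print(log_like)
print(sqrt_like)
print(shifted)
```

Because each value of lambda yields a different version of the feature, the transformation adds one more dimension to the overall search.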

The optimization strategies

Considering the complexity of those different subspaces, it is often impractical to attempt to solve the problem exactly, and we need to find ways to select a suitable model quickly.